AI and GDPR Compliance in 2025: Navigating the New Regulatory Landscape

Discover how to navigate AI and GDPR compliance in 2025 with practical strategies, regulatory updates, and best practices for data-driven businesses. Expert insights on maintaining privacy while leveraging artificial intelligence technology.

The year 2025 marks a pivotal period for organizations navigating the complex intersection of Artificial Intelligence (AI) and data protection. With the General Data Protection Regulation (GDPR) firmly established and the European Union's AI Act entering critical phases of its staggered implementation, businesses face a dynamic and increasingly stringent regulatory environment. This report provides a comprehensive analysis of the core GDPR principles as they apply to AI, the intricate interplay between GDPR and the nascent EU AI Act, and the key compliance challenges that emerge from this evolving landscape. It outlines strategic measures and best practices, drawing from recent regulatory guidance and enforcement trends, to enable organizations to not only meet their legal obligations but also to build trust and foster responsible innovation. Proactive, integrated compliance is no longer merely a legal necessity but a strategic imperative for long-term viability in the AI-driven economy.

II. Introduction: The Converging Worlds of AI and Data Protection

The rapid evolution and pervasive integration of Artificial Intelligence across diverse sectors are fundamentally reshaping how organizations operate and interact with data. From automating complex decision-making processes to enhancing customer engagement, AI's transformative potential is undeniable. However, this revolution inherently relies on the processing of vast datasets, frequently encompassing personal and sensitive information, for both training and operational phases. This data-intensive characteristic creates a natural point of friction with robust data privacy laws, particularly the General Data Protection Regulation (GDPR), which is designed to protect individuals' personal information by meticulously regulating its handling.

The imperative of GDPR compliance cannot be overstated. The regulation's core objective is to safeguard personal information and streamline the regulatory environment by unifying data protection standards across the European Union. Consequently, any AI application that processes personal data must adhere to GDPR principles to avert substantial regulatory penalties, which can escalate to €20 million or 4% of global annual turnover, whichever is greater.
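The "whichever is greater" rule above is simple arithmetic, and a short sketch makes the crossover point concrete. The function name and constants below are illustrative assumptions, and the result is only the theoretical ceiling described in this article, not a prediction of any actual penalty.

```python
from decimal import Decimal

# Upper bound described above: EUR 20 million or 4% of global annual
# turnover, whichever is greater. Names are hypothetical.
FIXED_CAP_EUR = Decimal("20000000")
TURNOVER_RATE = Decimal("0.04")


def max_fine_cap(global_annual_turnover_eur: Decimal) -> Decimal:
    """Return the theoretical ceiling of a fine, not an expected amount."""
    return max(FIXED_CAP_EUR, TURNOVER_RATE * global_annual_turnover_eur)
```

Under this rule the fixed amount is the ceiling for any organization with turnover below €500 million, because 4% of €500 million equals €20 million; above that, the percentage governs.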

The year 2025 is particularly significant, representing a crucial juncture in this regulatory evolution due to the staggered application timeline of the EU AI Act. While the AI Act formally entered into force on August 1, 2024, specific provisions have already become applicable. For instance, requirements related to AI literacy and the prohibition of certain AI practices took effect on February 2, 2025. Furthermore, obligations concerning general-purpose AI (GPAI) models are scheduled to apply on August 2, 2025. This rolling applicability means that businesses are not facing a static set of rules but rather a continuously evolving array of obligations.

The simultaneous and staggered application of the EU AI Act, layered upon the foundational GDPR, presents organizations with a significant challenge that extends beyond merely adhering to two distinct legal frameworks. It necessitates a deep understanding of their intricate overlaps, potential areas of tension—such as the processing of sensitive data for bias mitigation—and their differing enforcement timelines. This complexity demands continuous adaptation rather than a singular compliance event. Organizations must therefore adopt agile governance models and foster collaboration among legal, technical, and compliance teams to effectively navigate this fragmented yet interconnected regulatory landscape. The intricate nature of these requirements can lead to increased operational costs and the risk of overlooking critical interdependencies between the two regulatory frameworks, particularly for small and medium-sized enterprises.

III. The Foundational Pillars: GDPR's Core Principles Applied to AI

The General Data Protection Regulation (GDPR), through its foundational principles outlined in Article 5, fundamentally governs the entire lifecycle of Artificial Intelligence systems, from their initial development to their ongoing deployment and operation. These principles are not abstract legal concepts but concrete requirements that dictate how personal data must be handled. A thorough understanding of their direct application to AI is indispensable for establishing a compliant and ethical AI framework.

Lawfulness, Fairness, and Transparency: AI systems must have a clear legal basis for processing personal data at every stage. Depending on the context, this may mean obtaining explicit consent, for example where it is the condition relied on for automated decision-making or for processing sensitive data, or relying on another lawful ground such as legitimate interests. Relying on legitimate interests, however, requires a careful balancing of the organization's interests against the fundamental rights and freedoms of data subjects. Transparency is a cornerstone requirement: organizations must provide clear, accessible information about how their AI systems collect, store, and use personal data. This includes the volume and sensitivity of the data, the specific purposes of processing, and any significant effects that automated decision-making might have on individuals.

Purpose Limitation: Organizations are obligated to collect data for specific, explicit, and legitimate purposes. This principle is particularly pertinent to AI, as it means AI systems should not reuse personal data for unrelated tasks without obtaining further consent or establishing a new, compatible legal justification. This prevents the training or deployment of models using data originally gathered for entirely different objectives. Before processing data for any new purpose, a compatibility assessment must be performed to ensure alignment with the original purpose.

Data Minimization: This principle dictates that organizations should process only the data that is strictly necessary for the intended task. This presents a notable challenge for AI, which frequently benefits from and often thrives on large datasets to achieve optimal performance. Organizations must diligently assess the importance and relevance of data, actively avoid overcollection, and conduct regular data audits to identify and eliminate non-essential information. Techniques such as anonymization and pseudonymization are crucial tools for achieving data minimization while still enabling valuable insights from data.

Accuracy: The outputs generated by AI systems must be predicated on accurate, complete, and up-to-date data. Substandard data quality can lead to detrimental or biased outcomes, which not only constitutes a violation of GDPR but also severely erodes trust in the AI system and the organization deploying it. Consequently, regular data validation, cleansing, and continuous monitoring are indispensable practices to maintain data integrity.

Storage Limitation: Personal data should not be retained for longer than is necessary to fulfill its original purpose. This principle directly impacts the retention periods for AI training datasets that contain personal data. Organizations must establish defined retention schedules, and data should be promptly deleted or anonymized once it is no longer required for its initial purpose. Indefinite data retention significantly increases privacy and legal risks.

Integrity and Confidentiality (Security): Robust security measures, commensurate with the sensitivity and volume of the data being processed, are mandated to protect personal data handled by AI systems. This includes the implementation of technical controls such as encryption and access controls, coupled with organizational measures like regular security audits, penetration testing, and comprehensive employee training.

Accountability: Organizations bear the responsibility for demonstrating compliance with all GDPR principles, which necessitates clear oversight and traceability of AI actions and decisions. This involves maintaining detailed records, establishing robust governance frameworks, documenting audit logs, and implementing clear procedures for addressing errors or biases within AI systems. The European Data Protection Board (EDPB) specifically emphasizes the critical role of accountability and meticulous record-keeping for AI models.

The dynamic and evolving nature of AI models, characterized by continuous learning, retraining cycles, and the emergence of new inference mechanisms, means that compliance with GDPR principles such as accuracy, purpose limitation, and storage limitation is not a static achievement but an ongoing, iterative process. As AI models are refined and retrained, the initial "purpose" for which data was collected might subtly shift, or the "necessity" of retaining older training data could change. This requires organizations to conduct continuous data audits, regularly re-assess the compatibility of new processing purposes, and implement dynamic data retention policies rather than relying on static ones. This creates a continuous compliance loop, demanding ongoing monitoring, auditing, and adaptation of data governance frameworks for AI. While this increases operational overhead, it is crucial for avoiding non-compliance and maintaining public trust.
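One way to picture a retention policy that is re-evaluated on every audit run is the sketch below. It is a minimal illustration under stated assumptions: the `TrainingRecord` type, the purpose names, and the retention periods in `RETENTION_DAYS` are all hypothetical, and real schedules would come from the organization's own documented policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class TrainingRecord:
    record_id: str
    purpose: str         # purpose the data was originally collected for
    collected_on: date


# Hypothetical retention schedule: maximum days of retention per purpose.
RETENTION_DAYS = {"fraud_model_training": 365, "support_chat_tuning": 90}


def records_due_for_deletion(records, today):
    """Return records past their retention period.

    Records whose purpose has no schedule entry are also returned, so that
    data without a documented purpose is surfaced rather than kept silently.
    """
    due = []
    for rec in records:
        limit = RETENTION_DAYS.get(rec.purpose)
        if limit is None or today - rec.collected_on > timedelta(days=limit):
            due.append(rec)
    return due
```

Running such a check on a schedule, and again whenever a model is retrained for a changed purpose, gives the audit a concrete list of records to delete or anonymize.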

Furthermore, compliance with one GDPR principle often directly supports and strengthens compliance with others. For instance, a strong commitment to data minimization can have a cascading positive effect across multiple compliance areas. By collecting only the necessary data, organizations inherently reduce the attack surface for potential data breaches, which directly enhances security. This also simplifies data management, making it easier to fulfill transparency obligations and respond to data subject rights requests. Additionally, by limiting the volume of data, organizations can potentially reduce the amount of biased information ingested by AI models, thereby indirectly contributing to bias mitigation efforts. This interconnectedness suggests that prioritizing robust data minimization strategies at the outset of AI development can significantly streamline overall GDPR compliance, making it more efficient and effective.
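As a rough illustration of minimization at the point of ingestion, the sketch below keeps only an allow-list of fields and replaces the direct identifier with a keyed hash. The field names, `ALLOWED_FIELDS`, and both function names are assumptions made for this example. Note that the output is pseudonymized, not anonymized: anyone holding the key can recompute the mapping, so the key must be stored separately and the result is still personal data.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields the model's stated purpose needs.
ALLOWED_FIELDS = {"account_age_days", "transaction_amount"}


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(raw: dict, secret_key: bytes) -> dict:
    """Drop every field not on the allow-list and pseudonymize the user ID."""
    reduced = {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}
    reduced["subject_pseudonym"] = pseudonymize(raw["user_id"], secret_key)
    return reduced
```

For example, a raw record containing `user_id`, `email`, `account_age_days`, and `transaction_amount` would leave this function with the email removed and the user ID replaced by a 64-character hex pseudonym.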

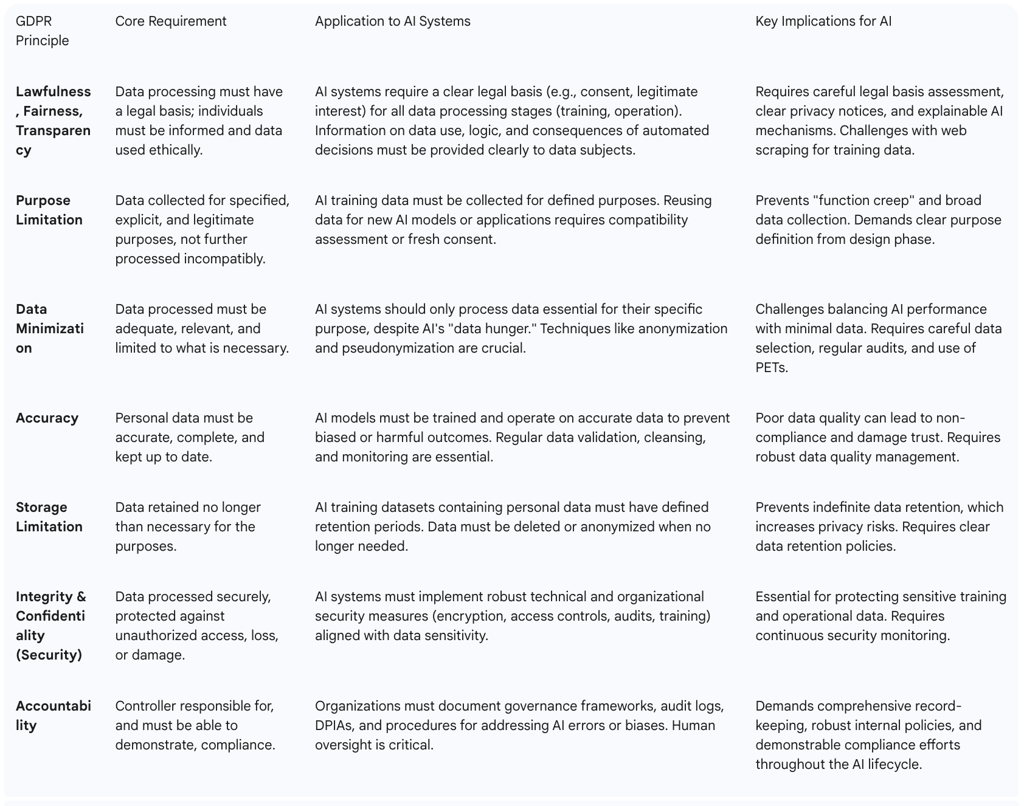

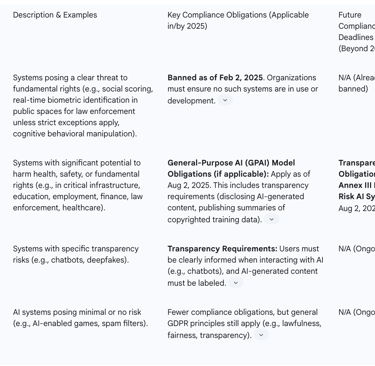

The following table provides a concise overview of how each core GDPR principle translates into practical requirements and implications for AI systems:

Table 1: Key GDPR Principles and their Application to AI Systems

This table serves as a practical checklist and quick reference guide, enabling compliance professionals to rapidly assess their AI initiatives against fundamental GDPR requirements. It aids in training, policy development, and internal audits, making complex legal concepts immediately actionable for AI-specific scenarios.

IV. The New Regulatory Horizon: Interplay of GDPR and the EU AI Act in 2025

The year 2025 marks a critical phase in the European Union's regulatory landscape for Artificial Intelligence, with the EU AI Act's staggered implementation coming further into effect. This section delineates the EU AI Act's risk-based framework and, crucially, clarifies its intricate relationship with the General Data Protection Regulation. While the AI Act introduces AI-specific obligations, it explicitly complements, rather than replaces, GDPR, especially when AI systems process personal data. Understanding this dual compliance requirement is paramount for businesses operating in the EU.

The EU AI Act establishes a comprehensive framework for AI governance, categorizing AI systems based on their potential impact on citizens' rights and safety. This risk-based approach dictates the stringency of compliance obligations:

Unacceptable Risk AI: These systems are strictly prohibited due to their clear potential for substantial harm to individuals' fundamental rights or safety. Examples include AI used for social scoring, cognitive behavioral manipulation, certain forms of biometric surveillance (such as real-time remote biometric identification in public spaces by law enforcement, subject to narrow exceptions), or untargeted web scraping to create facial recognition databases. The ban on unacceptable risk AI systems became applicable on February 2, 2025. Organizations must ensure no such systems are in use or development.

High-Risk AI: These systems are subject to stringent regulations because of their significant potential to negatively affect safety or fundamental rights. This category includes AI deployed in critical infrastructure, education, employment, finance, legal decisions, and healthcare. High-risk obligations do not take effect in 2025: the compliance deadline is August 2, 2026, for Annex III systems and August 2, 2027, for Annex I systems. Compliance obligations for high-risk AI include requirements for human oversight, bias detection and mitigation, transparency, and explainability.

Limited and Minimal Risk AI: Systems falling into these categories have fewer compliance obligations. However, limited risk AI systems still require transparency, such as informing users when they are interacting with an AI (e.g., chatbots) and clearly labeling AI-generated content like deepfakes. Minimal risk AI systems, such as AI-enabled games or spam filters, have the lightest compliance burden, though general GDPR principles still apply.

General-Purpose AI (GPAI) Models: Obligations for GPAI models are scheduled to apply on August 2, 2025. These models, exemplified by large language models (LLMs), are not classified as high-risk by default but must adhere to transparency requirements and EU copyright law. This includes disclosing when content has been generated by AI and publishing summaries of copyrighted data used for training.
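Because the obligations above phase in on different dates, a compliance team may find it useful to encode the timeline once and query it by date. The sketch below uses only the dates stated in this article; the `MILESTONES` structure and function name are illustrative assumptions, and the labels are summaries rather than legal definitions.

```python
from datetime import date

# Application dates as stated in this article (summary labels only).
MILESTONES = [
    (date(2025, 2, 2), "Prohibited-practice ban and AI literacy requirements"),
    (date(2025, 8, 2), "General-purpose AI (GPAI) model obligations"),
    (date(2026, 8, 2), "High-risk obligations for Annex III systems"),
    (date(2027, 8, 2), "High-risk obligations for Annex I systems"),
]


def obligations_in_effect(on: date) -> list:
    """Return the milestone labels that are already applicable on a given date."""
    return [label for start, label in MILESTONES if on >= start]
```

Calling `obligations_in_effect(date(2025, 9, 1))` would return the first two entries, which matches the article's point that 2025 brings the prohibitions, AI literacy, and GPAI obligations into play while high-risk obligations follow later.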

The relationship between GDPR and the EU AI Act is one of complementarity. The AI Act explicitly clarifies that GDPR always applies when personal data is processed by AI systems. Both regulations share the overarching goal of safeguarding fundamental rights and mitigating harm through robust risk management. Significant overlap exists, particularly in areas such as lawful processing, automated decision-making, transparency, and risk assessments. For instance, any AI system processing personal data must adhere to GDPR principles, including having a lawful basis for processing and ensuring data accuracy. Organizations are therefore required to conduct both Data Protection Impact Assessments (DPIAs) under GDPR and AI Risk Assessments under the AI Act when AI systems handle personal data. These dual assessments are crucial for evaluating risks and ensuring alignment with legal requirements. The European Data Protection Board (EDPB) has further clarified this interplay, noting that GDPR transparency (informing data subjects about personal data processing) and AI Act transparency (informing users/entities about AI system functioning) serve different purposes, involve different actors, and relate to distinct categories of information, though both ultimately require accountability.

The explicit statement that GDPR always applies when personal data is processed by AI systems, alongside the AI Act's new requirements, establishes a dual compliance burden for organizations. This is not merely about fulfilling two separate sets of legal requirements but about integrating compliance efforts holistically. Organizations cannot treat AI Act compliance and GDPR compliance as isolated tasks. Instead, they must adopt a unified policy framework and embed compliance considerations at every stage of AI development, leveraging existing data protection infrastructure. For example, a comprehensive data inventory maintained for GDPR Article 30 purposes can also serve as the foundational basis for classifying AI systems under the AI Act, demonstrating data minimization and purpose limitation compliance. This dual burden necessitates robust cross-functional collaboration among legal, technical, and compliance teams. Organizations with strong GDPR foundations are inherently better positioned to adapt to the AI Act, as many requirements—such as transparency, data governance, and risk management—are reinforced or expanded upon by the new legislation.
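The idea of one inventory serving both regimes can be sketched as a single record type that carries GDPR-style processing details alongside an AI Act risk tier. Everything here is a hypothetical illustration: the field names, the `open_actions` helper, and in particular the rule that a high-tier system handling personal data triggers a DPIA check are internal-policy assumptions for the example, not legal tests.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AISystemRecord:
    name: str
    purpose: str
    risk_tier: RiskTier
    personal_data_categories: List[str] = field(default_factory=list)
    lawful_basis: Optional[str] = None
    dpia_completed: bool = False


def open_actions(rec: AISystemRecord) -> List[str]:
    """List outstanding compliance actions under the assumed internal policy."""
    actions = []
    if rec.risk_tier is RiskTier.UNACCEPTABLE:
        actions.append("stop: prohibited practice")
    if rec.personal_data_categories:
        if rec.lawful_basis is None:
            actions.append("document a lawful basis")
        if rec.risk_tier is RiskTier.HIGH and not rec.dpia_completed:
            actions.append("complete a DPIA")
    return actions
```

Keeping both sets of attributes on one record means a single review of the inventory surfaces gaps under either framework, rather than requiring two parallel registers.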

Despite the general clarity on dual applicability, specific areas of overlap continue to present legal uncertainty and potential conflict. A notable example is the processing of sensitive data for bias mitigation. The AI Act explicitly permits the processing of sensitive personal data (e.g., race, health data) for the purpose of detecting and correcting algorithmic bias in high-risk AI systems. However, GDPR Article 9 imposes strict prohibitions on processing sensitive data unless specific exceptions apply, such as explicit consent or substantial public interest. Legal scholars continue to debate whether the AI Act's bias mitigation mandate constitutes sufficient legal justification under GDPR's 'substantial public interest' exemption, creating a challenging legal puzzle for businesses. This tension forces organizations into a dilemma: they risk violating the AI Act by not comprehensively testing for bias, or they risk violating GDPR by processing sensitive data without clear legal grounds. The absence of explicit guidelines in this area can lead to inconsistent regulatory enforcement and potentially costly compliance issues. This specific conflict underscores a broader challenge in regulating rapidly evolving technology: the inherent difficulty for legislation to keep pace with practical needs. It highlights the urgent need for policymakers to provide explicit guidance or legislative reforms to harmonize these provisions, thereby enabling responsible AI development without compromising fundamental privacy rights.

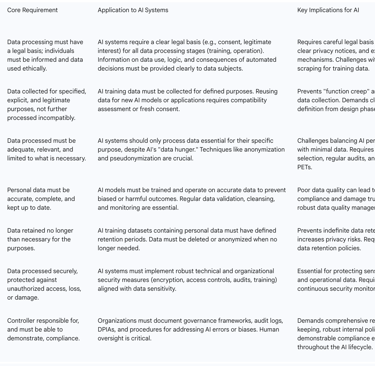

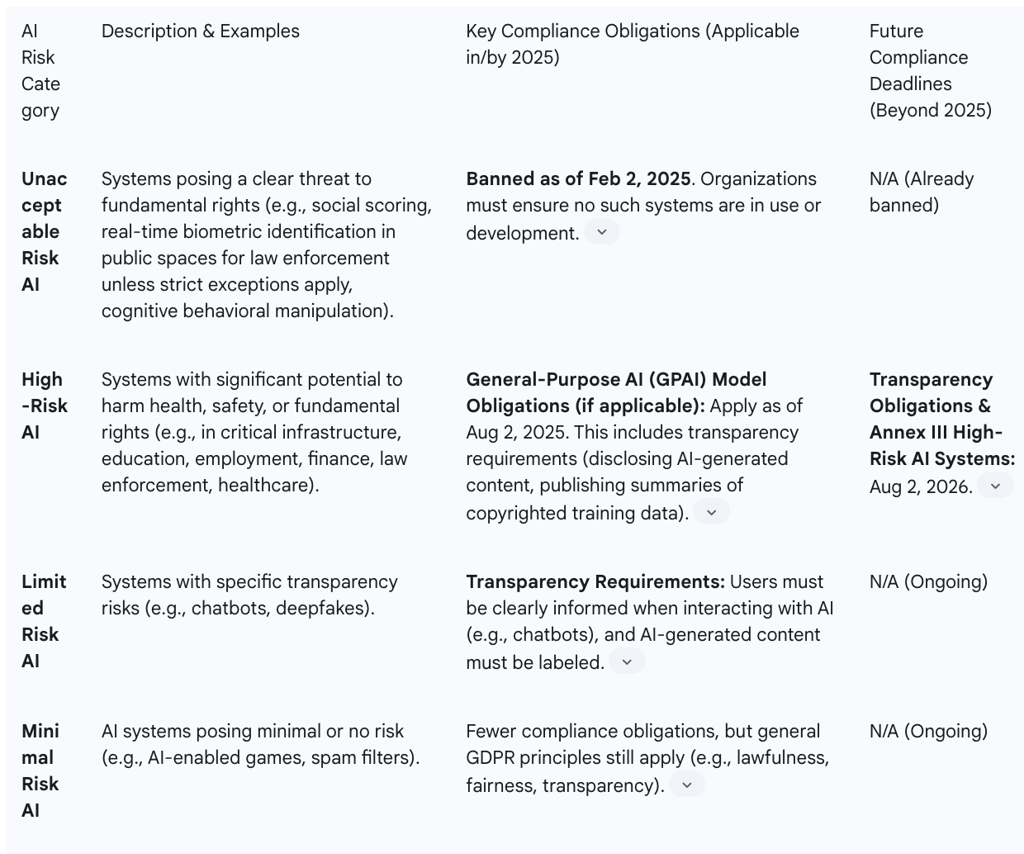

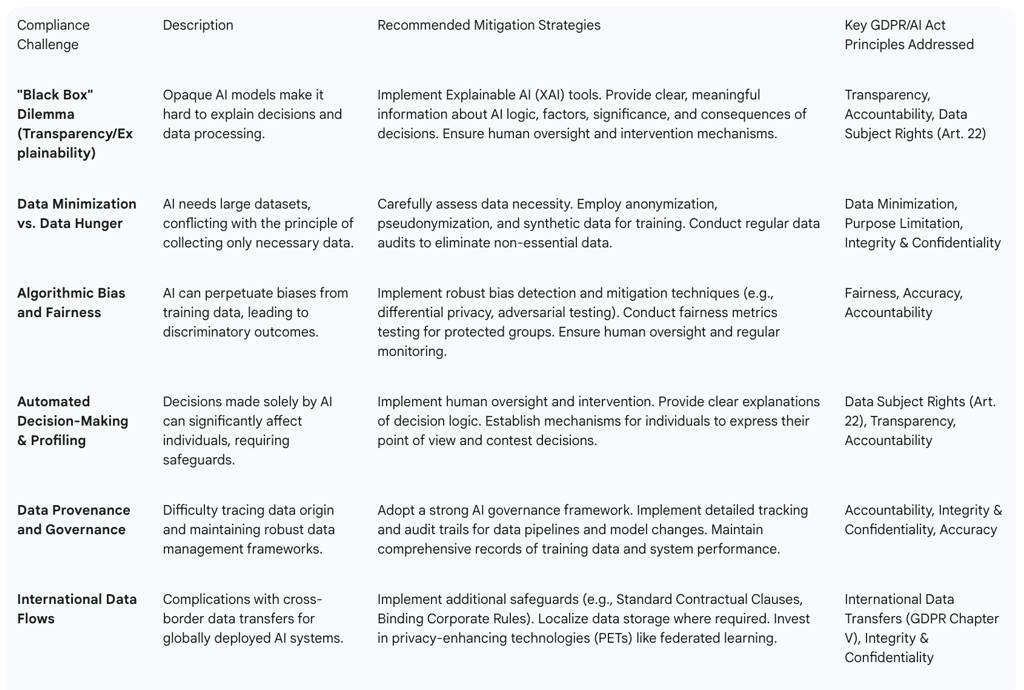

The following table provides a clear roadmap for organizations, allowing them to quickly identify which parts of their AI portfolio are immediately affected by the AI Act in 2025 and to plan for future compliance efforts. It helps prioritize resources and ensures that businesses are not caught off guard by new regulatory requirements.

Table 2: EU AI Act Risk Classification and Key Compliance Obligations (2025 Timeline)

V. Navigating Key Compliance Challenges in AI Development and Deployment

The integration of Artificial Intelligence with personal data processing introduces a unique set of compliance challenges that organizations must proactively address. These challenges stem from the inherent characteristics of AI systems and their interaction with established data protection principles, creating complex legal and technical hurdles. Successfully navigating these requires a deep understanding of the friction points and a commitment to innovative solutions.

A. The "Black Box" Dilemma: Transparency and Explainability: Many advanced AI models, particularly deep learning systems, are inherently opaque. This "black box" nature makes it profoundly challenging to explain precisely how personal data is processed within them and how specific decisions are reached. GDPR, however, places a strong emphasis on transparency and on individuals' right to understand the logic behind automated decisions, especially those that significantly affect them (Article 22, read together with the information and access rights in Articles 13 to 15). This includes providing meaningful information about the logic involved, the factors contributing to a decision, and its significance and consequences. The core challenge lies in increasing algorithmic transparency without compromising system performance or revealing sensitive proprietary information.

The tension between GDPR's demand for explainability and the "black box" nature of complex AI models often forces a trade-off with system performance or development complexity. Achieving high levels of explainability frequently requires either using simpler AI models, which might limit predictive power, or adding interpretability layers to opaque models, which can introduce significant computational and development overhead. This is a fundamental and ongoing design challenge for AI developers, both technical and legal, requiring strategic choices about AI architectures and investment in Explainable AI (XAI) tools.

B. Data Minimization vs. Data Hunger: A fundamental tension exists between the data-hungry nature of sophisticated AI systems and GDPR's principle of data minimization. While AI models typically require vast volumes of training data to learn effectively and achieve optimal performance, GDPR mandates that organizations process only the data strictly necessary for a specific purpose. The challenge, therefore, is to strike a delicate balance: ensuring sufficient training data for effective AI functionality while adhering to the principle that data must be "adequate, relevant, and limited to what is necessary".

C. Algorithmic Bias and Fairness: AI systems can inadvertently perpetuate or even amplify biases present in their training data, leading to discriminatory outcomes that directly violate GDPR's fairness principles. A significant hurdle in addressing this is that effectively detecting and correcting algorithmic bias often requires access to sensitive personal data, such as racial or health data, which is subject to stricter protections under GDPR Article 9. This creates a notable tension between the EU AI Act's objective of bias mitigation and GDPR's stringent privacy rules, posing a complex legal dilemma for organizations.

D. Automated Decision-Making and Profiling: GDPR Article 22 grants individuals the right not to be subject to decisions based solely on automated processing, including profiling, if these decisions produce legal effects concerning them or similarly significantly affect them. Examples include automated credit scoring, filtering job applications, or predictive policing. The challenge for organizations is to ensure that such AI-driven decisions incorporate human oversight, provide a clear right to explanation, and allow individuals to contest the outcomes. The Court of Justice of the European Union (CJEU) has clarified that explanations must be clear and meaningful, outlining the procedure and principles applied, but organizations are not necessarily required to disclose the algorithm itself.

E. Data Provenance and Governance: Tracking the origin, or provenance, of data used to train AI models presents a significant compliance challenge. This is particularly complex when data is derived from the outputs of other models or aggregated from multiple, disparate sources. Maintaining robust data governance frameworks, which include detailed records of training data, identified biases, and system performance throughout the AI lifecycle, is crucial for ensuring accountability and traceability.

F. International Data Flows: AI systems frequently leverage global computing resources and data sources, which complicates compliance with cross-border data transfer regulations. The invalidation of the Privacy Shield framework and the subsequent introduction of mechanisms like the EU-U.S. Data Privacy Framework necessitate that organizations implement additional safeguards when transferring personal data to third countries. This remains an ongoing and evolving challenge within the broader global privacy landscape.

Ensuring high data quality is not merely an operational best practice but serves as a critical enabler for compliance across multiple GDPR principles and AI Act requirements. If data quality is poor—meaning it is inaccurate, incomplete, biased, or lacks traceable origin—it directly undermines the accuracy principle, exacerbates concerns about algorithmic bias, complicates the management of purpose limitation and storage limitation, and hinders overall accountability and transparency efforts. Conversely, a strategic investment in data quality—through robust cleansing, validation, and comprehensive provenance tracking—directly improves data accuracy, significantly aids in bias detection and mitigation, supports data minimization by identifying and eliminating non-essential data, and strengthens the entire data governance framework. Therefore, data quality should be viewed as a foundational compliance strategy. Organizations that prioritize data quality from the initial stages of data collection through AI model deployment will find it considerably easier to meet diverse GDPR and AI Act requirements, reduce associated risks, and ultimately build more trustworthy AI systems.

VI. Strategic Compliance Measures and Best Practices for Organizations

To effectively navigate the complex regulatory landscape of AI and GDPR in 2025, organizations must adopt a proactive and integrated approach to compliance. This involves embedding data protection principles throughout the AI lifecycle, leveraging technological solutions, and fostering a robust governance culture. These strategic measures not only ensure adherence to legal obligations but also build trust with data subjects and stakeholders, positioning organizations as responsible innovators in the AI era.

A. Privacy by Design and Default: This foundational approach mandates the embedding of privacy safeguards and data protection mechanisms into the design of AI systems and processes from their earliest conceptual stages, rather than treating them as an afterthought. It involves ensuring that default settings within AI systems maximize data protection, for instance, by setting data sharing to the most restrictive level unless explicitly configured otherwise. For AI, this translates into a proactive consideration of privacy implications during every phase of model development, data collection, and system architecture design.

B. Data Protection Impact Assessments (DPIAs) and AI Risk Assessments: DPIAs are a legal requirement under GDPR for high-risk data processing activities, a category that encompasses many AI applications due to their scale and potential impact. Concurrently, the EU AI Act mandates specific AI Risk Assessments to classify AI systems based on their potential risk levels. Organizations are therefore required to conduct both DPIAs and AI Risk Assessments when their AI systems handle personal data, ensuring a comprehensive evaluation of risks and alignment with both sets of legal requirements. These assessments are vital for describing processing operations, evaluating potential risks, and identifying appropriate mitigation strategies.

C. Leveraging Privacy-Enhancing Technologies (PETs): Privacy-Enhancing Technologies (PETs) are crucial for bridging the inherent tension between AI's need for large datasets and the GDPR principles of data minimization and privacy. These technologies enable organizations to work with data in a privacy-preserving manner, thereby allowing AI innovation to flourish even with sensitive or restricted data. Key PETs include:

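Anonymization: The irreversible removal of personally identifiable information from datasets, so that data can no longer be traced back to specific individuals by any means reasonably likely to be used.

Pseudonymization: The replacement of direct identifiers with artificial pseudonyms, so that re-identification is possible only with additional information that is kept separately under appropriate safeguards. Unlike anonymized data, pseudonymized data remains personal data under GDPR.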

Federated Learning: A technique that trains AI models on decentralized datasets located at their source, eliminating the need to centralize raw data and thereby reducing data transfer risks.

Differential Privacy: A mathematical framework that adds a controlled amount of statistical noise to data or query responses, obscuring individual data points while still allowing for aggregate insights.

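Synthetic Data: The generation of artificial datasets that statistically mimic the properties and patterns of real-world data without reproducing actual individuals' records. Because generators trained on personal data can still leak information about real people, synthetic datasets should be tested for re-identification risk before being treated as free of personal information.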

Homomorphic Encryption and Secure Multiparty Computation: Advanced cryptographic techniques that enable computations to be performed directly on encrypted data or across distributed datasets without requiring decryption, ensuring data confidentiality throughout the processing lifecycle.

These technologies are not merely compliance tools; they actively enable AI innovation by letting organizations use data that would otherwise be too sensitive or restricted under GDPR. This makes it possible to develop and train more robust and accurate AI models without incurring the full privacy risks associated with raw personal data. Investing in PETs should therefore be viewed as a strategic investment in responsible AI development and a source of competitive advantage in a privacy-conscious market.
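To make the differential privacy entry above more concrete, the sketch below applies the Laplace mechanism to a simple counting query. It is a minimal illustration, not a production implementation: the function names are assumptions, the standard-library random generator is not cryptographically secure, and a real deployment would also track the cumulative privacy budget across queries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample zero-centred Laplace noise.

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Answer 'how many values satisfy predicate?' with Laplace noise.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

A smaller `epsilon` adds more noise and gives stronger privacy at the cost of accuracy, which is the same utility-versus-protection trade-off the article describes for PETs in general.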

D. Robust Data Governance Frameworks: Implementing strong data governance frameworks is essential for managing compliance risks, ensuring lawful data processing, and promoting ethical AI development. This includes establishing clear data collection policies, defining data ownership and accountability roles, implementing data catalogs, and maintaining comprehensive metadata for all datasets. Regular monitoring and auditing of AI systems and data governance processes are critical to proactively identify and address any instances of non-compliance.

E. Ensuring Human Oversight and Data Subject Rights: GDPR grants individuals various fundamental rights over their personal data, which apply throughout the entire AI system lifecycle. These rights include access, rectification, erasure (the "right to be forgotten"), objection to processing, and data portability. Organizations must develop and implement suitable mechanisms to respond to these requests in a timely and adequate manner, ideally from the design phase of the AI system onwards. Furthermore, human oversight is essential for ensuring that AI systems operate in a fair, transparent, and accountable manner, particularly for decisions that have a significant impact on individuals. This right is not unconditional: where a solely automated decision is permitted under GDPR Article 22 because it is necessary for a contract or based on explicit consent, the controller must at least safeguard the individual's right to obtain human intervention, express their point of view, and contest the decision.
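In practice, human oversight often starts with a simple gate that decides which AI outputs may be released automatically and which must go to a reviewer. The sketch below shows one possible shape for such a gate. The `Decision` type, its flags, and the queue names are hypothetical, and how an organization determines whether an effect is "significant" is a legal assessment that this code takes as an input rather than resolves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    subject_id: str
    outcome: str
    solely_automated: bool     # no meaningful human involvement so far
    significant_effect: bool   # legal or similarly significant effect


def needs_human_review(decision: Decision) -> bool:
    """Flag decisions that are both solely automated and significant."""
    return decision.solely_automated and decision.significant_effect


def route(decision: Decision) -> str:
    """Return the name of the (hypothetical) queue the decision goes to."""
    return "human_review_queue" if needs_human_review(decision) else "auto_release"
```

Logging every routing result alongside the decision also produces the audit trail that the accountability principle calls for.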

F. Employee Training and AI Literacy: Regular and comprehensive training for employees on data minimization principles, legal requirements, best practices, and the potential consequences of non-compliance is crucial. Fostering a strong culture of privacy awareness and empowering employees to identify and report any unnecessary data collection or retention practices significantly strengthens overall compliance. Investing in AI literacy for all stakeholders, from developers to end-users, is vital for responsible deployment.

G. Third-Party Vendor Compliance: Many businesses increasingly rely on third-party AI tools and services. It is paramount to ensure that these vendors also comply with GDPR. This necessitates thorough vetting of their data protection practices and the establishment of clear Data Processing Agreements (DPAs) that meticulously outline roles, responsibilities, and data protection obligations. It is important to recognize that if a data processor or its services violate GDPR, it can lead to significant consequences for the data controller.

Robust documentation and governance frameworks, which are central to GDPR's accountability principle, serve as a proactive defense mechanism against regulatory scrutiny and potential fines. Comprehensive documentation, including model cards, system mapping, records of bias testing, and detailed audit trails, along with a well-defined governance framework, provides tangible evidence of compliance efforts. The European Data Protection Board (EDPB) has explicitly stated that if effective anonymization measures cannot be confirmed from documentation, supervisory authorities may conclude that accountability obligations have not been met. Given the significant financial penalties organizations face for non-compliance, this proactive approach not only helps identify and mitigate risks internally but also acts as a crucial defense during regulatory investigations, potentially reducing the severity of penalties or even preventing them altogether. Accountability should therefore be viewed as an ongoing, auditable process that builds internal resilience and external trust.

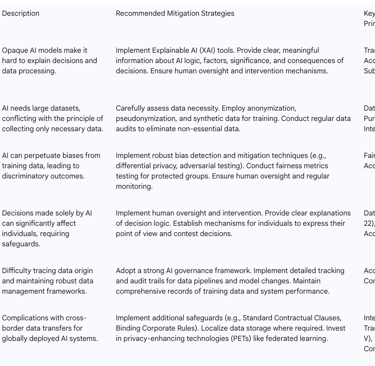

The following table offers a clear, concise guide to implementing practical solutions for complex AI-related compliance issues. It facilitates decision-making, resource allocation, and the development of internal policies and procedures.

Table 3: Key Compliance Challenges and Recommended Mitigation Strategies

VII. Regulatory Guidance and Enforcement Trends (2023-2025)

The evolving landscape of AI and GDPR compliance is shaped not only by legislation but also by authoritative guidance from supervisory authorities and precedents set through enforcement actions. This section provides critical insights into the interpretations and expectations of key regulatory bodies, such as the European Data Protection Board (EDPB) and the French Data Protection Authority (CNIL), alongside an analysis of notable GDPR enforcement actions related to AI systems between 2023 and 2025. These trends offer invaluable lessons and highlight areas of increasing regulatory scrutiny.

Insights from the European Data Protection Board (EDPB) Opinions: The EDPB's Opinion 28/2024 offers valuable, though non-binding, guidance on the intersection of AI models and data protection, particularly concerning GDPR compliance. The EDPB emphasizes the necessity for case-by-case assessments to determine GDPR applicability to AI models, underscoring the importance of accountability and meticulous record-keeping. It clarifies that AI models trained on personal data cannot automatically be considered anonymous; rigorous evidence is required to prove that personal data cannot be extracted or inferred from the model's outputs. The opinion also states that "legitimate interests" can serve as an appropriate legal basis for AI model training and development under specific conditions, which include performing a necessity test and implementing safeguards to prevent the storage or regurgitation of personal data. Crucially, the EDPB stresses that all data subject rights must be respected, including the right to object when legitimate interest is used as a legal basis for processing.

Insights from CNIL Recommendations: The CNIL (French Data Protection Authority) actively contributes to clarifying the legal framework for AI, aiming to foster innovation while simultaneously protecting fundamental rights. Their recommendations provide concrete solutions for informing individuals whose data is used and for facilitating the exercise of their rights. The CNIL applies the principle of purpose limitation with flexibility for general-purpose AI systems, allowing operators to describe the type of system and its key functionalities if all potential applications are not known at the training stage. They also clarify that data minimization does not preclude the use of large training datasets, provided that the data is carefully selected and cleaned to optimize algorithm training while avoiding unnecessary processing of personal data. Furthermore, the retention of training data can be extended if justified and if the dataset is subject to appropriate security measures, particularly for scientifically and financially significant databases that may become recognized standards within the research community. The CNIL offers specific guidance on handling data subject rights requests, distinguishing between requests related to training datasets and the AI model itself, and emphasizing proportionality in responses.
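The idea of selecting and cleaning training data to avoid unnecessary processing can be sketched as a small preprocessing step. The example below is a minimal illustration, assuming a hypothetical allow-list of fields and a hypothetical `customer_id` column; it is not taken from the CNIL recommendations. Note that keyed hashing is pseudonymization, not anonymization, so the output remains personal data under GDPR.

```python
import hashlib
import hmac

# Hypothetical allow-list: only the fields the training task actually needs.
ALLOWED_FIELDS = {"age_band", "region", "product", "outcome"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256) so the raw identifier is not stored in clear."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Drop every field not on the allow-list and replace the direct identifier."""
    cleaned = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    # Keep a stable reference so erasure or objection requests can still be matched.
    cleaned["subject_ref"] = pseudonymize(record["customer_id"], secret_key)
    return cleaned


# Hypothetical raw record; the email and free-text note are not needed for training.
raw = {
    "customer_id": "C-1001",
    "email": "jane@example.com",
    "support_note": "called about a late payment",
    "age_band": "30-39",
    "region": "FR",
    "product": "loan",
    "outcome": 1,
}
print(sorted(minimize(raw, secret_key=b"rotate-me-regularly")))
```

Keeping a pseudonymous `subject_ref` is a design choice: it lets the organization locate a person's rows in the training set when handling rights requests, at the cost of retaining a linkable key that must itself be protected.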

These approaches by regulators like CNIL and EDPB highlight a principle of proportionality and context in applying GDPR. While GDPR principles are universal, these authorities are emphasizing a nuanced, context-specific application, particularly for complex AI systems. This indicates a move away from rigid interpretations towards more flexible compliance. Regulators acknowledge that a "one-size-fits-all" approach is impractical for AI development. They provide guidance that allows for flexibility in applying principles like purpose limitation and data minimization, recognizing the unique technical and operational realities of AI, such as the need for large datasets for large language models and the evolving nature of functionalities. This means organizations should not view GDPR as an absolute barrier to AI innovation. Instead, they must be prepared to thoroughly document their reasoning and the technical and organizational measures taken, demonstrating that their approach is justified and proportionate to the risks, even if it deviates from a literal interpretation of certain principles in a non-AI context.

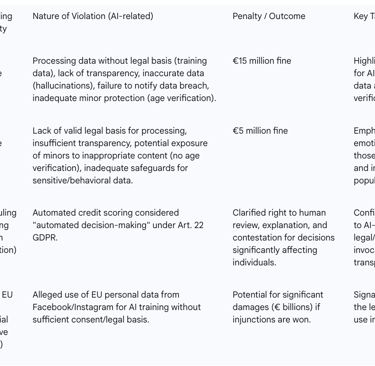

Analysis of Recent GDPR Enforcement Actions and Fines (2023-2025) related to AI Systems: GDPR fines have escalated significantly, reaching a cumulative total of approximately €5.88 billion by January 2025. The recurrence of fines against major companies like Meta and Google indicates ongoing compliance issues, underscoring the critical need for explicit user consent and transparent data handling practices.

Luka Inc. (Replika Chatbot) - Italy (Garante): In April 2025, the Italian data protection authority (Garante) imposed a €5 million fine on Luka, Inc., the US-based developer of the emotional AI companion chatbot Replika, for multiple GDPR violations. Key deficiencies identified included the lack of a valid legal basis for data processing, insufficient transparency in its privacy notices, the potential exposure of minors to inappropriate content due to the absence of effective age-verification mechanisms, and inadequate safeguards for sensitive or behavioral data collected through the chatbot's emotionally responsive interactions. This case highlights increasing regulatory scrutiny on AI models that interact directly with individuals and process sensitive personal data.

OpenAI - Italy (Garante): In December 2024, the Italian Garante fined OpenAI €15 million for several GDPR breaches. The violations included processing data without a proper legal basis for training its models, a lack of transparency regarding its data collection practices, the production of inaccurate data (AI hallucinations), and a failure to notify a data breach that occurred in March 2023. Additionally, OpenAI was found to have not implemented adequate systems to protect minors from inappropriate content generated by its AI, specifically lacking user age verification. This enforcement action underscores the critical need for a clear legal basis for AI training data, comprehensive transparency, ensuring data accuracy, and robust age verification for AI services.

CJEU Clarification on Automated Decision-Making (SCHUFA and Dun & Bradstreet Austria cases): In December 2023, the Court of Justice of the European Union (CJEU) issued a significant ruling in the SCHUFA case (C-634/21). The court held that an automated credit score qualifies as "automated decision-making" under Article 22 GDPR where a third party, such as a lender, draws strongly on that score to establish, implement, or terminate a contractual relationship with the individual. This brings such scoring within the scope of the Article 22 safeguards, including human review and the ability to contest the decision. In February 2025, in Dun & Bradstreet Austria (C-203/22), the CJEU further clarified that data subjects are entitled to a meaningful explanation of the procedure and principles actually applied in an automated decision. Organizations cannot, "as a rule," invoke trade secrets to deny data subjects access to that information, although the algorithm itself does not need to be disclosed; where trade secrets are at stake, the competing interests are to be weighed by the supervisory authority or court. Together, these judgments confirm the broad applicability of Article 22 to AI-driven decisions that have legal or similarly significant effects.
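One way an organization might operationalize the human-review and explanation safeguards discussed above is to route every decision with a significant effect to a reviewer and to generate a plain-language summary of the main factors. The sketch below is a hypothetical internal policy, not a statement of what Article 22 or the CJEU requires; all names and the example case are assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTOMATED = "automated"
    HUMAN_REVIEW = "human_review"


@dataclass
class ScoredCase:
    subject_id: str
    score: float               # model output, assumed to lie in [0, 1]
    significant_effect: bool   # legal or similarly significant effect?
    top_factors: list          # human-readable factors behind the score


def route(case: ScoredCase) -> Route:
    """Send any case with a significant effect to a human reviewer."""
    return Route.HUMAN_REVIEW if case.significant_effect else Route.AUTOMATED


def explanation(case: ScoredCase) -> str:
    """Summarize the main factors in plain language without exposing the model."""
    factors = ", ".join(case.top_factors) or "no factors recorded"
    return f"Score {case.score:.2f}. Main factors considered: {factors}."


# Hypothetical loan pre-screening case.
loan = ScoredCase("S-42", 0.31, True, ["payment history", "credit utilisation"])
print(route(loan).value)
print(explanation(loan))
```

Whether a given decision has a "significant effect" is a legal assessment; in this sketch it is simply a flag set upstream, which is where most of the real work would lie.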

NOYB vs. Meta AI: The privacy advocacy group NOYB has sent Meta a formal "cease and desist" letter concerning its Europe-wide AI training practices. The letter alleges that Meta's plan to use EU personal data from Instagram and Facebook users to train new AI systems from May 27, 2025 onwards lacks sufficient consent or a valid legal basis. If injunctions are successfully pursued under the new EU Collective Redress Directive, this case could potentially lead to billions in damages. This ongoing dispute signals a continued focus on the legal basis for large-scale data use in the training of generative AI models.

These recent enforcement actions highlight a growing trend of holding AI developers and deployers liable for fundamental GDPR breaches, with particular scrutiny on AI systems that impact vulnerable populations or process sensitive behavioral data. The cases involving Luka Inc. and OpenAI, which both concerned AI models interacting directly with users and processing highly personal or sensitive information, demonstrate that the absence of robust age verification and clear consent mechanisms for these types of AI systems is a recurring theme in regulatory actions. Similarly, the CJEU's SCHUFA ruling emphasizes the importance of safeguards for automated decisions that significantly affect individuals, such as credit scores. This indicates that organizations deploying "emotional AI," predictive analytics, or any AI systems that affect critical individual rights must anticipate heightened regulatory scrutiny. Proactive measures, including stringent age verification, robust consent frameworks, comprehensive Data Protection Impact Assessments, and strong safeguards for sensitive data, are no longer just best practices but immediate necessities to avoid substantial fines and reputational damage.

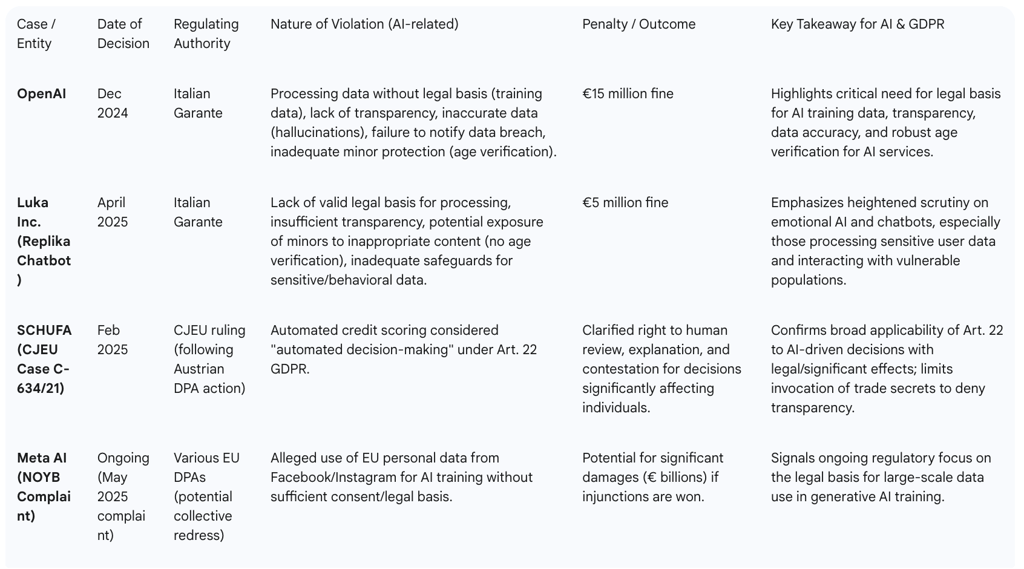

The following table serves as a powerful learning tool, demonstrating real-world consequences of non-compliance. It helps organizations identify high-risk areas in their AI operations that are currently under the regulatory microscope, allowing them to prioritize their compliance efforts and learn from the mistakes of others.

Table 4: Notable GDPR Enforcement Actions Involving AI (2023-2025)

VIII. Looking Ahead: The Future of AI Regulation Beyond 2025

While 2025 presents immediate compliance challenges, the regulatory landscape for AI and data protection is continuously evolving. This section explores anticipated trends and predictions for AI regulation and data protection beyond 2025, providing a forward-looking perspective for organizations to proactively adapt their strategies and maintain long-term compliance and trust in the rapidly advancing AI ecosystem.

Anticipated Trends:

Stricter Regulations and Compliance: Governments worldwide will continue to implement stricter data privacy laws and AI regulations, building upon frameworks like GDPR and the EU AI Act. Organizations will be compelled to enhance their compliance frameworks significantly to avoid hefty fines and reputational damage.

Increased Focus on Data Minimization: Companies are expected to increasingly adopt data minimization practices, collecting only the data strictly necessary for specific purposes. This approach not only reduces the risk of data breaches but also enhances consumer trust by demonstrating a commitment to privacy. This principle is anticipated to become an even more central cornerstone of future data protection efforts.

Advanced Privacy-Enhancing Technologies (PETs): Innovations in PETs, such as homomorphic encryption, differential privacy, and synthetic data, are projected to become more widespread. These technologies facilitate data analysis without compromising individual privacy. This trend is expected to grow, with more industries adopting PETs to strike a better balance between innovation and robust data protection.

Enhanced Consumer Control and Transparency: Consumers will increasingly demand greater control over their personal data. Organizations will respond by providing more transparent data practices, including clearer consent mechanisms and easier access to data deletion or modification options. The very concept of "control" over one's persona and personal information in the burgeoning world of Generative and Agentic AI will increasingly permeate and fuel regulatory debates.

Rise of Privacy-Focused Business Models: Organizations that prioritize and demonstrate strong privacy measures will be better equipped to adapt to evolving regulatory changes and cyber threats. They are likely to attract and retain customers more effectively through transparent privacy practices, transforming privacy from a compliance burden into a competitive advantage.

Integrated Cybersecurity and Privacy Programs: A cohesive approach that reduces organizational silos and improves threat visibility across systems will become standard. This integration will enhance response strategies, ensuring quicker containment and recovery from security incidents. AI and Machine Learning (ML) are poised to become essential components of advanced data privacy strategies, though their deployment will necessitate stringent privacy safeguards to ensure ethical and transparent data handling.

Geopolitical Fragmentation and International Data Transfers: Issues related to international data transfers will remain a high priority on the global privacy agenda, increasingly influenced by the geopolitics of AI development. This could lead to continued fragmentation of the cross-border data transfer landscape into distinct clusters of like-minded jurisdictions.

Increased Role of Data Protection Authorities (DPAs): Data Protection Authorities are expected to assume an even more prominent role in enforcing laws at the intersection of GDPR and new EU acts regulating the digital space, including the EU AI Act.

Focus on Generative AI Risks Beyond Personal Data: Regulators will dedicate significant attention to controlling the use of synthetic data that fuels harmful content. There will be a sharp focus on the rise of advanced emotional chatbots and the proliferation of deepfakes, potentially leading to the development of more robust and specific guidelines targeting generative AI.

Potential Reopening of GDPR: Some experts anticipate that the reopening of the GDPR for revisions might appear more convincingly on the regulatory agenda once procedural reforms are completed. This suggests the potential for significant long-term changes to this foundational privacy law.
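Of the technologies named in the trends above, differential privacy is perhaps the simplest to illustrate. The following is a minimal sketch of the Laplace mechanism applied to a counting query, using only the Python standard library. The function names and the sample data are hypothetical, and a production system would normally rely on a vetted differential privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(values, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the items matching `predicate`.

    A counting query changes by at most 1 when one person is added or
    removed (sensitivity 1), so the Laplace noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: ages of survey respondents.
ages = [23, 35, 41, 52, 29, 67, 38]
noisy = dp_count(ages, lambda a: a >= 40, epsilon=0.5)
print(f"Noisy count of respondents aged 40+: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy; a larger one gives more accurate answers. Choosing the value is a policy decision as much as a technical one.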

The relentless pace of technological advancement in AI, coupled with the evolving and fragmenting regulatory landscape, necessitates that organizations move beyond mere reactive compliance to a strategy of proactive adaptation for long-term viability. Given the continuous emergence of new AI capabilities and the dynamic nature of regulatory responses, a static compliance posture will quickly become outdated. Organizations must embed "privacy-by-design" principles and "agile governance models" not just as current best practices but as fundamental operational philosophies. This allows them to anticipate and respond effectively to future legislative changes and emerging privacy risks. This approach implies a continuous investment in legal and technical expertise, ongoing monitoring of regulatory developments, and a culture that embraces ethical AI development as a core business value. Businesses that fail to proactively adapt risk not only fines but also significant reputational damage and a loss of customer trust in an increasingly privacy-aware market.

Beyond mere legal compliance, the future regulatory and market landscape will increasingly demand that ethical AI considerations, particularly those related to privacy, bias, and individual control, become integral to an organization's core business strategy and competitive advantage. The market is increasingly rewarding companies that demonstrate a genuine commitment to ethical data handling and responsible AI. Compliance is becoming a baseline, but competitive advantage will stem from exceeding these minimums, building deep trust with users by respecting their autonomy and providing meaningful control over their data. This shifts privacy from a legal burden to a strategic differentiator. Organizations that embed ethical AI principles—including robust privacy, fairness, and transparency—into their product development, marketing, and overall business operations will not only mitigate regulatory risks but also cultivate stronger customer loyalty, attract top talent, and enhance their brand reputation in the long run. This signifies a fundamental shift where ethical considerations are no longer an afterthought but a central pillar of sustainable business growth in the AI era.

IX. Conclusion: Proactive Compliance as a Strategic Imperative

Navigating the complex and rapidly evolving landscape of AI and GDPR compliance in 2025 and beyond is not merely a legal obligation but a strategic imperative for organizations. The intricate interplay between the established GDPR principles and the staggered implementation of the EU AI Act creates a multifaceted regulatory environment that demands a sophisticated and integrated approach.

This report has detailed how GDPR's foundational principles (lawfulness, fairness, and transparency; purpose limitation; data minimization; accuracy; storage limitation; integrity and confidentiality; and accountability) directly govern the entire AI lifecycle. It has highlighted the inherent challenges, such as the "black box" dilemma, the tension between data minimization and AI's data hunger, the complexities of algorithmic bias, and the nuances of automated decision-making. These challenges are further compounded by the need for robust data provenance and the complexities of international data flows.

The analysis underscores that compliance is a continuous process, not a one-time event, particularly given the dynamic nature of AI models. It also reveals how adherence to one principle, such as data minimization, can positively impact compliance across multiple other areas, reinforcing the interconnectedness of these requirements. Furthermore, the report emphasizes that privacy-enhancing technologies are not just compliance tools but innovation enablers, allowing organizations to responsibly leverage sensitive data. The growing trend of regulatory enforcement, particularly targeting AI systems impacting vulnerable populations or processing sensitive data, highlights the critical importance of proactive documentation and robust governance frameworks as a defense mechanism.

To thrive in this landscape, organizations must adopt a holistic, adaptive, and multidisciplinary approach. This involves embedding data protection by design and by default into every stage of AI development, conducting thorough Data Protection Impact Assessments and AI Risk Assessments, and leveraging privacy-enhancing technologies. It also necessitates establishing robust data governance frameworks, ensuring human oversight, upholding data subject rights, investing in comprehensive employee training and AI literacy, and meticulously vetting third-party AI vendors.

By embracing proactive compliance and ethical AI development, organizations can achieve more than just risk mitigation. They can build enduring trust with data subjects and stakeholders, foster responsible innovation, and secure their position as leaders in the evolving digital economy. The future of AI demands not just technological prowess, but also unwavering commitment to ethical principles and regulatory adherence.

FAQ Section

1. What are the key requirements for AI systems under GDPR in 2025?

AI systems must process data lawfully, provide transparency in decision-making, enable individuals to exercise their rights, implement data minimization, and maintain accountability through proper documentation. Organizations must also conduct regular audits and implement privacy by design principles.

2. How can organizations balance AI innovation with GDPR compliance?

Organizations can implement privacy-preserving technologies like differential privacy and federated learning, maintain robust governance frameworks, provide transparent explanations for AI decisions, and invest in compliance infrastructure. These approaches enable innovation while protecting individual privacy rights.

3. What are the main challenges for cross-border AI data transfers?

Key challenges include varying jurisdictional requirements, data residency laws, adequacy decisions, and maintaining consistent compliance standards across regions. Organizations must implement appropriate safeguards like SCCs, BCRs, and technical measures to ensure lawful transfers.

4. How do privacy-enhancing technologies support GDPR compliance?

Privacy-enhancing technologies such as homomorphic encryption, differential privacy, federated learning, and synthetic data generation enable organizations to extract insights while minimizing personal data exposure. These technologies help achieve data minimization and purpose limitation requirements.

5. What documentation is required for AI compliance under GDPR?

Organizations must maintain records of processing activities, Data Protection Impact Assessments, consent management, model versions, training data sources, algorithm logic, and audit trails. Documentation should cover the entire AI lifecycle from development to deployment and ongoing operation.

6. How should organizations handle the 'right to explanation' for AI decisions?

Organizations must provide meaningful information about AI decision-making logic, data factors considered, and consequences of decisions. This can be achieved through explainable AI techniques, user-friendly interfaces, and tiered transparency approaches based on the complexity of requests.

7. What role do AI ethics committees play in GDPR compliance?

AI ethics committees provide governance oversight, evaluate algorithmic fairness, conduct bias audits, and ensure compliance with privacy principles. They bridge technical development with legal requirements and help organizations maintain ethical AI practices beyond minimum compliance requirements.

8. How frequently should AI systems be audited for GDPR compliance?

GDPR does not prescribe a fixed audit interval, but as a working rule formal audits should be conducted at least annually, and more often (for example, every six months) for high-risk systems, complex use cases, or after significant model updates. Between formal audits, continuous monitoring of bias, performance metrics, and privacy compliance should be in place.

9. What are the implications of using third-party AI services for GDPR compliance?

Organizations remain accountable for GDPR compliance when using third-party AI services. They must ensure appropriate contracts, conduct due diligence, verify compliance measures, and maintain control over data processing activities. Joint controller or processor relationships should be clearly defined and documented.

10. How can organizations prepare for future AI regulatory developments?

Organizations should implement flexible compliance frameworks, invest in privacy-preserving technologies, maintain comprehensive documentation, engage with regulatory developments, and build adaptable technical architectures. Regular training and awareness programs help staff stay current with evolving requirements.

Additional Resources

1. European Data Protection Board (EDPB) Guidelines

The EDPB has published comprehensive guidelines on AI and automated decision-making under GDPR. These authoritative documents provide detailed interpretations of regulatory requirements specifically for AI applications.

2. "Privacy-Preserving Machine Learning" (Manning Publications)

This technical manual offers in-depth coverage of privacy-enhancing technologies applicable to AI systems, including practical implementation guides for differential privacy and federated learning.

3. UK Information Commissioner's Office - AI Guidance

The ICO's AI and data protection guidance provides practical frameworks for conducting AI audits, implementing explainable AI, and managing algorithmic accountability within GDPR requirements.

4. IEEE Standards Association - Ethical Design of Autonomous and Intelligent Systems

This comprehensive resource offers technical standards and best practices for building ethical AI systems that align with privacy regulations and human values.

5. Datasumi's AI Solutions Blog

Regular updates on practical implementation strategies for AI compliance, featuring case studies and technical insights from industry practitioners.