This report critically examines the intricate intersection of AI-powered advertising and the General Data Protection Regulation (GDPR). Artificial intelligence is profoundly transforming the advertising landscape, offering marketers unprecedented capabilities to predict customer behavior, analyze complex patterns, and optimize campaigns with real-time precision. This technological advancement enables highly tailored content delivery and programmatic efficiency, promising enhanced engagement and return on investment. However, this powerful innovation is inextricably linked with the imperative of adhering to stringent data protection regulations.

AI in advertising inherently pushes towards greater efficiency, precision, and personalization, relying on vast datasets to predict behavior and optimize campaigns in real time. This creates a fundamental tension with the GDPR, which is rooted in the protection of individual fundamental rights and demands control, transparency, and accountability over personal data processing. This dynamic interaction is not merely an obstacle to overcome but a necessary force that shapes the ethical and legal boundaries of technological advancement. Organizations cannot simply adopt AI without a fundamental re-evaluation and re-architecture of their data governance frameworks to ensure legal alignment from the outset.

Non-compliance with GDPR carries significant legal, financial, and reputational risks. Penalties can be substantial, reaching up to €20 million or 4% of total global annual turnover, whichever is higher, as exemplified by significant fines imposed on major technology companies such as Amazon and Meta. Beyond monetary penalties, the risk of severe and lasting reputational damage is a critical consideration. Conversely, actively demonstrating robust data protection practices and a steadfast commitment to privacy can foster consumer trust. In a marketplace where data privacy concerns are escalating, trust serves as a powerful differentiator, potentially leading to enhanced user engagement, stronger brand loyalty, and ultimately, higher return on investment. Therefore, compliance transcends its traditional role as a mere cost center, emerging as a strategic enabler for sustainable business growth and market leadership.

In conclusion, the successful integration of AI in advertising necessitates a proactive, principles-based approach to GDPR compliance. This includes embedding privacy by design, conducting rigorous impact assessments, ensuring robust data governance, and upholding data subject rights as foundational elements for responsible and sustainable innovation.

Introduction: The Convergence of AI, Advertising, and Data Privacy

The Transformative Role of AI in Modern Advertising (Targeting, Personalization, Programmatic)

Artificial intelligence is fundamentally reshaping the advertising landscape, empowering marketers with unprecedented capabilities. It enables them to predict customer behavior, analyze complex patterns, and optimize ad campaigns with remarkable speed and precision in real-time. This transformative power is widely recognized across the industry, with 59% of global marketers identifying AI for campaign personalization and optimization as the most impactful trend by 2025, a prioritization evident across all major regions, including Latin America (63%), Asia-Pacific (62%), North America (60%), and Europe (50%).

At its core, AI-driven ad targeting employs sophisticated machine learning algorithms to analyze extensive consumer datasets, identify intricate behavioral patterns, and accurately predict which advertisements will resonate most effectively with specific individuals. Advanced AI technologies, such as Natural Language Processing (NLP) and computer vision, are enabling brands to gain a significantly deeper understanding of users' preferences and behaviors, thereby facilitating a higher degree of content personalization. AI achieves highly effective behavioral targeting by analyzing diverse data points, including clicks, time spent on a page, and purchase history, and it leverages predictive analytics to forecast which products or services a consumer is likely to engage with next. The capability for dynamic content personalization further allows businesses to adapt and tailor advertisements in real-time, dramatically increasing their relevance to individual users.

Programmatic advertising, which refers to the automated buying and selling of online ad space, is increasingly powered by AI. AI-powered programmatic ads optimize ad delivery based on real-time data signals such as location, time of day, device, and user behavior, leading to enhanced efficiency at scale and improved return on investment (ROI). AI enables marketers to move beyond basic audience segmentation, crafting "hyper-personalized" ads that reflect an individual’s unique preferences, behaviors, and even emotional triggers, reportedly delivering engagement rates up to five times higher than standard ads.

Furthermore, AI significantly enhances marketing measurement by swiftly processing vast, disparate datasets that would be unmanageable manually, thereby ensuring the accuracy and reliability of marketing data for smarter, data-driven decisions. This capability shifts marketing from a reactive discipline, often characterized by retrospective "expert hindsight analysis" of past campaign performance, to a proactive, forward-looking strategy that anticipates trends and delivers actionable intelligence in the moment.

The Imperative of GDPR Compliance in AI-Driven Data Processing

Despite the undeniable advantages and transformative capabilities of AI, the integration of these technologies in advertising introduces critical ethical considerations, particularly concerning data privacy and transparency, which remain paramount. A growing concern among consumers revolves around how their personal data is collected, processed, and utilized by AI systems, fueling ethical debates.

The GDPR explicitly aims to protect individuals' personal data and privacy. Given that AI systems inherently rely upon and process vast datasets, they must rigorously align with GDPR's stringent requirements. Non-compliance with GDPR can result in severe repercussions, including substantial financial penalties, which can reach up to 4% of total global annual turnover or €20 million, whichever amount is higher, in addition to significant and lasting reputational damage. It is crucial to understand that there is "no AI exemption" to data protection law; even the "incidental" processing of personal data falls under the scope of GDPR and counts as data processing. This underscores that AI development and deployment are not outside the existing regulatory framework but rather deeply embedded within it.
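The "whichever amount is higher" rule can be made concrete with a small worked calculation. The sketch below is illustrative only: the function name and the turnover figures are assumptions, and the result is the statutory ceiling for the higher fine tier, not a prediction of any actual penalty.

```python
def max_fine_eur(annual_turnover_eur: float) -> float:
    """Ceiling of the higher fine tier: the greater of EUR 20 million
    or 4% of total global annual turnover."""
    four_percent = annual_turnover_eur * 4 / 100
    return max(20_000_000.0, four_percent)


# Hypothetical turnover figures, chosen only to show both branches of the rule.
smaller_firm = max_fine_eur(100_000_000)      # 4% is 4 million, so the 20 million figure applies
larger_firm = max_fine_eur(2_000_000_000)     # 4% is 80 million, which exceeds 20 million
print(smaller_firm, larger_firm)
```

For any organization with turnover above €500 million, the 4% figure is the operative ceiling, which is why exposure scales with company size.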

The effectiveness of AI in advertising is directly proportional to its capacity to analyze and leverage "vast datasets" and "more granular data points". This inherent appetite for data creates a direct and often challenging tension with core GDPR principles such as data minimization and purpose limitation. The more extensive the data collection and processing by AI, the higher the potential for privacy risks, and consequently, the greater the burden of compliance. This indicates that the very strength that makes AI so powerful in advertising is simultaneously its primary vulnerability from a GDPR compliance perspective, necessitating innovative approaches to data handling.

Traditional marketing often involved retrospective analysis, reviewing past campaign performance to identify shortcomings. AI fundamentally shifts this paradigm towards predictive analytics and real-time decision support, enabling marketers to anticipate trends and optimize in the moment. This proactive operational shift in marketing strategy necessitates a parallel and equally proactive integration of privacy considerations. Relying on reactive compliance—addressing privacy issues only after they arise—is insufficient when AI systems are continuously predicting and acting. This makes "Privacy by Design" not merely a legal requirement but an operational imperative, crucial for AI-driven marketing to function effectively, ethically, and in a legally compliant manner from its inception.

Foundational GDPR Principles in the AI Advertising Ecosystem

Overview of the 7 GDPR Principles

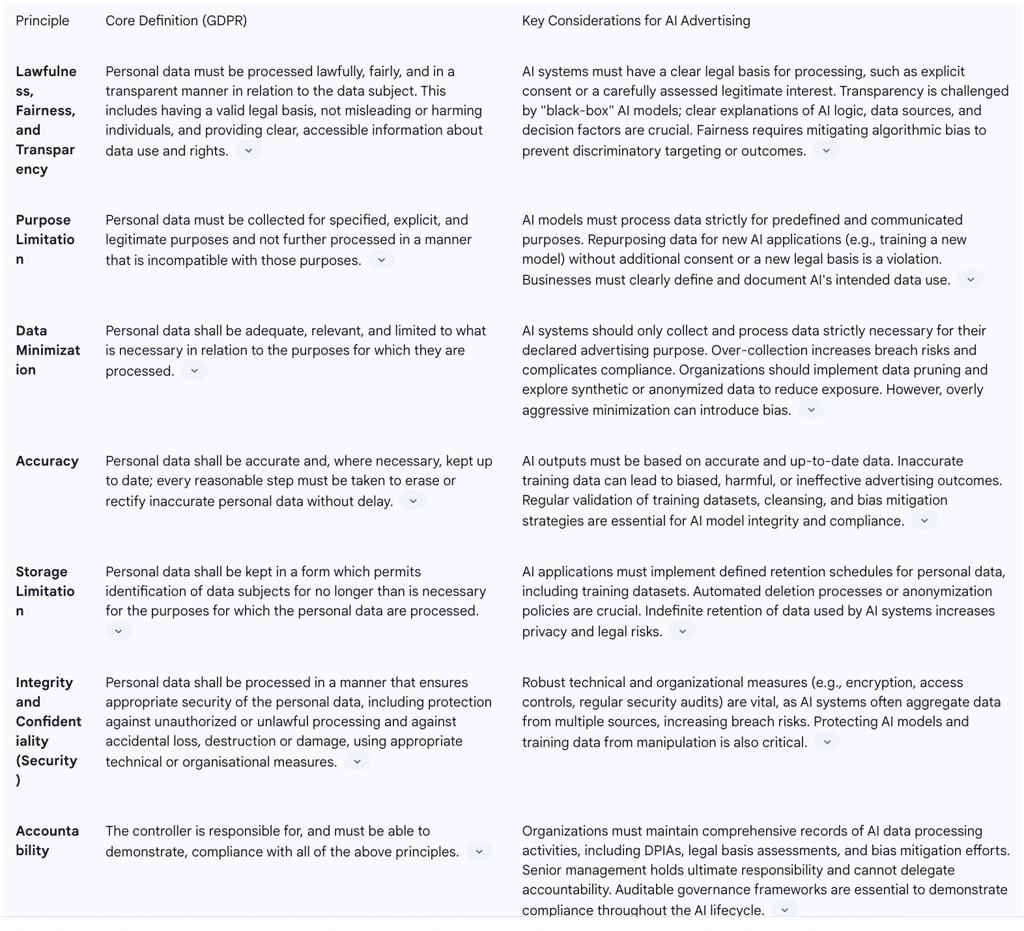

The GDPR is built upon seven foundational principles that govern the lawful processing of personal data: lawfulness, fairness, and transparency; purpose limitation; data minimization; accuracy; storage limitation; integrity and confidentiality; and accountability. These principles are not merely guidelines but form the bedrock of GDPR compliance, influencing all other rules and obligations within the legislation.

Lawfulness, Fairness, and Transparency: Navigating Legal Bases (Consent, Legitimate Interests, Contract)

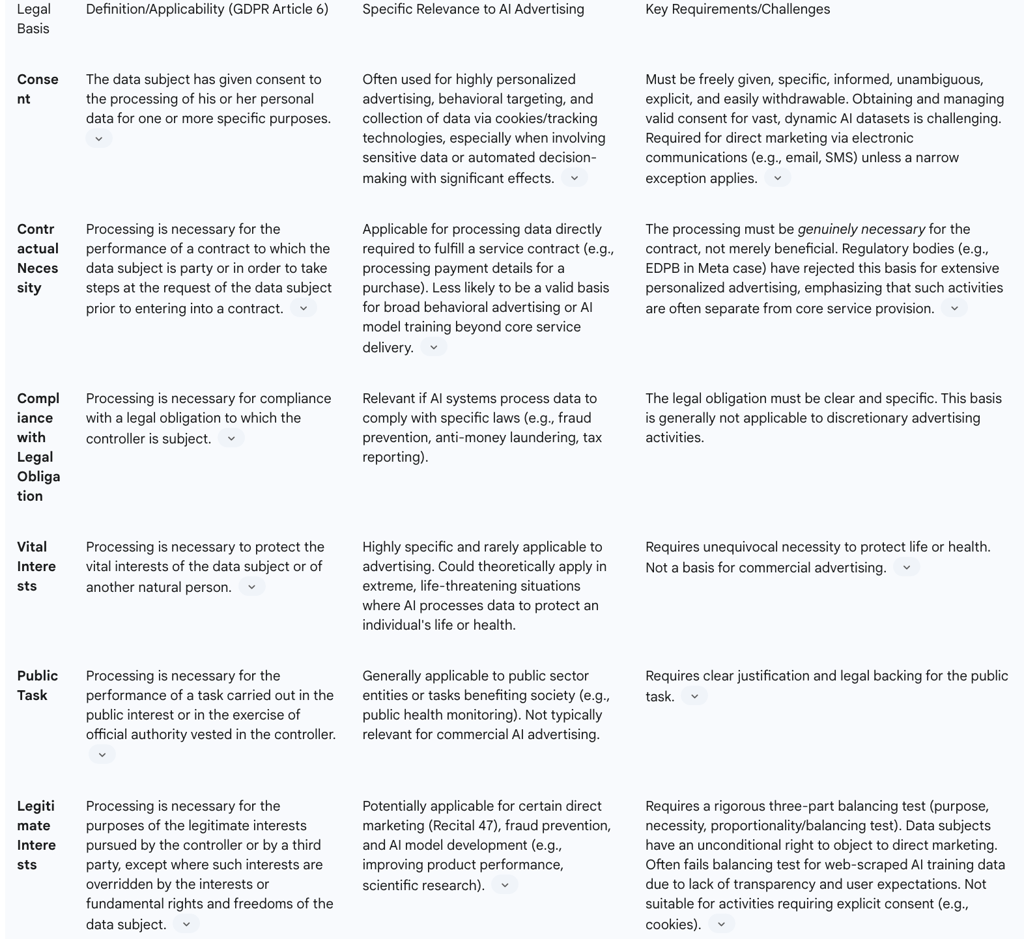

The principle of Lawfulness requires that all processing of personal data must have a valid legal basis. GDPR Article 6 provides six specific legal bases, of which organizations (the controller) must validly use and document at least one to justify data collection and use: informed consent from the data subject, performance of a contract with the data subject, compliance with a legal obligation, protection of vital interests, performance of a task carried out in the public interest or in the exercise of official authority, or legitimate interests.

Consent is a primary legal basis, requiring individuals to voluntarily agree to data processing activities. It must be freely given, specific, informed, and unambiguous, and it must be as easy to withdraw as to give, placing individuals in control of their data. Explicit consent is additionally required in certain situations, such as the processing of special categories of personal data. Obtaining valid consent can be challenging because the request must be communicated clearly, simply, and transparently.

Contractual Necessity applies when the processing of personal data is genuinely necessary for the fulfillment of a contract to which the data subject is a party, or to take steps at the data subject's request prior to entering into a contract. A notable case involved Meta, which attempted to rely on this basis for behavioral advertising. Following a binding decision of the European Data Protection Board (EDPB), the Irish Data Protection Commission (DPC) found the basis insufficient for such broad processing.

Legitimate Interests provide a more flexible legal basis, allowing data processing that is necessary for an organization's legitimate interests, provided these interests are not overridden by the fundamental rights and freedoms of the data subjects. Recital 47 of the GDPR clarifies that processing personal data for direct marketing purposes may be considered a legitimate interest. The French Data Protection Authority (CNIL) acknowledges legitimate interest as a probable legal basis for AI development, particularly given the challenges in obtaining consent. This reliance is permissible when the interest pursued is legitimate (e.g., scientific research, improving a product, fraud prevention), the processing does not disproportionately affect data subjects' rights, and relevant mitigating measures (e.g., anonymization, synthetic data, opt-out mechanisms) are implemented.

However, for direct marketing via electronic communications (e.g., email, SMS, MMS), the ePrivacy Directive generally mandates the prior consent of the recipient. A narrow exception exists if a company obtained the customer's email address during a sale of goods or services: it may use that address to market its own similar products or services, provided the customer is clearly informed of the right to opt out and can easily exercise it. Crucially, when direct marketing involves the use of cookies or other tracking technologies, obtaining the user's prior consent is a legal requirement.

Furthermore, data subjects possess an unconditional right to object to the processing of their personal data for direct marketing purposes, regardless of the legal basis relied upon by the data controller. Once an objection is raised, the controller must cease processing data for direct marketing without further assessment.

The ICO expects generative AI developers relying on legitimate interests for training data obtained via web scraping to demonstrate why alternative, less intrusive data collection methods (e.g., direct consent) are not suitable, as web scraping often fails the balancing test due to lack of transparency.
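The rules for electronic direct marketing described above can be sketched as a simple eligibility check. This is a minimal illustration, not legal logic to rely on: the `Contact` fields and the function name are hypothetical, and a real system would also need to record when and how each flag was obtained.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    # All fields are hypothetical flags a CRM might hold.
    consented_to_email: bool = False    # prior opt-in consent on record
    is_existing_customer: bool = False  # address obtained during a sale
    offered_opt_out: bool = False       # opt-out offered at collection and in each message
    has_objected: bool = False          # objection to direct marketing


def may_send_marketing_email(contact: Contact, similar_product: bool) -> bool:
    # An objection always wins, whatever legal basis was relied on.
    if contact.has_objected:
        return False
    # Default rule: prior consent of the recipient.
    if contact.consented_to_email:
        return True
    # Narrow exception: existing customer, similar products, opt-out available.
    return contact.is_existing_customer and similar_product and contact.offered_opt_out


customer = Contact(is_existing_customer=True, offered_opt_out=True)
print(may_send_marketing_email(customer, similar_product=True))
print(may_send_marketing_email(customer, similar_product=False))
```

Checking the objection first means a single flag suppresses marketing across every other path, which mirrors the unconditional nature of the right to object.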

The principle of Fairness dictates that data must be processed in a way that is not misleading, unduly detrimental, unexpected, or harmful to the individual. It requires organizations to consider what people would reasonably expect regarding data use and to avoid any unjustified adverse effects on them. This also ties into ethical considerations around bias in AI, ensuring data is not used to discriminate.

Transparency refers to the obligation to provide clear, accessible, and easily understandable information to data subjects about how their data will be used, secured, and about their rights and how to exercise them. For instance, an e-commerce site collecting an email for marketing must detail email frequency and content. A significant challenge for AI models, particularly "black-box" systems, is achieving this level of transparency regarding their decision-making processes. The ICO explicitly stresses the importance of transparency and has indicated it will take action against organizations that fail to meet these standards. The EDPB also emphasizes that clear information must be provided to data subjects, especially in contexts involving automated decision-making.

Purpose Limitation and Data Minimization: Balancing AI's Data Needs with Compliance

The Purpose Limitation principle mandates that personal data must be collected for specified, explicit, and legitimate purposes and not subsequently processed in a manner incompatible with those initial purposes. Businesses are required to clearly define and document the intended use of data at the point of collection. Repurposing data for an incompatible use, or collecting new types of data for an existing use, without a valid legal basis such as fresh consent breaches this core GDPR principle. In the context of AI, this means that models must process data strictly for predefined and legitimate purposes. For example, a recommendation system that uses personal data to generate unrelated marketing insights without proper consent would breach GDPR. The ICO expects developers to ensure that AI models are used solely for the purposes originally stated when data was collected, necessitating a re-evaluation of legal grounds or the acquisition of fresh consent if new purposes arise.
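One way to make purpose limitation operational is to check every intended use of a data field against the purposes declared at collection. The following is a minimal sketch under stated assumptions: the register contents, field names, and `PurposeMismatch` exception are all hypothetical.

```python
# Hypothetical register: purposes declared to the data subject, per data field.
DECLARED_PURPOSES = {
    "email": {"order_confirmation"},
    "purchase_history": {"product_recommendations"},
}


class PurposeMismatch(Exception):
    """Raised when a field is about to be used for an undeclared purpose."""


def check_purpose(field: str, purpose: str) -> None:
    # Unknown fields have no declared purposes, so any use is rejected.
    if purpose not in DECLARED_PURPOSES.get(field, set()):
        raise PurposeMismatch(f"{field!r} was not collected for {purpose!r}")


check_purpose("purchase_history", "product_recommendations")  # declared, passes silently

try:
    check_purpose("purchase_history", "lookalike_audience_modeling")
except PurposeMismatch as err:
    print(f"Blocked: {err}")
```

A blocked call is the point at which the legal basis would need to be re-evaluated or fresh consent obtained before the new use proceeds.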

Data Minimization dictates that businesses should only collect and process the data that is strictly necessary, adequate, and relevant for their declared purpose. Over-collection of data not only increases the inherent risk in the event of a data breach or other compliance violation but also complicates compliance efforts and can raise customer concerns about the actual necessity of the data. For AI systems, this translates to avoiding the collection of excessive or irrelevant data to reduce privacy risks. Organizations should implement data pruning strategies and periodically review datasets to minimize exposure. To adhere to data minimization while still enabling AI development, organizations can sometimes utilize synthetic or anonymized data instead of real personal data. However, German data protection guidelines highlight a critical tension: "unbalanced data minimization can endanger the integrity of AI model modeling, i.e., lead to bias". This suggests that overly aggressive data minimization, without careful consideration, could inadvertently compromise the accuracy and fairness of AI models, which are themselves GDPR principles.
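In a data pipeline, minimization is often enforced with a per-purpose allow-list that strips every field not needed for the declared purpose before the record reaches a model. The sketch below assumes a hypothetical purpose name and field set; which fields are actually "necessary" is a judgment the organization must document.

```python
# Hypothetical allow-list: the only fields each purpose is permitted to see.
REQUIRED_FIELDS = {
    "ad_frequency_capping": {"pseudonymous_id", "campaign_id", "impressions"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return a copy of the record containing only fields allowed for the purpose."""
    allowed = REQUIRED_FIELDS[purpose]  # an undeclared purpose fails with KeyError
    return {key: value for key, value in record.items() if key in allowed}


raw_event = {
    "pseudonymous_id": "u-381",
    "campaign_id": "c-17",
    "impressions": 4,
    "precise_location": "52.52,13.40",  # not needed for frequency capping
    "email": "person@example.com",      # not needed for frequency capping
}
print(minimize(raw_event, "ad_frequency_capping"))
```

An allow-list is used rather than a deny-list so that newly added fields are excluded by default until someone justifies their inclusion.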

Accuracy and Storage Limitation: Ensuring Data Quality and Retention Policies

The Accuracy principle requires that personal data be accurate and, where necessary, kept up to date. Companies must take every reasonable step to erase or rectify inaccurate personal data without delay, particularly when requested by the data subject. Inaccurate information can lead to poor decision-making and, in some cases, harm to the individual whose data is being processed. For AI systems, it is paramount that their outputs are based on accurate data. Poor data quality can result in harmful or biased outcomes, which not only violate GDPR but also erode trust. Regular validation of training data and the implementation of bias mitigation strategies are critical for ensuring the accuracy and fairness of AI systems. The ICO strongly emphasizes the importance of regularly auditing training datasets and rigorously testing AI outputs for both accuracy and fairness.

The Storage Limitation principle dictates that personal data should be kept in a form that permits identification of data subjects for no longer than is necessary for the purposes for which the personal data are processed. To ensure compliance, businesses should maintain clearly defined retention schedules and have robust policies in place for secure deletion or anonymization of data. AI applications must adhere to this principle by not retaining personal data longer than necessary. Implementing automated deletion processes and employing data retention policies that are strictly aligned with GDPR requirements are crucial for compliance and user privacy. Specifically, AI training datasets that contain personal data must have clearly defined retention periods. Indefinite retention of data significantly increases privacy and legal risks. German guidelines further specify that when deletion under Article 17 GDPR becomes necessary, "technical complete deletion of relevant data is necessary," which may encompass input and output data used as training data, and could even require retraining existing AI models without the deleted information.
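An automated deletion process of the kind described above typically compares each record's age against a retention schedule. This is a minimal sketch: the categories and retention periods are invented for illustration, and it only identifies records due for deletion. It does not address deletion from backups or retraining of models built on the purged data.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention schedule: maximum age per record category.
RETENTION_SCHEDULE = {
    "ad_click_event": timedelta(days=90),
    "training_sample": timedelta(days=365),
}


def split_by_retention(records: list, now: datetime) -> tuple:
    """Partition records into (keep, purge) according to the schedule."""
    keep, purge = [], []
    for record in records:
        # A category missing from the schedule raises KeyError on purpose,
        # so no data is stored without a defined retention period.
        limit = RETENTION_SCHEDULE[record["category"]]
        age = now - record["collected_at"]
        (purge if age > limit else keep).append(record)
    return keep, purge


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "category": "ad_click_event", "collected_at": now - timedelta(days=120)},
    {"id": 2, "category": "ad_click_event", "collected_at": now - timedelta(days=10)},
    {"id": 3, "category": "training_sample", "collected_at": now - timedelta(days=400)},
]
keep, purge = split_by_retention(records, now)
print("purge ids:", [r["id"] for r in purge])
```

Running such a job on a schedule, and logging what it purged, also produces evidence that the retention policy is actually enforced.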

Integrity, Confidentiality, and Accountability: Securing Data and Demonstrating Compliance

The principle of Integrity and Confidentiality (Security) requires that personal data be processed in a manner that ensures appropriate security and confidentiality, including protection against unauthorized or unlawful processing and against accidental loss, destruction, or damage, using appropriate technical or organizational measures. Implementing robust technical and organizational measures (TOMs), such as encryption, access control, and regular security audits, is particularly crucial as AI systems often aggregate and process data across multiple sources, inherently increasing the risk of data breaches. Regular penetration tests and comprehensive employee training further strengthen data protection and security posture.

The Accountability principle places the responsibility on the data controller to not only comply with all the aforementioned GDPR principles but also to be able to demonstrate that compliance. This involves maintaining comprehensive records of data processing activities and ensuring that robust data protection measures are in place and actively managed. Regular audits, comprehensive documentation of processes and decisions, and clear reporting mechanisms are essential components for enhancing and demonstrating accountability. The EDPB explicitly emphasizes that controllers must demonstrate GDPR compliance, and this includes clearly defining roles and responsibilities before any data processing begins. The ICO further clarifies that senior management bears ultimate responsibility and "cannot simply delegate issues to data scientists or engineers," underscoring the top-down nature of accountability.

Key Considerations for Foundational GDPR Principles

While Recital 47 of GDPR suggests that direct marketing may be considered a legitimate interest, the practical reality for AI-powered advertising is significantly more intricate and fraught with risk. The ePrivacy Directive generally mandates prior consent for electronic marketing communications, and the data subject's unconditional right to object to processing for direct marketing purposes fundamentally constrains the perceived flexibility of legitimate interest. Furthermore, recent regulatory guidance from authorities like the CNIL and ICO increasingly scrutinizes the reliance on legitimate interest, especially when AI training data is obtained through methods like web scraping, often concluding that it fails the balancing test due to a lack of transparency and misalignment with user expectations. The Meta case, where the DPC's initial acceptance of "contract" as a legal basis was ultimately overruled by the EDPB, serves as a stark reminder of this heightened regulatory scrutiny on legal bases. This creates a high-risk scenario: organizations that assume legitimate interest applies may face significant enforcement action if they have not meticulously assessed user expectations and carried out and documented a rigorous balancing test.

AI models achieve their sophisticated predictive power and hyper-personalization capabilities by thriving on vast datasets, often requiring extensive and diverse data inputs. This inherent appetite for data stands in direct tension with GDPR's principle of data minimization, which dictates that only data strictly necessary for a declared purpose should be collected and processed. Organizations are thus caught in a dilemma: either collect less data, potentially compromising the effectiveness and accuracy of their AI models, or collect more data, thereby significantly increasing their GDPR compliance risk. The German DPA's observation that "unbalanced data minimization can endanger the integrity of AI model modeling, i.e., lead to bias" reveals an even deeper, paradoxical conflict: an overly zealous application of data minimization might inadvertently lead to less accurate or more biased AI systems, which itself would violate the accuracy and fairness principles of GDPR. This profound implication suggests that organizations must invest heavily in advanced privacy-enhancing technologies, such as synthetic data generation, differential privacy, or sophisticated anonymization techniques, to reconcile these conflicting demands, rather than simply reducing data collection.
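To give a sense of what differential privacy involves, the sketch below applies the Laplace mechanism to a counting query, such as the number of users in an audience segment. It is an illustration under stated assumptions rather than a production implementation: the function names and the choice of epsilon are hypothetical, and naive floating-point Laplace sampling is known to have weaknesses that hardened libraries address.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon.
    """
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(7)  # seeded only so the illustration is repeatable
segment_size = 1000     # hypothetical number of users in an audience segment
print(private_count(segment_size, epsilon=0.5, rng=rng))
```

A smaller epsilon adds more noise and gives stronger privacy, so the parameter expresses the same accuracy-versus-protection trade-off discussed above in a form that can be tuned and documented.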

The accountability principle extends beyond mere compliance; it demands the ability to demonstrate compliance. This is particularly challenging in the context of AI, where complex algorithms, intricate data flows, and dynamic model updates can obscure the precise details of data processing. The ICO's explicit statement that senior management cannot simply delegate accountability to data scientists or engineers, coupled with the EDPB's emphasis on defining roles and responsibilities before processing begins, signifies that a superficial "check-the-box" approach to compliance is wholly inadequate. Organizations must establish robust, auditable documentation and comprehensive governance frameworks that can withstand rigorous regulatory scrutiny, especially given the "black-box" nature often associated with certain AI systems. This implies a fundamental shift from reactive problem-solving to proactive, demonstrable, and transparent governance throughout the AI lifecycle.