GDPR Implications for Chat-Based Healthcare and Telemedicine Services

Chat-based services have emerged as a convenient and efficient means of communication between healthcare providers and patients.

The provision of healthcare through digital channels, particularly via telemedicine and chat-based services, represents a significant advancement in patient access and convenience. However, this innovation operates within a stringent legal framework governed by the General Data Protection Regulation (GDPR), which establishes a high standard for the protection of personal data. For digital health providers, understanding this framework is not merely a matter of legal compliance but a foundational requirement for building patient trust and ensuring the ethical stewardship of highly sensitive information. The regulation’s architecture is built upon a set of core principles, a special, elevated status for health data, and a dual-layered system for establishing the legality of any data processing activity.

1.1. Core Principles of the GDPR (Article 5): A Practical Application

The GDPR is anchored by seven core principles outlined in Article 5, which are not isolated rules but an interconnected framework that dictates the entire lifecycle of data processing. For a telemedicine service, these principles translate into specific operational mandates.

Lawfulness, Fairness, and Transparency: All processing of personal data must have a legitimate legal basis, be conducted fairly, and be transparent to the individual. In the context of a chat-based healthcare service, this means there can be no hidden data collection or obscure purposes. Privacy notices must be presented to the user in a clear, concise, and easily accessible format before they initiate a consultation. This information should plainly state what data is being collected, why it is being collected, and who it will be shared with.

Purpose Limitation: Personal data must be collected for specified, explicit, and legitimate purposes and not be further processed in a manner that is incompatible with those purposes. For example, if a patient provides information and images during a chat consultation for the purpose of diagnosing a skin condition, that data cannot be subsequently repurposed for marketing a new line of skincare products without obtaining a new, specific lawful basis.

Data Minimisation: The data collected must be adequate, relevant, and limited to what is necessary in relation to the purposes for which it is processed. A telemedicine platform must not engage in excessive data collection. For instance, if a patient needs to upload a single photograph for a diagnosis, the application should not request access to the user's entire device photo library. The principle dictates that the platform should only collect the minimum information required to provide the service safely and effectively.

Accuracy: Personal data must be accurate and, where necessary, kept up to date. Every reasonable step must be taken to ensure that personal data that is inaccurate, having regard to the purposes for which it is processed, is erased or rectified without delay. A telemedicine platform must provide clear and simple mechanisms for patients to correct their information, such as rectifying a misspelled name, an incorrect date of birth, or an outdated allergy record.

Storage Limitation: Personal data must be kept in a form which permits identification of data subjects for no longer than is necessary for the purposes for which the personal data are processed. Health records from a chat consultation cannot be retained indefinitely. Telemedicine providers must establish and enforce a clear data retention policy, grounded in national medical and legal requirements. Once this retention period expires, the data must be securely and permanently deleted or fully anonymized.

Integrity and Confidentiality: This principle mandates that personal data be processed in a manner that ensures its appropriate security, including protection against unauthorized or unlawful processing and against accidental loss, destruction, or damage. This requires the implementation of robust technical and organizational measures, such as end-to-end encryption for all chat communications and video calls, as well as encryption for all data stored at rest in databases or cloud storage.

Accountability: The data controller is responsible for, and must be able to demonstrate, compliance with all the preceding principles. This is not a passive obligation; it requires active measures. A telemedicine provider must maintain comprehensive documentation, including data processing records, data protection policies, evidence of consent, and records of staff training and audits, to prove its adherence to the GDPR.

These principles are not a checklist to be ticked off but form a cohesive and interdependent system. A failure to adhere to one principle often triggers a cascade of non-compliance across others. For instance, if a telemedicine app defines its purpose narrowly as "providing a one-off consultation for a specific ailment," this adherence to the Purpose Limitation principle directly informs its obligations under others. Because the purpose is limited, the app must only collect data strictly relevant to that ailment, thereby complying with Data Minimisation. Once the consultation is concluded and any legally mandated retention period has passed, the data is no longer necessary for that original purpose and must be deleted, fulfilling the Storage Limitation principle. If the app provider later decides to use this same data to train a new AI model without a new lawful basis, it not only violates the original Purpose Limitation but also creates a breach of the Storage Limitation principle, as there is no longer a valid reason for retaining the data. This demonstrates that compliance is a dynamic process that must be continuously re-evaluated with every new proposed use of patient data.
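The cascade described above can be made concrete in code. The sketch below is a minimal illustration, assuming a hypothetical `Purpose` registry in which each declared purpose lists the fields it justifies and an illustrative retention period. Real retention periods must come from national medical and legal requirements, and the reuse check is deliberately simplified: it ignores the compatibility assessment described in Article 6(4).

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Purpose:
    """A declared processing purpose (hypothetical registry entry)."""
    name: str
    allowed_fields: frozenset
    retention: timedelta  # illustrative; set from national medical/legal rules


CONSULTATION = Purpose(
    name="one_off_consultation",
    allowed_fields=frozenset({"patient_id", "symptoms", "condition_photo", "clinician_notes"}),
    retention=timedelta(days=10 * 365),  # assumed ~10 years, for illustration only
)


def minimise(submitted: dict, purpose: Purpose) -> dict:
    """Data minimisation: drop every field the declared purpose does not justify."""
    return {k: v for k, v in submitted.items() if k in purpose.allowed_fields}


def must_delete(collected_on: date, purpose: Purpose, today: date) -> bool:
    """Storage limitation: True once the retention period for the purpose has elapsed."""
    return today >= collected_on + purpose.retention


def can_reuse(original: Purpose, new_purpose: str, has_new_lawful_basis: bool) -> bool:
    """Purpose limitation (simplified): reuse for another purpose needs its own lawful basis."""
    return new_purpose == original.name or has_new_lawful_basis


record = minimise({"patient_id": "p1", "symptoms": "itchy rash", "contacts": ["c1"]}, CONSULTATION)
print(record)  # {'patient_id': 'p1', 'symptoms': 'itchy rash'}
```

Keeping the allowed fields and the retention period on the same purpose object means that defining a purpose narrowly automatically narrows what is collected and how long it is kept, which mirrors the interdependence the principles create.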

1.2. The Elevated Status of Health Data: "Special Category Data" (Article 9)

The GDPR affords the highest level of protection to certain types of personal data that are considered particularly sensitive. Health data sits prominently within this protected class, known as "special categories of personal data."

Definition: Article 4(15) of the GDPR defines 'data concerning health' as personal data related to the physical or mental health of a natural person, including the provision of healthcare services, which reveals information about his or her health status. This definition is intentionally broad and encompasses a wide array of information processed by a telemedicine service. It includes not only explicit medical records and diagnoses but also chat transcripts discussing symptoms and images of conditions uploaded by a patient. Genetic and biometric data are defined separately (Articles 4(13) and 4(14)) but are also special categories of data under Article 9.

The General Prohibition: Article 9(1) of the GDPR establishes a foundational rule: the processing of special categories of personal data is prohibited by default. This prohibition underscores the significant risks associated with the misuse of such data, including the potential for discrimination, social stigma, and other fundamental rights violations. This default ban means that organizations can only process health data if they can satisfy one of a limited number of specific and narrowly interpreted exceptions.

Included Data Types in a Telemedicine Context:

Data Concerning Health: This is the most common type and includes any information about a person's past, present, or future physical or mental health status. A chat log detailing a patient's symptoms, a doctor's notes, or a prescription issued through the platform all fall under this category.

Genetic Data: This refers to data relating to the inherited or acquired genetic characteristics of an individual, such as from the analysis of a biological sample. If a patient uploads a genetic test result to discuss with a clinician via chat, that data is protected under Article 9.

Biometric Data: This includes personal data resulting from specific technical processing relating to physical, physiological, or behavioral characteristics, such as facial images or fingerprint data, when used for the purpose of uniquely identifying a person. A telemedicine app using facial recognition for user login would be processing biometric data under Article 9.

Inferred Data: Crucially, the definition extends to data that may not be explicitly medical but from which a health status can be inferred. If a platform uses analytics to deduce that a user may be suffering from a mental health condition based on their chat patterns or search history, and then treats that user differently as a result (e.g., by targeting them with specific content), this inferred information can itself become special category health data. This creates a significant and often underestimated compliance risk for platforms employing advanced analytics or AI, as they may inadvertently begin processing special category data without having the necessary legal safeguards in place.
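One way to contain this risk is to tag analytics outputs that reveal health status and withhold them from any purpose that lacks a documented Article 9 condition. The sketch below is a minimal illustration; the attribute names and the `article9_register` mapping are hypothetical, and deciding which inferences actually reveal health status remains a documented human judgement rather than something a lookup set can settle.

```python
# Hypothetical analytics outputs that the organisation has judged to reveal health status.
HEALTH_REVEALING = frozenset({"likely_depression", "diabetes_risk_score", "pregnancy_likelihood"})


def is_special_category(attribute: str) -> bool:
    """An inferred attribute is treated as special category data if it reveals health status."""
    return attribute in HEALTH_REVEALING


def release_for_purpose(attributes: dict, purpose: str, article9_register: dict) -> dict:
    """Withhold inferred health attributes unless an Article 9 condition is documented for this purpose.

    `article9_register` maps purpose names to the recorded condition, e.g. "9(2)(h)".
    """
    if purpose in article9_register:
        return dict(attributes)
    return {k: v for k, v in attributes.items() if not is_special_category(k)}


segment = {"sessions_last_30d": 12, "likely_depression": 0.81}
print(release_for_purpose(segment, "content_targeting", {}))  # {'sessions_last_30d': 12}
```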

1.3. Establishing Lawful Bases for Processing Health Data (Articles 6 & 9)

To overcome the general prohibition in Article 9(1), a telemedicine provider must satisfy a stringent "dual-lock" legal requirement. It is not sufficient to meet only one condition; a lawful basis from Article 6 must be identified and documented, and this must be paired with a separate, specific condition for processing special category data from Article 9. These two conditions do not need to be thematically linked. For instance, an organization might rely on "performance of a contract" under Article 6 while relying on "provision of medical diagnosis" under Article 9.

Article 6 Lawful Bases Relevant to Telemedicine: Every act of data processing requires a lawful basis under Article 6. For telemedicine services, the most relevant are:

Consent (Article 6(1)(a)): The individual has given clear, affirmative consent for their data to be processed for a specific purpose.

Contract (Article 6(1)(b)): The processing is necessary for the performance of a contract to which the individual is party. This is highly relevant, as a patient using a telemedicine service is entering into a contract for the provision of healthcare.

Legal Obligation (Article 6(1)(c)): The processing is necessary to comply with a legal duty, such as a mandatory requirement to report certain infectious diseases to public health authorities.

Vital Interests (Article 6(1)(d)): The processing is necessary to protect the life of the patient or of another person, typically in a medical emergency.

Article 9 Conditions for Processing Health Data: In addition to an Article 6 basis, one of the following Article 9 conditions must be met to process health data:

Explicit Consent (Article 9(2)(a)): The individual has given explicit consent to the processing of their health data for one or more specified purposes. This is a higher bar than ordinary consent under Article 6: it requires an express, affirmative statement from the user, such as ticking a box next to a statement that names the health data and the purpose.

Health and Social Care (Article 9(2)(h)): Processing is necessary for the purposes of preventive or occupational medicine, for the assessment of the working capacity of the employee, medical diagnosis, the provision of health or social care or treatment, or the management of health or social care systems and services. This is a cornerstone condition for telemedicine providers, as it directly covers the core activities of diagnosis and treatment. It applies only where the data is processed by or under the responsibility of a professional subject to an obligation of professional secrecy, such as a registered clinician (Article 9(3)).

Public Interest in the Area of Public Health (Article 9(2)(i)): Processing is necessary for reasons of public interest in the area of public health, such as protecting against serious cross-border threats to health or ensuring high standards of quality and safety of healthcare.

Vital Interests (Article 9(2)(c)): Processing is necessary to protect the vital interests of the patient or another person where the patient is physically or legally incapable of giving consent, for example an unconscious patient in an emergency.
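The dual-lock requirement lends itself to an automated check over a register of processing activities. In this sketch the enums list only the bases and conditions discussed above (the GDPR contains others, such as legitimate interests or the research condition), and the activity names and pairings are illustrative assumptions rather than a recommended mapping.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Art6(Enum):
    CONSENT = "6(1)(a)"
    CONTRACT = "6(1)(b)"
    LEGAL_OBLIGATION = "6(1)(c)"
    VITAL_INTERESTS = "6(1)(d)"


class Art9(Enum):
    EXPLICIT_CONSENT = "9(2)(a)"
    VITAL_INTERESTS = "9(2)(c)"
    HEALTH_CARE = "9(2)(h)"
    PUBLIC_HEALTH = "9(2)(i)"


@dataclass(frozen=True)
class ProcessingActivity:
    name: str
    involves_health_data: bool
    art6: Optional[Art6] = None
    art9: Optional[Art9] = None


def dual_lock_gaps(activity: ProcessingActivity) -> List[str]:
    """Return missing 'locks': every activity needs Article 6; health data also needs Article 9."""
    gaps = []
    if activity.art6 is None:
        gaps.append(f"{activity.name}: no Article 6 lawful basis recorded")
    if activity.involves_health_data and activity.art9 is None:
        gaps.append(f"{activity.name}: no Article 9 condition recorded")
    return gaps


register = [
    ProcessingActivity("chat_consultation", True, Art6.CONTRACT, Art9.HEALTH_CARE),
    ProcessingActivity("symptom_analytics", True, Art6.CONSENT),  # second lock missing
]
for activity in register:
    for gap in dual_lock_gaps(activity):
        print(gap)  # symptom_analytics: no Article 9 condition recorded
```

Checking each activity separately, rather than the service as a whole, reflects the point that a single patient interaction involves several processing activities with their own justifications.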

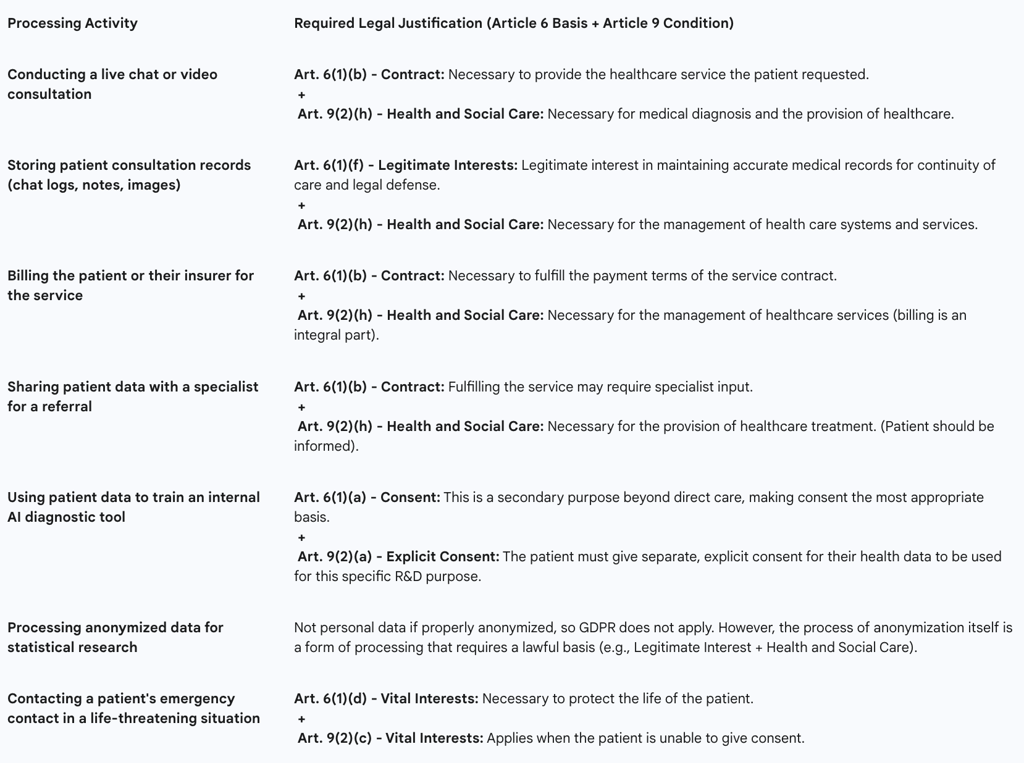

Mapping specific processing activities to the correct combination of legal bases is a critical compliance task. A single patient interaction on a telemedicine platform involves multiple distinct processing activities, each requiring its own justification. A common error is to apply a single, blanket legal basis to all activities, which can lead to non-compliance. The following table provides a practical mapping for common telemedicine scenarios.

Table 1: Lawful Bases for Processing Health Data in Telemedicine

Part II: Core Compliance Obligations for Telemedicine Services

Beyond the foundational principles, the GDPR imposes specific, actionable duties on organizations that process personal data. For telemedicine providers, these obligations are paramount and require the development of robust internal processes, clear patient-facing interfaces, and a state of constant readiness for critical events like data breaches. Operationalizing these duties effectively is central to achieving and maintaining compliance.

2.1. The Doctrine of Explicit Consent: Obtaining, Managing, and Documenting Patient Consent in a Digital Environment

When relying on consent as a legal basis for processing health data, the GDPR sets an exceptionally high bar. Under Article 9, "explicit consent" is required, which is more stringent than the already high bar for ordinary consent under Article 6. It cannot be implied; it must be a direct, affirmative, and specific statement from the individual.

Practical Implementation in Chat and Telemedicine Interfaces:

Granularity and Unbundling: Consent requests must be granular. A single, all-encompassing "I agree" checkbox that bundles consent for treatment with consent for marketing and research is non-compliant. A user must be able to consent to the core service (the consultation) separately from any secondary purposes, such as allowing their anonymized data to be used for service improvement or clinical research.

Clarity, Simplicity, and Transparency: The language used in consent requests must be clear, plain, and free of legalistic jargon. It is essential to inform the patient not just that their data will be processed, but how and for what specific purpose. For example: "To help the doctor diagnose your condition, we need your explicit consent to record and save the transcript of this chat to your secure electronic health record".

Just-in-Time Consent: Rather than front-loading all consent requests into a single, overwhelming screen at sign-up, a better practice is to use "just-in-time" notices. For instance, when a user is about to upload a photograph during a chat, a pop-up should appear asking for specific consent to process that image for diagnostic purposes. This contextual approach ensures consent is truly informed.

Ease of Withdrawal: The GDPR mandates that withdrawing consent must be as easy as giving it. A telemedicine app must provide a clear, easily accessible mechanism within its privacy settings or user profile for a patient to withdraw their consent at any time. The platform must also clearly explain the consequences of withdrawal (e.g., "If you withdraw consent for us to process your health data, we will be unable to provide you with further consultations").

Consent for Minors: Processing the health data of children requires special care and, depending on the EU member state's law and the child's age, may require verifiable consent from a parent or guardian.

Documentation and Record-Keeping: The provider must maintain a comprehensive audit trail of consent. This log must be able to prove who consented, when they consented, how they consented (e.g., which checkbox was ticked), and the specific information they were shown at the time of consent. This documentation is a critical component of the accountability principle.
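The requirements above suggest an append-only consent ledger. This minimal sketch uses hypothetical names (`ConsentLedger`, purpose strings such as `"diagnostic_photo"`). It records one purpose per event so consent is never bundled, treats withdrawal as an event recorded the same way as a grant, and stores a SHA-256 fingerprint of the exact notice text shown, on the assumption that the full notice versions are archived elsewhere so the wording can later be matched.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class ConsentEvent:
    """One append-only audit entry: who, which purpose, when, how, and what they were shown."""
    user_id: str
    purpose: str        # exactly one purpose per event, so consent is never bundled
    granted: bool       # False records a withdrawal
    timestamp: datetime
    mechanism: str      # e.g. "checkbox:photo_upload_dialog"
    notice_sha256: str  # fingerprint of the exact notice text displayed


class ConsentLedger:
    def __init__(self) -> None:
        self._events: List[ConsentEvent] = []

    def record(self, user_id: str, purpose: str, granted: bool, mechanism: str,
               notice_text: str, now: Optional[datetime] = None) -> ConsentEvent:
        event = ConsentEvent(
            user_id=user_id,
            purpose=purpose,
            granted=granted,
            timestamp=now or datetime.now(timezone.utc),
            mechanism=mechanism,
            notice_sha256=hashlib.sha256(notice_text.encode("utf-8")).hexdigest(),
        )
        self._events.append(event)
        return event

    def withdraw(self, user_id: str, purpose: str, mechanism: str,
                 notice_text: str = "") -> ConsentEvent:
        # Withdrawing is a single call, just like granting.
        return self.record(user_id, purpose, False, mechanism, notice_text)

    def has_consent(self, user_id: str, purpose: str) -> bool:
        # The most recent event for this user and purpose wins; no event means no consent.
        relevant = [e for e in self._events if e.user_id == user_id and e.purpose == purpose]
        return bool(relevant) and relevant[-1].granted

    def history(self, user_id: str) -> List[ConsentEvent]:
        """Full audit trail for one user, e.g. to demonstrate compliance to a regulator."""
        return [e for e in self._events if e.user_id == user_id]


ledger = ConsentLedger()
ledger.record("u1", "diagnostic_photo", True, "checkbox:photo_upload_dialog",
              "We will use this photo only to help the doctor diagnose your condition.")
print(ledger.has_consent("u1", "diagnostic_photo"))  # True
print(ledger.has_consent("u1", "research"))          # False
```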

2.2. Operationalizing Data Subject Rights in a Digital Health Context

The GDPR fundamentally reframes the relationship between individuals and their data, granting them a suite of powerful rights. This framework transforms the patient from a passive subject of care into an active participant in the governance of their own health information. For a telemedicine provider, this necessitates designing platforms not just for clinical workflow, but for user-led data self-management. Simply providing an email address for requests is insufficient; a truly compliant and user-centric service embeds these rights directly into its interface.

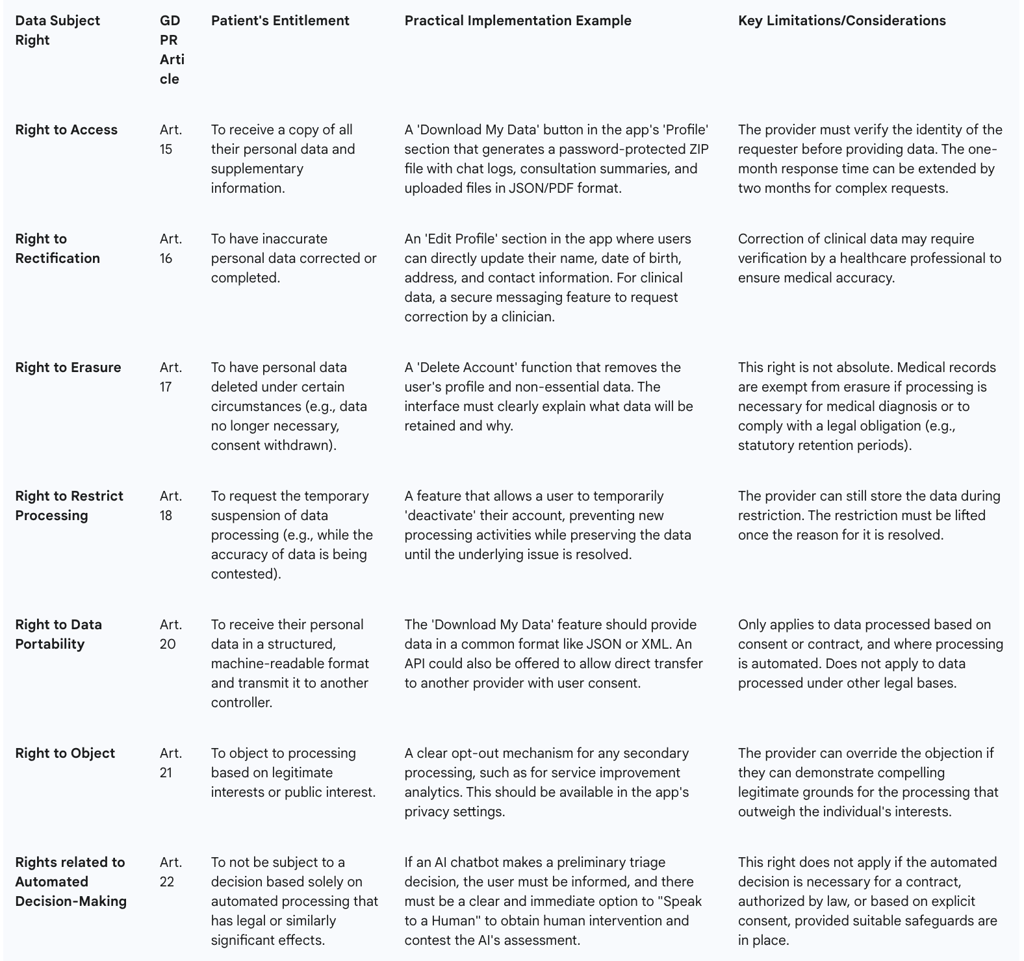

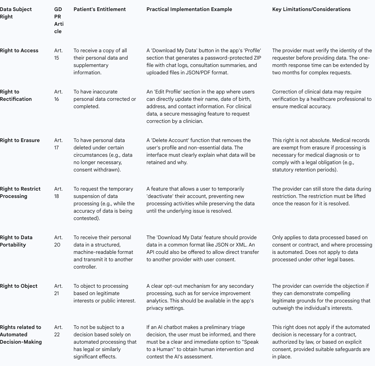

The Eight Fundamental Rights: Telemedicine providers must have procedures in place to facilitate all eight data subject rights: the right to be informed, the right of access, the rights to rectification, erasure, restriction of processing, data portability, and objection, and rights in relation to automated decision-making and profiling.

Implementation within a Telemedicine Platform:

Right to Access (Article 15): Patients have the right to obtain confirmation that their data is being processed and to receive a copy of that data. The most effective way to implement this is through a "Download My Data" function within the user's account settings. This feature should allow the patient to securely export all their data—including chat transcripts, consultation notes, uploaded images, and billing information—in a common electronic format (e.g., a password-protected ZIP file containing JSON or CSV files).

Right to Rectification (Article 16): Patients must have a straightforward way to correct inaccurate or incomplete personal data. This should be a self-service function within the app's profile or settings page, allowing users to directly edit information like their name, contact details, or date of birth.

Right to Erasure ('Right to be Forgotten') (Article 17): A patient can request the deletion of their personal data. However, this right is not absolute and is one of the most complex to implement in a healthcare context. While a user should be able to delete their app account, the underlying medical records may be subject to mandatory legal retention periods. The platform must clearly communicate this limitation to the user. For example: "You can delete your account, which will remove your profile from our active systems. However, in accordance with national medical regulations, we are legally required to retain a secure archive of your consultation records for a period of [X] years".

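Right to Data Portability (Article 20): This right allows individuals to obtain and reuse their personal data for their own purposes across different services. It applies to personal data the patient has provided, where processing is based on consent or contract and carried out by automated means; data the provider derives or infers, such as a clinician's assessment, generally falls outside it. Within that scope, it empowers a patient to move their data from one telemedicine provider to another. The platform must be capable of providing the data in a structured, commonly used, and machine-readable format (e.g., XML, JSON) to facilitate this transfer.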

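Procedural Requirements: Providers must establish and document internal workflows for handling all data subject requests. Where a patient makes a request electronically, the response should be provided electronically where possible, unless the patient asks otherwise. A response must be provided without undue delay and at the latest within one month of receipt; this can be extended by two further months for complex or numerous requests, provided the patient is told of the extension and the reasons for it within the first month. All staff, especially customer-facing teams, must be trained to recognize a data subject request and escalate it to the appropriate person or department immediately.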
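The one-month deadline is easy to miscalculate near month ends. The sketch below assumes the convention described in ICO guidance: the deadline is the corresponding calendar date in the following month, or the last day of that month where no such date exists. It does not adjust for deadlines that fall on weekends or public holidays, and the function names are hypothetical.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Same day-of-month `months` later, clamped to the last day if that day doesn't exist."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def response_deadline(received: date, extended: bool = False) -> date:
    """One month from receipt, or three in total if the two-month extension is invoked."""
    return add_months(received, 3 if extended else 1)


print(response_deadline(date(2024, 1, 31)))  # 2024-02-29
```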

Table 2: Data Subject Rights and Practical Implementation in Telemedicine

2.3. Data Breach Management: The 72-Hour Notification Rule and Incident Response

The potential for a data breach involving sensitive health information is one of the most significant risks for any telemedicine provider. The GDPR imposes strict obligations for managing and reporting such incidents.

Definition of a Personal Data Breach: A breach is defined as a breach of security leading to the accidental or unlawful destruction, loss, alteration, unauthorized disclosure of, or access to, personal data transmitted, stored, or otherwise processed. This covers not only malicious cyberattacks but also accidental data loss or internal unauthorized access.

The 72-Hour Notification Rule (Article 33): In the event of a personal data breach, the telemedicine provider (as the data controller) must notify the competent supervisory authority, such as a national data protection authority in the EU or the Information Commissioner's Office (ICO) under the UK GDPR, without undue delay and, where feasible, not later than 72 hours after becoming aware of it, unless the breach is unlikely to result in a risk to the rights and freedoms of individuals. If the notification is not made within 72 hours, it must be accompanied by the reasons for the delay.

Notification to Affected Individuals (Article 34): If the breach is likely to result in a high risk to the rights and freedoms of individuals, the provider must also communicate the breach to the affected individuals directly and without undue delay. Given the sensitivity of health data, a breach involving patient records is very likely to meet this "high risk" threshold. Direct communication is not required if, for example, the affected data was rendered unintelligible to unauthorized persons, such as by encryption whose keys were not compromised (Article 34(3)).

The 72-hour rule creates immense operational pressure, necessitating not just a legal policy but a pre-vetted, cross-functional "breach squad" comprising legal, IT security, public relations, and executive leadership that can be activated at a moment's notice. The core challenge is not the act of notification itself, but the ability to conduct a rapid investigation and risk assessment within that tight timeframe. When a security alert is triggered, the clock starts ticking from the moment the organization becomes "aware." The team must first conduct a forensic analysis to determine if personal data was actually compromised. If it was, they must then make a critical legal judgment call under extreme pressure: is the breach "likely to result in a high risk"? This assessment—which dictates whether thousands of patients must be notified—has enormous reputational and legal consequences. This demonstrates that compliance is not about having a policy document, but about having the technical and operational readiness to execute a complex investigation and legal analysis in under three days, requiring significant upfront investment in security technology and expert personnel.
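Tooling cannot make the legal judgement, but it can make the clock and the two notification thresholds explicit. This sketch is a simplification under stated assumptions: the `Risk` levels stand in for the outcome of a documented human risk assessment, the `data_unintelligible` flag models only one of the Article 34(3) exceptions, and all names are hypothetical. Breaches that need no notification must still be documented internally under Article 33(5).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from enum import Enum
from typing import Optional

NOTIFICATION_WINDOW = timedelta(hours=72)


class Risk(Enum):
    UNLIKELY = 0  # unlikely to result in a risk: document internally, no notification
    RISK = 1      # notify the supervisory authority
    HIGH = 2      # also notify affected individuals


@dataclass(frozen=True)
class BreachAssessment:
    became_aware_at: datetime          # the clock starts here, not when the breach occurred
    risk: Risk                         # outcome of the documented risk assessment
    data_unintelligible: bool = False  # e.g. encrypted, keys not compromised (Art. 34(3)(a))


def authority_deadline(a: BreachAssessment) -> Optional[datetime]:
    """Latest time for notifying the supervisory authority, or None if not required."""
    if a.risk is Risk.UNLIKELY:
        return None
    return a.became_aware_at + NOTIFICATION_WINDOW


def must_notify_individuals(a: BreachAssessment) -> bool:
    return a.risk is Risk.HIGH and not a.data_unintelligible


def hours_remaining(a: BreachAssessment, now: datetime) -> Optional[float]:
    deadline = authority_deadline(a)
    return None if deadline is None else (deadline - now).total_seconds() / 3600


aware = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
incident = BreachAssessment(became_aware_at=aware, risk=Risk.HIGH)
print(authority_deadline(incident))       # 2024-03-04 09:00:00+00:00
print(must_notify_individuals(incident))  # True
```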

2.4. Accountability in Practice: Records of Processing Activities (RoPAs) and the Role of the Data Protection Officer (DPO)

Accountability is the linchpin of the GDPR framework. It requires organizations to take responsibility for their data processing and to be able to demonstrate their compliance. Two key mechanisms for achieving this are the maintenance of a RoPA and the appointment of a DPO.

Records of Processing Activities (RoPA - Article 30): While there are limited exemptions for small businesses, most telemedicine providers, due to their processing of special category data, will be legally required to maintain a detailed internal record of their data processing activities. A RoPA is more than a simple data map; it is a comprehensive inventory that must include:

The name and contact details of the controller and DPO.

The specific purposes of the processing.

A description of the categories of data subjects (e.g., patients, clinicians) and categories of personal data (e.g., chat logs, diagnostic images).

The categories of recipients to whom the data has been or will be disclosed.

Details of any transfers of data to a third country.

Envisaged time limits for erasure of the different categories of data.

A general description of the technical and organizational security measures in place.

The RoPA is a foundational compliance document that must be kept up-to-date and made available to the supervisory authority on request.
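A RoPA is often kept in a spreadsheet or governance tool, but its required content maps naturally onto a structured record that can be checked for gaps. This sketch assumes a hypothetical `RopaEntry` type whose fields mirror the list above and flags fields left blank; an empty transfers list is allowed because "no third-country transfers" is a valid answer. The organization and contact details are placeholders.

```python
from dataclasses import dataclass, fields
from typing import Dict, List


@dataclass
class RopaEntry:
    """One processing activity in the Article 30 record (fields mirror the list above)."""
    controller_contact: str
    dpo_contact: str
    purposes: List[str]
    data_subject_categories: List[str]
    personal_data_categories: List[str]
    recipient_categories: List[str]
    third_country_transfers: List[str]   # an empty list means "none", a valid answer
    erasure_time_limits: Dict[str, str]  # data category -> envisaged time limit
    security_measures: str


MAY_BE_EMPTY = {"third_country_transfers"}


def missing_fields(entry: RopaEntry) -> List[str]:
    """Names of required fields that were left blank."""
    return [f.name for f in fields(entry)
            if f.name not in MAY_BE_EMPTY and not getattr(entry, f.name)]


entry = RopaEntry(
    controller_contact="Example Telehealth Ltd, privacy@example.org",  # hypothetical
    dpo_contact="dpo@example.org",
    purposes=["teleconsultation"],
    data_subject_categories=["patients", "clinicians"],
    personal_data_categories=["chat logs", "diagnostic images"],
    recipient_categories=["partner pharmacies"],
    third_country_transfers=[],
    erasure_time_limits={"chat logs": "per national retention rules"},
    security_measures="",
)
print(missing_fields(entry))  # ['security_measures']
```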

Data Protection Officer (DPO - Articles 37-39): The appointment of a DPO is mandatory for all public authorities and for organizations whose core activities consist of processing operations which require regular and systematic monitoring of individuals on a large scale, or of large-scale processing of special categories of data. This threshold is likely to be met by most telemedicine providers operating at scale.

Role and Responsibilities: The DPO is a designated expert responsible for overseeing the organization's data protection strategy and ensuring GDPR compliance. Their key tasks include advising the organization on its obligations, monitoring compliance through audits, providing advice regarding DPIAs, and acting as the primary point of contact for both supervisory authorities and individuals (data subjects).

Independence and Expertise: The DPO must be an expert in data protection law and practices. They must be able to perform their duties with a sufficient degree of autonomy and must report to the highest level of management. The DPO role can be filled by an employee or an external contractor.

Part III: Architecting for Compliance: Technical and Organizational Measures

The GDPR mandates a proactive and preventative approach to data protection, moving beyond reactive measures to embed privacy into the very fabric of systems and services. This philosophy is encapsulated in the legal requirement of "Data Protection by Design and by Default" and is supported by specific obligations to implement robust technical and organizational security measures. For telemedicine providers, this means that compliance cannot be an afterthought; it must be a core consideration from the initial concept of a service through to its development, deployment, and ongoing operation.

3.1. Privacy by Design and by Default (Article 25): A Foundational Approach for Health-Tech

Article 25 of the GDPR elevates Privacy by Design (PbD) from a best-practice concept to a legal obligation. It requires data controllers to implement appropriate technical and organizational measures to effectively integrate data protection principles into their processing activities from the outset.

Core Concepts:

Privacy by Design (PbD): This means that data protection considerations are embedded into the design and architecture of IT systems, business practices, and services from the very beginning of the development lifecycle. Privacy is not a feature to be added on later; it is a fundamental component of the system's DNA.

Privacy by Default: This principle requires that, by default, the most privacy-friendly settings are applied automatically. The user should not have to take any action to protect their privacy; it should be the baseline. This means processing only the data necessary for each specific purpose, for the shortest time necessary, and with the most limited accessibility.

The Seven Foundational Principles of PbD (as formulated by Ann Cavoukian) in a Healthcare Context:

Proactive not Reactive; Preventative not Remedial: Anticipate and prevent privacy risks before they occur. For a telemedicine app, this means conducting a Data Protection Impact Assessment (DPIA) before development begins, rather than dealing with a privacy flaw after launch.

Privacy as the Default Setting: The highest level of privacy protection is the default. For example, a new patient's profile is not publicly visible or searchable by default, and any data sharing for secondary purposes (like research) is set to "off" by default, requiring an explicit opt-in from the user.

Privacy Embedded into Design: Privacy is an essential component of the core functionality. User authentication, such as two-factor authentication (2FA), is built into the login process from the start, not added as a patch.

Full Functionality — Positive-Sum, not Zero-Sum: Avoid creating false dichotomies between privacy and other objectives like security or usability. The goal is to achieve all legitimate objectives, engineering solutions that deliver both functionality and privacy without unnecessary trade-offs.

End-to-End Security — Lifecycle Protection: Data is protected throughout its entire lifecycle, from collection to secure destruction. This involves applying security measures like encryption at all stages and enforcing strict data retention policies.

Visibility and Transparency — Keep it Open: All stakeholders should be able to see that the system operates according to its promises and objectives. This involves clear privacy policies, transparent consent mechanisms, and maintaining detailed logs and documentation that can be independently verified.

Respect for User Privacy — Keep it User-Centric: The system should be designed with the user's interests at its core, offering them strong privacy defaults, clear notices, and user-friendly controls to manage their data.
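Privacy as the default setting can be enforced in the data model itself. A minimal sketch, assuming a hypothetical `PrivacySettings` type: every optional sharing flag starts as `False`, two-factor authentication starts enabled, and only the listed opt-in flags can be switched on through `opt_in`, so secondary uses always require an explicit user action.

```python
from dataclasses import dataclass, replace

# The only settings a user may switch on; everything else is fixed by design.
OPT_IN_FIELDS = frozenset({
    "profile_searchable",
    "share_for_research",
    "share_for_service_improvement",
    "marketing_messages",
})


@dataclass(frozen=True)
class PrivacySettings:
    """Hypothetical per-patient settings; the defaults are the most protective values."""
    profile_searchable: bool = False
    share_for_research: bool = False
    share_for_service_improvement: bool = False
    marketing_messages: bool = False
    two_factor_required: bool = True


def opt_in(settings: PrivacySettings, name: str) -> PrivacySettings:
    """Return new settings with one secondary use explicitly enabled by the user."""
    if name not in OPT_IN_FIELDS:
        raise ValueError(f"{name!r} is not a user opt-in setting")
    return replace(settings, **{name: True})


new_patient = PrivacySettings()  # nothing is shared until the patient acts
print(opt_in(new_patient, "share_for_research").share_for_research)  # True
```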

From an economic perspective, Privacy by Design is a crucial risk mitigation and cost-control strategy. Integrating privacy controls early in the development lifecycle is significantly more efficient and less expensive than attempting to retrofit them into a live system. Failure to do so creates "privacy debt," which, much like technical debt, becomes exponentially more costly and disruptive to remediate over time. A telemedicine app built without considering granular consent or data deletion workflows may require a complete and expensive re-architecture to become compliant, potentially delaying its market entry or even forcing a product recall.

3.2. Essential Security Safeguards: Encryption, Access Controls, and Pseudonymization

To comply with the principle of "integrity and confidentiality" (Article 5(1)(f)) and the security requirements of Article 32, telemedicine providers must implement a suite of specific technical and organizational measures to protect health data.

Encryption: All personal health data must be protected by strong encryption. This is non-negotiable for any credible healthcare service.

Encryption in Transit: Data must be encrypted while it is moving between the patient's device, the provider's servers, and the clinician's device. This is typically achieved using strong, up-to-date protocols like Transport Layer Security (TLS) for all API calls, chat messages, and video streams.

Encryption at Rest: Data must also be encrypted when it is stored in databases, on servers, or in cloud storage. This ensures that if an attacker gains physical or logical access to the storage medium, the data remains unreadable without the corresponding decryption keys.
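For encryption in transit, Python's standard `ssl` module can build a client context that verifies certificates and hostnames and refuses protocol versions older than TLS 1.2. This sketch covers transport security only; encryption at rest is usually provided by the database or storage layer, or by a vetted library such as `cryptography`, and is not shown. Using TLS 1.2 as the floor is an assumption to be aligned with the provider's own security policy.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side TLS context for API, chat, and media connections."""
    ctx = ssl.create_default_context()            # certificate and hostname verification on
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL and TLS 1.0/1.1
    return ctx


ctx = make_client_tls_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)  # True True
```

The context can then be passed to standard clients, for example `http.client.HTTPSConnection(host, context=ctx)` or `urllib.request.urlopen(url, context=ctx)`.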

Access Controls: Access to patient data must be strictly controlled and limited on a "need-to-know" basis.

Role-Based Access Control (RBAC): This is a fundamental security practice where access rights are assigned based on an individual's role within the organization. For example, a clinician should only be able to access the records of patients under their direct care, while a member of the billing team should only see the minimum data necessary to process payments, without access to sensitive clinical notes.

Audit Logs: All access to personal data must be logged. These audit trails should record who accessed the data, what data they accessed, when they accessed it, and from where. These logs are essential for detecting and investigating potential security incidents and for demonstrating accountability.
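Role-based access and audit logging are typically enforced together at the data-access layer. This sketch assumes a hypothetical role-to-section mapping and a `care_team` set on each record; clinicians are further restricted to their own patients, and every read attempt, granted or denied, is written to the audit log with who, what, when, and from where.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical mapping of roles to the record sections they need.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "clinician": {"demographics", "chat_transcript", "clinical_notes", "images"},
    "billing": {"billing"},
    "support": {"demographics"},
}


@dataclass
class PatientRecord:
    patient_id: str
    care_team: Set[str]  # clinicians directly responsible for this patient
    sections: Dict[str, object]


def can_access(user_id: str, role: str, record: PatientRecord, section: str) -> bool:
    allowed = section in ROLE_PERMISSIONS.get(role, set())
    if role == "clinician":
        allowed = allowed and user_id in record.care_team  # only their own patients
    return allowed


@dataclass
class AuditedStore:
    audit_log: List[dict] = field(default_factory=list)

    def read(self, user_id: str, role: str, record: PatientRecord, section: str, source_ip: str):
        granted = can_access(user_id, role, record, section)
        # Log every attempt, including denials: who, what, when, from where.
        self.audit_log.append({
            "who": user_id, "role": role, "patient": record.patient_id,
            "section": section, "when": datetime.now(timezone.utc).isoformat(),
            "where": source_ip, "granted": granted,
        })
        if not granted:
            raise PermissionError(f"{role} {user_id} may not read {section}")
        return record.sections.get(section)


record = PatientRecord("p1", {"dr_a"}, {"clinical_notes": "Contact dermatitis", "billing": "INV-1"})
print(can_access("bill_1", "billing", record, "clinical_notes"))  # False
```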

Pseudonymization: This is a data protection technique that involves processing personal data in such a way that it can no longer be attributed to a specific individual without the use of additional information. This is achieved by replacing direct identifiers (like name, address, or patient ID number) with a pseudonym or token. The "key" that links the pseudonym back to the individual's identity must be kept separately and securely. Pseudonymization is a highly recommended measure, particularly when data is used for secondary purposes like research, analytics, or system testing, as it significantly reduces the privacy risk if the dataset is compromised.
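One common way to pseudonymise identifiers is a keyed hash (HMAC-SHA-256): the same patient always maps to the same pseudonym, so records can still be linked for analysis, but the pseudonym cannot be recomputed without the secret key. In this sketch the field names are hypothetical and the key plays the role of the "additional information", so it must be stored separately, for example in a key-management service. Re-linking is done by recomputing pseudonyms for known IDs or through a separately secured lookup table. Quasi-identifiers such as age band or postcode can still enable re-identification, and pseudonymised data remains personal data under the GDPR (Recital 26).

```python
import hashlib
import hmac
import secrets
from typing import Dict, Iterable

DIRECT_IDENTIFIERS = ("patient_id", "name", "address")  # hypothetical field names


def new_key() -> bytes:
    """Generate a pseudonymisation key; store it apart from the pseudonymised data."""
    return secrets.token_bytes(32)


def pseudonym(patient_id: str, key: bytes) -> str:
    """Keyed hash: stable for the same patient, not recomputable without the key."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymise_record(record: Dict[str, object], key: bytes,
                        identifiers: Iterable[str] = DIRECT_IDENTIFIERS) -> Dict[str, object]:
    """Replace direct identifiers with a single pseudonym token."""
    drop = set(identifiers)
    out = {k: v for k, v in record.items() if k not in drop}
    out["pseudonym"] = pseudonym(str(record["patient_id"]), key)
    return out


key = new_key()
row = pseudonymise_record(
    {"patient_id": "P-001", "name": "Jane Doe", "age_band": "40-49", "diagnosis": "eczema"}, key)
print(sorted(row))  # ['age_band', 'diagnosis', 'pseudonym']
```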

Other Essential Measures: A holistic security posture also includes:

Regular Penetration Testing and Vulnerability Scanning: Proactively identifying and remediating security weaknesses in the platform.

Secure Software Development Lifecycle (SSDLC): Integrating security checks and best practices into every stage of the development process.

Regular Software Updates and Patch Management: Ensuring all systems, libraries, and applications are kept up-to-date to protect against known vulnerabilities.

3.3. The Critical Role of Data Protection Impact Assessments (DPIAs) in High-Risk Environments

The Data Protection Impact Assessment (DPIA) is the central risk management tool mandated by the GDPR for high-risk processing activities. For a telemedicine service, which typically involves large-scale processing of sensitive health data, often using novel technologies, conducting a DPIA is not optional: it is a legal requirement.

When is a DPIA Mandatory? Article 35 of the GDPR requires a DPIA to be conducted before any processing that is likely to result in a high risk to the rights and freedoms of individuals. Article 35(3) and supervisory authority guidance, such as the ICO's list of processing likely to result in high risk, identify activities that require a DPIA, including:

Processing special category data (e.g., health data) on a large scale.

Systematic monitoring of individuals (e.g., through wearable devices connected to the platform).

Using new or innovative technologies (e.g., AI-powered chatbots or diagnostic tools).

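Failing to conduct a required DPIA is an infringement of Article 35 and can attract fines of up to €10 million or 2% of total worldwide annual turnover, whichever is higher, under the EU GDPR (£8.7 million or 2% under the UK GDPR).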

The DPIA Process: A DPIA is a systematic process designed to describe the processing, assess its necessity and proportionality, and help manage the risks to individuals' data protection rights. While templates are available from regulators like the ICO and NHS England, the core process involves several key steps:

Identify the Need and Purpose: Clearly define the project's aims and why a DPIA is necessary.

Describe the Processing: Provide a detailed description of the data flows. This should cover the nature (how data is collected, used, stored), scope (what data is processed, volume, duration), context (relationship with individuals, their expectations), and purpose of the processing. Data flow diagrams are highly effective here.

Consultation: Consult with relevant stakeholders. This must include the organization's DPO, where one has been designated, and may also involve IT security experts, legal counsel, and, where appropriate, the individuals (patients) whose data will be processed.

Assess Necessity and Proportionality: Justify why the processing is necessary to achieve the stated purpose and demonstrate that it is a proportionate measure. Consider if there are less intrusive ways to achieve the same outcome.

Identify and Assess Risks: Systematically identify the potential risks to individuals' rights and freedoms (e.g., risk of unauthorized access, risk of discrimination from a breach, risk of harm from inaccurate data). Assess the likelihood and severity of each risk.

Identify Mitigating Measures: For each identified risk, detail the specific technical and organizational measures that will be implemented to reduce or eliminate it (e.g., implementing end-to-end encryption to mitigate the risk of interception).

Sign-off and Record Outcomes: The advice of the DPO (where one has been designated) must be sought and recorded, and the relevant business owner must formally sign off on the assessment, accepting any identified residual risks. If high risks remain that cannot be mitigated, the organization must consult the supervisory authority (Article 36) before commencing the processing.
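The risk identification, mitigation and sign-off steps can be sketched as a small risk register. This Python sketch assumes a three-point likelihood scale (remote, possible, probable) and a three-point severity scale (minimal, significant, severe), similar to those in the ICO's sample DPIA template; the numeric scores and the thresholds for low, medium and high are illustrative assumptions, since each organization defines its own scoring scheme.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative scales and scores; organizations set their own scheme.
LIKELIHOOD = {"remote": 1, "possible": 2, "probable": 3}
SEVERITY = {"minimal": 1, "significant": 2, "severe": 3}


def rate(likelihood: str, severity: str) -> str:
    """Combine likelihood and severity into an overall risk level (assumed thresholds)."""
    score = LIKELIHOOD[likelihood] * SEVERITY[severity]
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


@dataclass
class Risk:
    description: str
    likelihood: str
    severity: str
    mitigation: Optional[str] = None
    # Likelihood and severity re-assessed after the mitigation is applied.
    residual_likelihood: Optional[str] = None
    residual_severity: Optional[str] = None

    def inherent(self) -> str:
        return rate(self.likelihood, self.severity)

    def residual(self) -> str:
        if self.residual_likelihood is None or self.residual_severity is None:
            return self.inherent()  # no mitigation assessed yet
        return rate(self.residual_likelihood, self.residual_severity)


def needs_prior_consultation(risks: list) -> bool:
    """Article 36: consult the supervisory authority if any risk stays high after mitigation."""
    return any(r.residual() == "high" for r in risks)
```

The `needs_prior_consultation` check mirrors the final step above: a risk that remains high after mitigation triggers consultation with the supervisory authority before processing starts.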

The DPIA as a Living Document: A DPIA is not a one-off, "check-the-box" exercise. It is a dynamic tool that must be reviewed and updated whenever there is a significant change to the nature, scope, context, or purposes of the processing, such as the introduction of a new AI feature or a change in data sharing partners.

While often viewed as a compliance burden, the DPIA process can also be a strategic asset. By systematically identifying, assessing, and mitigating privacy risks, an organization demonstrates a tangible commitment to ethical data stewardship. Publishing a clear, plain-language summary of a DPIA's findings and mitigations can serve as an effective transparency tool, directly addressing the privacy concerns that often make patients hesitant to adopt digital health technologies. This can build significant trust and differentiate a service in a competitive market.

Part IV: Navigating the Data Ecosystem: Roles, Responsibilities, and Liabilities

In the complex digital health ecosystem, data rarely stays within a single organization. It flows between healthcare providers, software vendors, cloud platforms, and other third parties. The GDPR establishes a clear, albeit challenging, framework for assigning roles, responsibilities, and liabilities in this data processing chain. Correctly identifying whether an organization is a "data controller," "data processor," or "joint controller" is a critical first step, as this determination dictates its legal obligations and potential exposure to fines.

4.1. Defining Roles: Data Controller, Processor, and Joint Controllership in Telemedicine

The roles of controller and processor are not determined by the titles used in a contract, but by the factual reality of which entity exercises decision-making power over the data processing.

Data Controller: The controller is the natural or legal person which, alone or jointly with others, determines the purposes and means of the processing of personal data. In essence, the controller is the primary decision-maker, deciding why and how data should be processed.

In Telemedicine: A hospital, clinic, or individual practitioner providing care to a patient through a digital platform is almost always a data controller. They have the direct relationship with the patient and determine the purpose of the data collection: to provide a medical diagnosis and treatment.

Data Processor: The processor is a separate entity that processes personal data on behalf of the controller and strictly according to the controller's documented instructions. The processor does not have independent decision-making power over the purpose of the processing.

In Telemedicine: A cloud infrastructure provider (e.g., Amazon Web Services, Microsoft Azure) that simply hosts the telemedicine platform's data is a classic example of a processor. A software vendor providing a "white-label" chat application that it does not analyze or use for its own purposes would also be a processor.

The Critical Distinction and the "Dual-Role" Risk: The line between processor and controller can blur, creating a significant compliance risk. If an entity engaged as a processor begins to use the data for its own purposes, it steps outside the controller's instructions and becomes a controller in its own right for that specific processing activity.

This "dual-role" scenario is common in the health-tech SaaS industry. For example, a telemedicine platform vendor might be a processor when delivering the core service to the clinic (the controller). However, if that same vendor aggregates and anonymizes patient chat data to train its own AI symptom checker or to publish industry trend reports, it is determining a new purpose and means of processing. For this secondary activity, the vendor is acting as a data controller and must independently fulfill all controller obligations (e.g., establishing a lawful basis, conducting a DPIA). This creates a risk of "compliance creep," where a seemingly simple product improvement decision by an engineering team can inadvertently shift the company's fundamental legal status and trigger a host of new, unfunded compliance duties.

Joint Controllership: When two or more entities jointly determine the purposes and means of processing, they are considered joint controllers. This requires a transparent arrangement between them that sets out their respective responsibilities for GDPR compliance. This might occur in a research partnership where a university and a telemedicine company collaborate to develop and validate a new diagnostic algorithm using patient data.

The controller/processor distinction is often the greatest point of legal and commercial friction between health-tech companies and their healthcare clients. Providers, as controllers, seek to transfer as much liability as possible to their vendors. Vendors, wishing to remain processors, want to strictly limit their role to following instructions. This tension can lead to protracted contract negotiations. A sophisticated approach is required, often involving agreements that clearly delineate different processing activities and assign the correct role (Controller, Processor, or Joint Controller) to each party for each specific activity, rather than relying on a single, one-size-fits-all DPA.
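The per-activity approach can be sketched as a small role register. In this Python sketch, which is an illustrative simplification, an organization's role for a given activity is derived from who in fact determines the purposes and essential means of that activity; the organization names (`ClinicA`, `VendorCo`, `University`) are hypothetical. It assumes the organization actually processes the data in each activity listed, and a real determination would be made on the full facts, usually with legal advice.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    CONTROLLER = "controller"
    JOINT_CONTROLLER = "joint controller"
    PROCESSOR = "processor"


@dataclass(frozen=True)
class Activity:
    name: str
    # Organizations that, in fact, determine the purposes and essential means.
    decided_by: frozenset


def role_of(org: str, activity: Activity) -> Role:
    """Role of `org` for one activity, assuming `org` processes the data in it."""
    if org in activity.decided_by:
        return Role.JOINT_CONTROLLER if len(activity.decided_by) > 1 else Role.CONTROLLER
    # Processing without deciding purposes or means: acting on another's instructions.
    return Role.PROCESSOR


def role_register(org: str, activities: list) -> dict:
    """One role per activity, rather than one role for the whole relationship."""
    return {a.name: role_of(org, a).value for a in activities}
```

Run for `VendorCo` across core service delivery, training its own symptom checker, and a research collaboration, the register shows the "dual-role" pattern described above: processor for the first, controller for the second, joint controller for the third.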

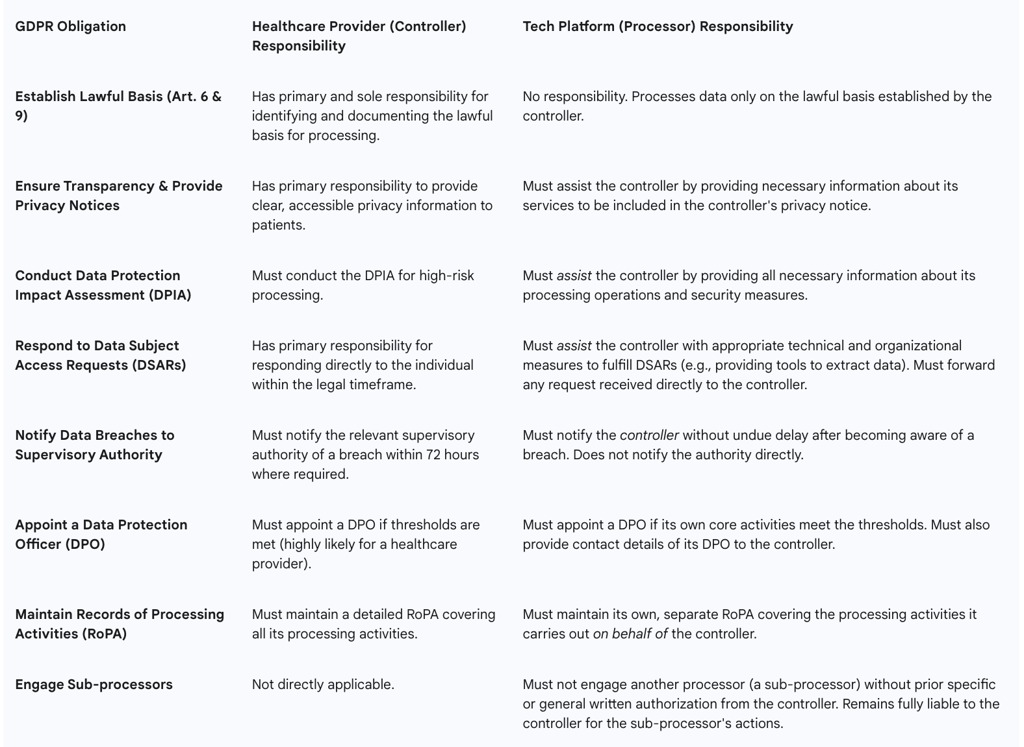

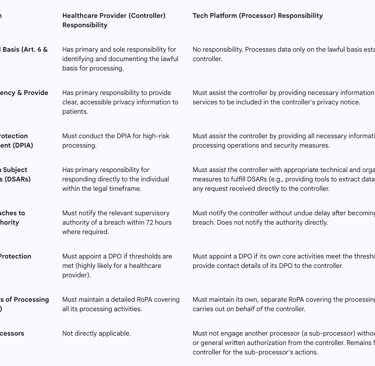

Table 3: Controller vs. Processor Responsibilities in a Telemedicine Partnership

4.2. Vendor Management and the Imperative of Robust Data Processing Agreements (DPAs)

Under the GDPR, a data controller is not absolved of responsibility simply by outsourcing a processing activity. The controller remains ultimately accountable for the protection of the data and must exercise careful due diligence when selecting and managing its vendors.

Controller's Due Diligence: A controller must only use processors that provide "sufficient guarantees" to implement appropriate technical and organizational measures in a manner that will meet the requirements of the GDPR. This requires a thorough vetting process before engaging any vendor, assessing their security posture, compliance certifications, and data protection expertise.

The Data Processing Agreement (DPA): The relationship between a controller and a processor must be governed by a legally binding contract, commonly known as a Data Processing Agreement or DPA. Article 28(3) of the GDPR specifies the minimum clauses that this agreement must contain. These include stipulating:

The subject matter, duration, nature, and purpose of the processing.

The types of personal data and categories of data subjects involved.

The obligation for the processor to act only on the controller's documented instructions.

A duty of confidentiality for all personnel authorized to process the data.

The requirement to implement appropriate security measures (as per Article 32).

Strict conditions for engaging sub-processors, including prior specific or general written authorization from the controller.

The processor's duty to assist the controller in responding to data subject rights requests.

The processor's duty to assist the controller in relation to data breach notifications and DPIAs.

The requirement for the processor, at the controller's choice, to delete or return all personal data at the end of the service contract, and to delete existing copies unless the law requires their retention.

The obligation for the processor to make available to the controller all information necessary to demonstrate compliance and to allow for and contribute to audits and inspections.
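A draft DPA can be checked against these minimum terms with a simple lookup. The clause keys in this Python sketch are hypothetical labels a reviewer might assign while reading a contract; the values map them to Article 28(3), where point (d) on sub-processors cross-refers to the conditions in Article 28(2) and (4).

```python
# Minimum content of a controller-processor contract under Article 28(3) GDPR.
# Keys are hypothetical reviewer labels; values are the provisions they cover.
ARTICLE_28_3_TERMS = {
    "processing_details": "28(3) preamble: subject matter, duration, nature, purpose, data types, data subjects",
    "documented_instructions": "28(3)(a)",
    "confidentiality": "28(3)(b)",
    "security_measures": "28(3)(c)",
    "sub_processors": "28(3)(d)",
    "data_subject_rights_assistance": "28(3)(e)",
    "breach_and_dpia_assistance": "28(3)(f)",
    "deletion_or_return": "28(3)(g)",
    "audits_and_information": "28(3)(h)",
}


def missing_terms(clauses_found: set) -> list:
    """Return the Article 28(3) references for required terms not found in a draft DPA."""
    return [ref for key, ref in ARTICLE_28_3_TERMS.items() if key not in clauses_found]
```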

This DPA is a critical legal instrument that formalizes the data protection responsibilities of both parties and is a mandatory component of any compliant vendor relationship in the telemedicine supply chain.

Part V: International Data Transfers in a Post-Brexit Landscape

The global nature of digital services, particularly those reliant on cloud infrastructure, means that personal data is frequently transferred across international borders. The GDPR and its UK equivalent impose strict rules on these transfers to ensure that the high level of data protection afforded to individuals is not undermined when their data leaves their home jurisdiction. For telemedicine platforms serving an international patient base or using global technology vendors, navigating these rules is a critical compliance challenge.

5.1. The Framework for International Data Transfers under UK GDPR

The fundamental principle governing international transfers is one of restriction. Under both the EU and UK GDPR, personal data cannot be transferred to a country outside of its originating legal area (the European Economic Area for the EU GDPR, and the UK for the UK GDPR), known as a "third country," unless that transfer is covered by a specific legal safeguard. This restriction applies even if the data is being transferred within the same corporate group, for example, from a UK entity to its own servers located in the United States.

The Post-Brexit Landscape: Following its departure from the European Union, the UK incorporated the EU GDPR into its domestic law, creating the "UK GDPR". The core principles and rules governing international data transfers remain largely identical. However, the UK now operates as an independent data protection jurisdiction. This means the UK government makes its own adequacy decisions regarding third countries and has issued its own legal instruments for data transfers, which must be used for data originating in the UK.

Data Flows Between the UK and the EU/EEA:

Transfers from the EU to the UK: In a crucial development for UK businesses, the European Commission adopted an "adequacy decision" for the United Kingdom in June 2021. This decision recognizes that the UK's data protection framework provides a level of protection that is "essentially equivalent" to that of the EU. As a result, personal data can flow freely from the EU and EEA to the UK without the need for additional safeguards. This is vital for any UK-based telemedicine provider that serves patients in the EU. However, this adequacy decision is not permanent and is subject to periodic review.

Transfers from the UK to the EU/EEA: The UK government has, in turn, recognized all EU and EEA member states as providing an adequate level of data protection. This allows for the unrestricted flow of personal data from the UK to the EU/EEA.

5.2. Mechanisms for Lawful Transfer: Adequacy, Standard Contractual Clauses (SCCs), and Binding Corporate Rules (BCRs)

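Adequacy decisions cover transfers to approved destinations. For transfers from the UK to third countries that have not been deemed adequate (such as India or China, or the United States where the recipient is not certified under the UK Extension to the EU-US Data Privacy Framework), an appropriate safeguard such as SCCs or BCRs must be put in place, unless one of the limited derogations in Article 49 applies. The main mechanisms are as follows.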

Adequacy Decisions (Article 45): The UK Secretary of State has the power to issue "adequacy regulations" for third countries, territories, or specific sectors within a country. If a country is deemed adequate, data can be transferred there as if it were within the UK. The UK has recognized the adequacy of a number of jurisdictions, including the EU/EEA, Canada (for commercial organizations), Switzerland, and Japan. Crucially, it has also established a "data bridge" with the United States, granting adequacy to US organizations certified under the UK Extension to the EU-US Data Privacy Framework.

Standard Contractual Clauses (SCCs) (Article 46): In the absence of an adequacy decision, SCCs are the most widely used mechanism for international data transfers. These are standardized, pre-approved model contracts that impose GDPR-level data protection obligations on the data importer in the third country.

The Impact of the Schrems II Judgment: A landmark ruling by the Court of Justice of the European Union (Schrems II) established that simply signing SCCs is not sufficient. The data exporter (the UK telemedicine provider) has an obligation to conduct a case-by-case risk assessment, now commonly known as a Transfer Impact Assessment (TIA) or Transfer Risk Assessment (TRA). This assessment must evaluate whether the laws and practices of the destination country—particularly concerning government access to data for surveillance purposes—would prevent the data importer from complying with its obligations under the SCCs. If the TIA identifies risks, the exporter must implement "supplementary measures" (such as strong, end-to-end encryption where the importer cannot access the keys) to mitigate those risks. If the risks cannot be mitigated, the transfer is unlawful. This requirement transforms the transfer process from a simple contractual exercise into a complex geopolitical risk assessment, placing a significant due diligence burden on the exporter.

The UK's Transfer Instruments: The UK has issued its own set of transfer tools to be used for restricted transfers under the UK GDPR: the International Data Transfer Agreement (IDTA), which is a standalone agreement, and the International Data Transfer Addendum (the Addendum), which can be appended to the EU's SCCs to make them valid for UK data transfers.

Binding Corporate Rules (BCRs) (Article 47): BCRs are a comprehensive set of internal data protection policies and procedures adopted by a multinational corporate group. Once approved by a data protection authority, BCRs allow for the free flow of personal data within that group, including to entities in non-adequate third countries. BCRs are considered the "gold standard" for intragroup transfers as they demonstrate a deep, structural commitment to data protection. However, the process of drafting, implementing, and gaining approval for BCRs is lengthy, complex, and expensive, making them a viable option primarily for large, mature multinational organizations.
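The order in which a UK exporter might work through these mechanisms can be sketched as a decision function. This Python sketch is a simplification under stated assumptions: the `UK_ADEQUATE` set contains only the examples named above, the flags on the hypothetical `Transfer` type stand in for the outcome of real due diligence and a transfer risk assessment, and the Article 49 derogations are omitted.

```python
from dataclasses import dataclass

# Only the examples named in the text; check current ICO guidance for the full list.
# (Canada's UK adequacy covers commercial organizations only.)
UK_ADEQUATE = {"EU/EEA", "Switzerland", "Japan", "Canada"}


@dataclass
class Transfer:
    destination: str
    uk_dpf_certified: bool = False   # US importer certified under the UK Extension to the EU-US DPF
    intragroup_bcrs: bool = False    # transfer covered by approved Binding Corporate Rules
    tra_found_risk: bool = False     # risk assessment found problematic local laws or practices
    supplementary_measures_effective: bool = False  # e.g. encryption with keys held only by the exporter


def transfer_basis(t: Transfer) -> str:
    """Return the mechanism a UK exporter could rely on, or flag the transfer as unlawful."""
    if t.destination in UK_ADEQUATE:
        return "adequacy regulations"
    if t.destination == "United States" and t.uk_dpf_certified:
        return "UK-US data bridge"
    # Appropriate safeguards: BCRs within a group, otherwise the IDTA or the Addendum to the EU SCCs.
    mechanism = "BCRs" if t.intragroup_bcrs else "IDTA or Addendum to EU SCCs"
    # Schrems II: the safeguard alone is not enough if the risk assessment identifies problems.
    if not t.tra_found_risk:
        return mechanism
    if t.supplementary_measures_effective:
        return mechanism + " with supplementary measures"
    return "unlawful: do not start, or suspend, the transfer"
```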

5.3. UK-EU Data Flows: The Adequacy Decision and Strategic Considerations

The current free flow of data between the UK and the EU is the bedrock of many digital health services operating across these jurisdictions. However, its continuity is not guaranteed.

The Precarious Nature of UK Adequacy: The EU's adequacy decision for the UK contains a "sunset clause," meaning it will automatically expire after a set period unless it is renewed. The decision is also subject to ongoing review by the European Commission. The UK's legislative path is diverging from the EU's with the introduction of the new Data (Use and Access) Act, which relaxes certain data protection rules. This divergence creates a tangible political and legal risk that the European Commission could, in a future review, conclude that the UK no longer provides an "essentially equivalent" level of protection and revoke the adequacy decision. Telemedicine providers must therefore engage in strategic contingency planning, such as having SCCs prepared to be executed with their EU partners and clients should the adequacy decision fall away.

Operating with "Compliance Duality": This strategic uncertainty creates a hidden cost for the UK digital health sector. UK-based telemedicine companies must operate with a form of "compliance duality." For their UK-only operations, they can align with the potentially more flexible UK GDPR as amended by the Data (Use and Access) Act. However, to future-proof their business and maintain seamless operations with the EU, they must ensure their architecture, policies, and contracts remain robust enough to meet the stricter standards of the EU GDPR at a moment's notice. This may involve maintaining a "shadow compliance" with EU standards as a business continuity measure, adding a layer of complexity and cost to their governance framework.

This complex environment also means that a UK telemedicine provider using a US-based cloud service that is not certified under the UK Extension to the EU-US Data Privacy Framework, and which therefore relies on the IDTA or the Addendum, must, in effect, become an expert on US surveillance law (such as the Foreign Intelligence Surveillance Act) to conduct its TIA. It must assess whether US government agencies could compel the cloud provider to hand over UK patient data in a manner that violates the principles of the UK GDPR. This shifts the compliance burden dramatically; the provider is no longer just signing a contract but is actively assessing and taking responsibility for the legal regime of another sovereign nation.

Part VI: The Next Frontier: AI, Ethics, and Future Regulatory Horizons

The integration of Artificial Intelligence (AI) into chat-based healthcare and telemedicine is rapidly moving from a theoretical possibility to a practical reality. AI-powered chatbots, diagnostic aids, and clinical decision support systems promise to enhance efficiency and improve patient outcomes. However, this technological frontier introduces a new and complex layer of data protection challenges. Navigating this landscape requires a deep understanding of how existing GDPR principles apply to AI, a proactive approach to confronting the profound ethical risks of algorithmic bias, and a commitment to the emerging mandate for explainability.

6.1. Integrating Artificial Intelligence: GDPR Implications for AI-Powered Chatbots

The use of AI to process personal health data is subject to all the core principles of the GDPR, but it places particular strain on several key provisions.

Lawful Basis for Training and Deployment: The lifecycle of an AI model involves distinct processing activities, each requiring a lawful basis. Training an AI model on existing patient data is a separate purpose from deploying that model to provide care to a new patient. For training, which is a secondary use of data, relying on "legitimate interests" under Article 6 is a possibility, but the ICO has made it clear this requires a rigorous, documented three-part test: identifying a legitimate interest, demonstrating that the processing is necessary, and balancing this against the individual's rights and interests. Because health data is special category data, any Article 6 basis must also be paired with a separate Article 9 condition. Given this sensitivity, obtaining specific, explicit consent (Article 9(2)(a)) is often the more robust and appropriate path for training purposes.

Transparency and Information Rights (Articles 13 & 14): The principle of transparency is paramount when AI is involved. Patients should be clearly and proactively informed that they are interacting with an AI system. This information should include the purpose of the AI (e.g., "This AI chatbot will ask you questions to help summarize your symptoms for the doctor") and the types of data it processes. Where the system makes automated decisions of the kind covered by Article 22, Articles 13(2)(f) and 14(2)(g) also require "meaningful information about the logic involved," as well as the significance and envisaged consequences of the processing for the patient.

Rights Related to Automated Decision-Making (Article 22): This article provides a critical safeguard against the risks of purely automated judgments. Individuals have the right not to be subject to a decision based solely on automated processing, without meaningful human involvement, that has a "legal or similarly significant effect" on them (e.g., an AI automatically making a diagnosis, triaging a patient to a lower-priority queue, or denying access to a service). Where health data is involved, such decisions are permitted only in narrow circumstances, such as with the individual's explicit consent (Article 22(4)), and even then the individual has the right to obtain human intervention, to express their point of view, and to contest the decision. Telemedicine platforms using such AI must build user-friendly mechanisms to facilitate these rights.
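A human-in-the-loop gate for significant automated decisions might look like the following Python sketch. The `TriageDecision` type, its `significant_effect` flag and the reviewer identifiers are hypothetical. Deciding whether an effect is "similarly significant" is a legal judgment that has to be made during design and recorded in the DPIA, not inferred by the code.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TriageDecision:
    patient_ref: str
    outcome: str                 # e.g. "routine queue", "urgent queue"
    significant_effect: bool     # legal or similarly significant effect on the patient?
    reviewed_by: Optional[str] = None
    contested: bool = False
    patient_comments: List[str] = field(default_factory=list)


class HumanReviewRequired(Exception):
    pass


def finalize(decision: TriageDecision, reviewer: Optional[str] = None) -> TriageDecision:
    """Block significant or contested AI decisions until a named human has reviewed them."""
    if (decision.significant_effect or decision.contested) and reviewer is None:
        raise HumanReviewRequired(f"{decision.patient_ref}: clinician review needed")
    decision.reviewed_by = reviewer
    return decision


def contest(decision: TriageDecision, comment: str) -> TriageDecision:
    """Record the patient's point of view and reopen the decision for human review."""
    decision.patient_comments.append(comment)
    decision.contested = True
    decision.reviewed_by = None
    return decision
```

Code can only enforce that a named reviewer signs off. Whether that review is meaningful rather than a rubber stamp depends on the reviewer having the authority, time and information to change the outcome.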

Data Minimisation vs. AI Accuracy: A fundamental tension exists between the GDPR's principle of data minimisation and the nature of many machine learning models. AI systems, particularly deep learning models, often achieve higher accuracy and performance when trained on vast and comprehensive datasets. This operational need for more data directly conflicts with the legal principle of collecting only the data that is strictly necessary for a specific purpose. This conflict is not easily resolved and forces organizations into a complex ethical and legal balancing act. They must be able to justify, through a DPIA, why the collection of a larger dataset is a necessary and proportionate measure to achieve a sufficiently accurate and safe clinical outcome. This requires a meticulous, documented assessment of the trade-off between privacy and clinical utility.
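One practical way to hold the line on data minimisation is an allowlist: only fields with a documented DPIA justification reach the training pipeline, and some fields are generalized before use. This Python sketch assumes hypothetical field names and justifications.

```python
# Hypothetical allowlist: every field that may enter the training set is mapped
# to the justification recorded for it in the DPIA. Anything else is dropped.
TRAINING_FIELD_JUSTIFICATION = {
    "symptom_text": "core model input",
    "age_band": "needed to test performance across age groups",
    "triage_outcome": "training label",
}


def generalize_age(age: int, band: int = 10) -> str:
    """Replace an exact age with a band, e.g. 34 -> '30-39'."""
    low = (age // band) * band
    return f"{low}-{low + band - 1}"


def minimize(record: dict) -> dict:
    """Derive age_band from age if present, then keep only justified fields."""
    record = dict(record)  # do not mutate the caller's record
    if "age" in record:
        record["age_band"] = generalize_age(record["age"])
    return {k: v for k, v in record.items() if k in TRAINING_FIELD_JUSTIFICATION}
```

Tying each retained field to a written justification makes the trade-off between privacy and clinical utility auditable: adding a field to the training set means adding, and defending, a new entry.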

6.2. Confronting Algorithmic Bias in Healthcare AI: Risks and Mitigation

One of the most profound ethical and legal challenges of AI in healthcare is the risk of algorithmic bias. A biased AI system can perpetuate and even amplify existing health inequalities, leading to discriminatory and harmful outcomes for certain patient populations.

Sources of Bias: Algorithmic bias is not a spontaneous technical glitch; it is a systemic issue that is typically inherited from biased data or flawed model design.

Data Bias: This is the most common source. If the data used to train an AI model is not representative of the diverse patient population it will be used on, the model is likely to perform less accurately for underrepresented groups. There are numerous real-world examples of this causing harm:

Skin Cancer Detection: AI models for diagnosing skin cancer have shown lower accuracy for individuals with darker skin tones because the training datasets consist overwhelmingly of images of light skin.

Healthcare Resource Allocation: A widely used commercial algorithm in the US was found to be racially biased because it used historical healthcare cost as a proxy for health need. Since less money is historically spent on Black patients, the algorithm systematically underestimated their health needs, leading to healthier white patients being prioritized for care management programs over sicker Black patients.

Medical Devices: Pulse oximeters have been shown to be less accurate on darker skin, potentially leading to undetected hypoxemia in Black patients. This biased data, if used to train an AI, would build this inequity directly into the model.

Algorithmic and Human Bias: The choices made by developers in designing, building, and testing the model can also introduce or amplify bias. This can be influenced by the unconscious biases of the development team itself.

Legal Implications under GDPR: A biased AI that results in a lower standard of care or discriminatory outcomes for individuals based on their race, gender, or other characteristics is likely to breach the GDPR's core principle of "fairness."

Mitigation Strategies: Addressing bias requires a holistic, multi-faceted approach that extends beyond technical fixes:

Data Governance and Diversity: Actively working to collect, curate, and use diverse and representative training datasets that reflect the target patient population.

Fairness Audits and Impact Assessments: Before deploying an AI model, and on a regular basis thereafter, organizations must conduct rigorous testing of its performance across different demographic subgroups (e.g., by race, gender, age, socioeconomic status) to identify and measure any performance disparities.

Technical Bias Mitigation: Employing advanced statistical and machine learning techniques to mitigate bias, which can be applied during data pre-processing, during the model training process (in-processing), or by adjusting the model's outputs (post-processing).

Transparency and Documentation: Being transparent with clinicians and patients about the known limitations of the AI model, including the demographic characteristics of its training data and any known performance disparities.

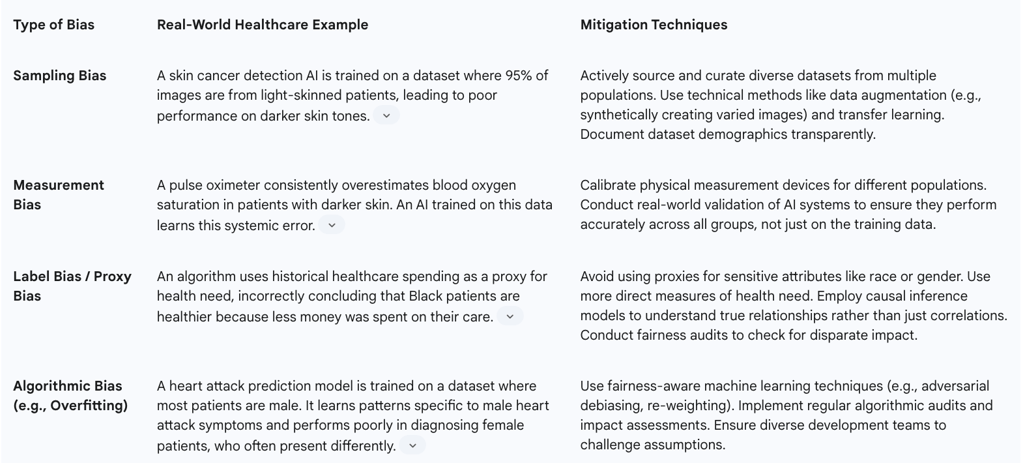

Table 4: Algorithmic Bias Mitigation Strategies in Healthcare AI
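A subgroup performance check of the kind described under fairness audits can be sketched in a few lines of Python. This sketch assumes binary labels (1 = condition present) and measures sensitivity (true-positive rate) per group, since missed diagnoses are usually the most harmful failure in screening. The 0.05 tolerance is a hypothetical threshold, and a real audit would examine several metrics and report confidence intervals, especially for small subgroups.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, y_true, y_pred) with 1 = condition present.

    Returns the true-positive rate for each group that has at least one positive case.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


def audit(records, tolerance=0.05):
    """Flag the model if the sensitivity gap between best and worst group exceeds tolerance."""
    rates = sensitivity_by_group(records)
    gap = max(rates.values()) - min(rates.values())
    return {"sensitivity": rates, "gap": gap, "flagged": gap > tolerance}
```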

6.3. The Mandate for Explainability (XAI) in Clinical Decision Support Systems

Many advanced AI models, such as deep neural networks, operate as "black boxes," making it difficult for humans to understand how they arrive at a particular prediction or recommendation. This lack of transparency poses a significant barrier to the safe and ethical deployment of AI in high-stakes environments like healthcare. Explainable AI (XAI) refers to the set of methods and techniques used to make these models more understandable.

The Mandate for Explainability:

Building Trust and Facilitating Adoption: Clinicians are professionals who are trained to exercise their own judgment. They are unlikely to trust, and therefore unlikely to use, an AI tool if it provides a recommendation without any supporting rationale. Explainability is a prerequisite for clinical adoption.

Ensuring Patient Safety and Accountability: If an AI model makes an erroneous recommendation, explainability is crucial for understanding the cause of the error, correcting the system, and establishing accountability for any harm caused.

Meeting GDPR Requirements: While the GDPR does not contain an explicit "right to explanation" in all circumstances, the principles of transparency and fairness, along with the rights related to automated decision-making (Article 22), create a de facto requirement for a meaningful degree of explainability.

Practical Approaches to XAI:

Local vs. Global Explanations: It is important to distinguish between explaining the entire model's logic (global explainability) and explaining a single prediction (local explainability). In a clinical setting, local explanations are often more valuable. A doctor does not necessarily need to know how the entire AI works, but they do need to know why it is flagging this specific patient's X-ray as high-risk.

Interpretable Models ("Glass-Box"): One approach is to use models that are inherently simpler and more interpretable, such as decision trees or logistic regression models. These models may sometimes come with a trade-off in predictive accuracy.

Post-Hoc Explanation Methods: Another approach is to use complex, high-performance black-box models and then apply a separate XAI technique to provide explanations. Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) can highlight which features in the input data (e.g., which parts of an image, which words in a chat) were most influential in the model's decision.
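For an inherently interpretable model such as logistic regression, a local explanation can be read directly from the model: each feature's contribution to this patient's log-odds is its coefficient multiplied by the feature value. The Python sketch below uses hypothetical, hand-set coefficients purely for illustration (a real model would be trained and clinically validated). The contributions are measured against an all-zero baseline, so they are not SHAP values, although they illustrate the same idea of attributing a single prediction to its inputs.

```python
import math

# Hypothetical, hand-set model for illustration only.
INTERCEPT = -3.0
COEFFICIENTS = {"fever": 1.2, "chest_pain": 2.0, "age_over_65": 0.8, "smoker": 0.5}


def log_odds(features: dict) -> float:
    return INTERCEPT + sum(w * features.get(name, 0) for name, w in COEFFICIENTS.items())


def predict_proba(features: dict) -> float:
    """Logistic regression: probability = sigmoid(log-odds)."""
    return 1.0 / (1.0 + math.exp(-log_odds(features)))


def explain(features: dict) -> list:
    """Per-feature contributions to this patient's log-odds, largest first.

    For a linear model these contributions plus the intercept sum to the
    log-odds (up to floating-point rounding), so the explanation is faithful.
    """
    contributions = [(name, w * features.get(name, 0)) for name, w in COEFFICIENTS.items()]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)
```

For a patient with fever and chest pain who smokes, `explain` ranks chest pain first, then fever, then smoking, which is the kind of case-specific rationale a clinician can check against their own judgment.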

6.4. The UK Data (Use and Access) Act: A New Chapter in UK Data Protection

The UK's data protection landscape is evolving. The Data (Use and Access) Act 2025, which received Royal Assent in June 2025, introduces significant amendments to the UK GDPR. The Act's stated aim is to reduce the compliance burden on businesses and unlock the economic benefits of data, but in doing so, it creates a degree of divergence from the EU GDPR.

Key Changes Relevant to Health-Tech:

Scientific Research: The Act significantly broadens the definition of "scientific research" to explicitly include commercial and privately funded activities. It also relaxes consent requirements, allowing for consent to be obtained for broad areas of research where specific purposes cannot be fully identified at the time of data collection. This change is designed to make the UK a more attractive environment for life sciences and health-tech R&D.

Legitimate Interests: A new category of "recognised legitimate interests" is created. For processing activities falling under this category (e.g., for purposes of national security or preventing crime), organizations will no longer need to conduct the traditional balancing test, simplifying the process.

Data Subject Access Requests (DSARs): The Act confirms that organizations are only required to conduct a "reasonable and proportionate" search for the requested information. The threshold for refusing a DSAR remains "manifestly unfounded or excessive"; the lower "vexatious or excessive" standard proposed in the earlier Data Protection and Digital Information Bill was not carried into the Act.

Accountability Mechanisms: The earlier Data Protection and Digital Information Bill proposed replacing the mandatory Data Protection Officer (DPO) with a "Senior Responsible Individual" (SRI) and relaxing the requirements for Records of Processing Activities (RoPAs). These proposals were not carried into the Data (Use and Access) Act, so the existing DPO and RoPA obligations under the UK GDPR continue to apply.

Health Information Standards: The Act grants the Secretary of State for Health and Social Care the power to create legally binding information standards for IT systems used in the NHS and private healthcare in England, a move aimed at improving data interoperability.

The UK's Data (Use and Access) Act represents a calculated strategic gamble. By creating a more permissive data protection regime, particularly for scientific research, the government aims to stimulate innovation and establish the UK as a global leader in health-tech and life sciences. However, this very divergence from the EU GDPR places the UK's adequacy status at considerable risk. This creates a potential "two-tier" compliance reality for UK health-tech companies, who may need to operate under the more flexible UK rules for domestic research while maintaining a stricter, EU GDPR-aligned framework for any collaboration involving EU partners or data. This could bifurcate the research landscape and introduce significant operational complexity for any UK-based company with international ambitions.

Part VII: Strategic Recommendations and Compliance Roadmap

Achieving and maintaining GDPR compliance in the fast-evolving world of telemedicine is a continuous journey, not a final destination. It requires more than a legalistic, check-box approach; it demands a strategic commitment to data protection that is woven into the organization's culture, technology, and business processes. The following recommendations provide a roadmap for building a sustainable compliance program that not only mitigates legal and financial risk but also fosters the patient trust that is essential for success in the digital health market.

7.1. A Best-Practice Checklist for End-to-End GDPR Compliance in Telemedicine

This checklist synthesizes the core requirements discussed throughout this report into a practical, actionable framework. Organizations can use it to audit their existing practices or to guide the development of a new compliance program from the ground up.

1. Foundational Governance & Accountability:

[ ] Determine GDPR Applicability: Formally document whether and how the UK and/or EU GDPR applies to your services.

[ ] Appoint a DPO/SRI: Appoint a Data Protection Officer (if required under EU GDPR) or a Senior Responsible Individual (under UK law) with the necessary expertise and independence.

[ ] Data Inventory & RoPA: Create and maintain a comprehensive data inventory and a formal Record of Processing Activities (RoPA) detailing all personal data processing.

[ ] Develop Core Policies: Establish and approve key data protection policies, including a general data protection policy, a data retention policy, and an information security policy.

2. Lawfulness, Fairness & Transparency:

[ ] Map Lawful Bases: For every processing activity, identify and document a valid lawful basis under Article 6 and, for health data, a specific condition under Article 9.

[ ] Craft Clear Privacy Notices: Develop layered, clear, and easily accessible privacy notices that inform patients about how their data is used, their rights, and who to contact. Avoid legal jargon.

[ ] Implement Granular Consent Mechanisms: Where relying on consent, ensure the process is explicit, granular, unbundled, and easy to withdraw. Maintain a verifiable audit trail of all consents obtained.

3. Data Security & Privacy by Design:

[ ] Conduct DPIAs: Conduct a Data Protection Impact Assessment (DPIA) for all high-risk processing activities (including all core telemedicine services and any AI implementation) before processing begins.

[ ] Embed Privacy by Design: Integrate data protection principles into the entire development lifecycle of your platform and services.

[ ] Implement Robust Security Measures:

[ ] Ensure end-to-end encryption for all data in transit.

[ ] Ensure strong encryption for all data at rest.

[ ] Implement strict role-based access controls (RBAC) and the principle of least privilege.

[ ] Maintain comprehensive and immutable audit logs for all access to personal data.

[ ] Conduct regular penetration testing and vulnerability assessments.

4. Data Subject Rights & Breach Management:

[ ] Establish DSAR Procedures: Develop and document clear internal procedures for receiving, verifying, and responding to Data Subject Access Requests (DSARs) within the one-month time limit, which can be extended by two further months for complex or numerous requests.

[ ] Build User-Facing Tools: Where feasible, build self-service tools into your platform to allow users to exercise their rights (e.g., access, rectify, or delete their data) directly.

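[ ] Develop an Incident Response Plan: Create a detailed data breach incident response plan that outlines roles, responsibilities, and procedures for containment, investigation, and notification, including notifying the supervisory authority without undue delay and, where feasible, within 72 hours of becoming aware of a notifiable breach.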

[ ] Train Staff: Ensure all staff are trained to recognize a data breach and a data subject request and know how to report them immediately. Conduct regular drills of the response plan.

5. Third Parties & International Transfers:

[ ] Vendor Due Diligence: Implement a formal vendor management program that includes thorough data protection and security due diligence on all third-party processors.

[ ] Execute DPAs: Ensure a compliant Data Processing Agreement (DPA) is in place with every vendor that processes personal data on your behalf.

[ ] Map International Data Flows: Identify all cross-border transfers of personal data.

[ ] Implement Transfer Mechanisms: For transfers to non-adequate third countries, implement a valid transfer mechanism (e.g., UK IDTA/Addendum, EU SCCs) and conduct and document a Transfer Impact Assessment (TIA) for each transfer.
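Two deadlines in this checklist are easy to miscalculate under pressure: the one-month DSAR response window (Article 12(3)) and the 72-hour breach notification window (Article 33(1)). The sketch below computes both. It assumes the calendar-month reading used in UK ICO guidance (a request received on 3 September is due on 3 October, and where the following month has no corresponding date the deadline is that month's last day); the function names are hypothetical, weekend and public-holiday adjustments are ignored, and the counting convention your own supervisory authority applies should be confirmed before relying on it.

```python
from calendar import monthrange
from datetime import date, datetime, timedelta


def add_months(start: date, months: int) -> date:
    """Same day-of-month `months` later, clamped to the last day of a shorter month."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, monthrange(year, month)[1])
    return date(year, month, day)


def dsar_deadline(received: date, extended: bool = False) -> date:
    # Article 12(3): one month from receipt, extendable by two further months
    # for complex or numerous requests (three months in total).
    return add_months(received, 3 if extended else 1)


def breach_notification_deadline(became_aware: datetime) -> datetime:
    # Article 33(1): notify the supervisory authority without undue delay and,
    # where feasible, no later than 72 hours after becoming aware of the breach.
    return became_aware + timedelta(hours=72)


print(dsar_deadline(date(2024, 9, 3)))                            # 2024-10-03
print(dsar_deadline(date(2024, 1, 31)))                           # 2024-02-29 (clamped)
print(breach_notification_deadline(datetime(2024, 5, 3, 9, 0)))   # 2024-05-06 09:00:00
```

Note that Article 12(3) also requires the requester to be told about any extension, with reasons, within the first month; this sketch does not model that step or any clock adjustments your regulator's guidance may permit.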

7.2. Building a Culture of Data Privacy to Foster Patient Trust and Mitigate Risk

Legal compliance is the floor, not the ceiling. The most resilient and successful telemedicine providers will be those that move beyond a check-box mentality to build a genuine culture of data privacy. In the sensitive domain of healthcare, trust is the most valuable currency, and a demonstrable commitment to privacy is the primary means of earning it.

Executive Leadership as Privacy Champions: A sustainable privacy culture must be driven from the top down. The organization's leadership must champion data protection not as a burdensome cost center or a purely legal obligation, but as a core business value and a key differentiator in the market. When privacy is positioned as central to the company's mission of providing safe and effective care, it informs better decision-making at every level.

Continuous and Role-Specific Training: Annual, generic data protection training is insufficient to address the complex risks of a health-tech environment. A mature privacy culture invests in continuous, role-specific education. Developers need training on secure coding and Privacy by Design principles. Clinicians using the platform need training on maintaining confidentiality during remote consultations. Customer support staff need in-depth training on identifying and handling data subject requests. This targeted approach ensures that every employee understands their specific role in protecting patient data.

Embedding "Privacy Thinking" into Business Processes: Privacy should not be the sole responsibility of the DPO or the legal team. It must be integrated into the daily workflows of the entire organization.

Product Development: Every new feature in the product roadmap must pass through a privacy review gate.

Procurement: No new vendor should be engaged without passing a formal data protection due diligence check.

Marketing: All marketing campaigns must be reviewed to ensure they are based on valid consent and respect user preferences.

When privacy becomes a standard consideration in every business process, compliance becomes a natural outcome rather than a forced effort.

Transparency as a Competitive Advantage: In a market crowded with digital health solutions, many of which have opaque or concerning data practices, radical transparency can be a powerful tool for building patient trust. This means going beyond the minimum legal requirements of a privacy notice. It could involve publishing a plain-language summary of your DPIA, creating a "Privacy Center" on your website that clearly explains your data practices, or providing users with a clear dashboard to see exactly who has accessed their data. By demonstrating a robust and ethical approach to data stewardship, a provider can directly address the privacy anxieties that prevent many patients from embracing telemedicine, turning a regulatory obligation into a powerful driver of user adoption and loyalty.
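A "who has accessed my data" dashboard depends on two controls working together: role-based access control under least privilege, and a log that records every access attempt. The following is a minimal sketch, assuming a hypothetical three-role permission mapping and an in-memory log; a production system would persist the log in tamper-resistant storage and would usually add context checks (such as an active care relationship) on top of roles.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping: each role is granted only the
# actions its job requires (least privilege).
ROLE_PERMISSIONS = {
    "treating_clinician": {"read_clinical", "write_clinical"},
    "support_agent": {"read_contact_details"},
    "billing_clerk": {"read_billing"},
}


@dataclass
class AccessLog:
    events: list = field(default_factory=list)

    def request(self, user: str, role: str, patient_id: str, action: str) -> bool:
        """Check the role's permissions and log the attempt, allowed or not."""
        allowed = action in ROLE_PERMISSIONS.get(role, set())  # unknown role: deny
        self.events.append({
            "when": datetime.now(timezone.utc).isoformat(timespec="seconds"),
            "user": user,
            "role": role,
            "patient_id": patient_id,
            "action": action,
            "allowed": allowed,
        })
        return allowed

    def accesses_for(self, patient_id: str) -> list:
        """Rows a patient-facing 'who accessed my data' view could display."""
        return [
            {key: event[key] for key in ("when", "role", "action")}
            for event in self.events
            if event["patient_id"] == patient_id and event["allowed"]
        ]


log = AccessLog()
log.request("dr_a", "treating_clinician", "patient-123", "read_clinical")  # allowed
log.request("agent_b", "support_agent", "patient-123", "read_clinical")    # denied
for row in log.accesses_for("patient-123"):
    print(row["when"], row["role"], row["action"])
```

Showing the patient roles and actions rather than individual staff names is one possible design choice; denied attempts remain in the full log for the DPO and auditors rather than appearing in the patient view.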

7.3. Preparing for Regulatory Scrutiny and Future-Proofing Your Service

The regulatory landscape for digital health is not static; it is in a constant state of evolution. A forward-looking compliance strategy must be agile and prepared for future changes.

Monitoring the Evolving Regulatory Matrix: The future of digital health regulation will be defined by the complex intersection of multiple legal frameworks. A telemedicine provider can no longer operate in a single legal silo; compliance now requires an integrated function that understands the interplay between:

Data Protection Law: The GDPR, the UK Data (Use and Access) Act, and their ongoing interpretation by courts and regulators.

Medical Device Regulation: In the UK, an AI diagnostic tool could be classified as a medical device, bringing it under the purview of the Medicines and Healthcare products Regulatory Agency (MHRA) and requiring adherence to specific safety and performance standards.

AI-Specific Legislation: Emerging laws like the EU AI Act will impose a new, risk-based set of obligations on AI systems, particularly those in high-risk sectors like healthcare.

Navigating this multi-layered regulatory matrix is the primary strategic compliance challenge for the next decade of health-tech.

Documentation as Your Primary Defense: In the event of a regulatory investigation, a patient complaint, or litigation following a data breach, the organization's ability to produce comprehensive, contemporaneous documentation will be its primary line of defense. Meticulously maintained DPIAs, RoPAs, DPAs, consent records, training logs, and incident response reports are the tangible evidence used to demonstrate accountability and due diligence. A lack of documentation is often viewed by regulators as a lack of compliance itself.
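Consent records are a good example of documentation that must be both contemporaneous and credible. One common technique is an append-only log in which each entry carries the hash of the previous entry, so later edits to history become detectable. The sketch below assumes a hypothetical `ConsentLedger` class, in-memory storage, and pseudonymous patient identifiers; it illustrates hash chaining, not a complete consent-management system.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class ConsentLedger:
    """Hypothetical append-only, hash-chained record of consent events."""
    entries: list = field(default_factory=list)

    def record(self, patient_id: str, purpose: str, granted: bool, notice_version: str) -> dict:
        entry = {
            "patient_id": patient_id,          # pseudonymous ID, not a name
            "purpose": purpose,                # one entry per purpose keeps consent granular
            "granted": granted,                # False records a withdrawal
            "notice_version": notice_version,  # which privacy notice the patient saw
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = _digest(entry)  # hash covers every field except itself
        self.entries.append(entry)
        return entry

    def current_status(self, patient_id: str, purpose: str) -> bool:
        """Most recent event wins; no record at all means no consent."""
        for entry in reversed(self.entries):
            if entry["patient_id"] == patient_id and entry["purpose"] == purpose:
                return entry["granted"]
        return False

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        expected_prev = GENESIS
        for entry in self.entries:
            content = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != expected_prev or _digest(content) != entry["hash"]:
                return False
            expected_prev = entry["hash"]
        return True


ledger = ConsentLedger()
ledger.record("patient-123", "research_use", True, "v2.1")
ledger.record("patient-123", "research_use", False, "v2.1")  # withdrawal
print(ledger.current_status("patient-123", "research_use"))  # False
print(ledger.verify())                                        # True
ledger.entries[0]["granted"] = False                          # tamper with history
print(ledger.verify())                                        # False
```

Hash chaining makes tampering evident, not impossible: anyone able to rewrite the whole chain can recompute every hash, so in practice the latest hash is typically anchored somewhere the application itself cannot modify, such as write-once storage or a separately controlled system.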

Embracing Ethical AI as a Long-Term Strategy: As AI becomes more deeply integrated into healthcare, future-proofing a service means moving beyond mere legal compliance to embrace a broader framework of AI ethics. This involves a proactive and continuous commitment to ensuring fairness, mitigating bias, promoting explainability, and maintaining human oversight. Organizations that build their AI systems on a foundation of ethics and accountability will be better positioned to earn the long-term trust of patients and clinicians, and more resilient to the inevitable increase in regulatory scrutiny of these powerful technologies.

FAQ

1. What are the core principles of the GDPR, and how do they apply to digital healthcare services like telemedicine?

The General Data Protection Regulation (GDPR) is built upon seven core principles that govern the entire lifecycle of personal data processing. For digital healthcare providers, these principles translate into specific operational mandates:

Lawfulness, Fairness, and Transparency: All data processing must have a legitimate legal basis, be conducted fairly, and be transparent to the individual. This means no hidden data collection; privacy notices must be clear, concise, and easily accessible, detailing what data is collected, why, and with whom it's shared.

Purpose Limitation: Data must be collected for specified, explicit, and legitimate purposes and not be further processed incompatibly with those purposes. For instance, data collected for a diagnosis cannot be repurposed for marketing without a new lawful basis, which for marketing would typically be fresh consent.

Data Minimisation: Only data that is adequate, relevant, and limited to what is necessary for the stated purpose should be collected. Telemedicine platforms should avoid excessive data collection, such as requesting access to an entire photo library for a single image diagnosis.

Accuracy: Personal data must be accurate and kept up to date. Providers must offer clear mechanisms for patients to correct their information promptly.

Storage Limitation: Data should not be kept longer than necessary for the purposes for which it was collected. Telemedicine providers must have clear data retention policies based on legal and medical requirements, ensuring secure deletion or anonymisation once the retention period expires.

Integrity and Confidentiality: This principle mandates robust technical and organisational measures to ensure data security, including protection against unauthorised processing, accidental loss, destruction, or damage. Examples include end-to-end encryption for communications and strong encryption for data at rest.

Accountability: Data controllers must be able to demonstrate compliance with all principles. This requires maintaining comprehensive documentation, including processing records, privacy policies, consent evidence, and staff training records.

These principles are interconnected; a failure in one can lead to non-compliance across others, requiring continuous re-evaluation of data use.
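Storage limitation is only enforceable in practice if every record type maps to a documented retention period. A minimal sketch of a periodic retention check, assuming a hypothetical retention schedule whose periods are placeholders (real periods must come from the provider's retention policy and applicable medical-records law), a hypothetical legal-hold flag, and years approximated as 365 days:

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention schedule in days. These numbers are placeholders,
# NOT legal guidance: real periods come from the documented retention policy.
RETENTION_DAYS = {
    "consultation_transcript": 8 * 365,
    "uploaded_image": 8 * 365,
    "support_ticket": 2 * 365,
    "marketing_preference": 1 * 365,
}


@dataclass
class StoredRecord:
    record_id: str
    category: str
    created: date
    legal_hold: bool = False  # hypothetical flag, e.g. for litigation or a regulator's request


def retention_action(record: StoredRecord, today: date) -> str:
    """Return 'retain', 'delete_or_anonymise', or 'review' for one record."""
    period = RETENTION_DAYS.get(record.category)
    if period is None:
        return "review"  # unmapped data type: a gap in the policy, escalate it
    if record.legal_hold:
        return "retain"  # a documented hold overrides the normal schedule
    if today >= record.created + timedelta(days=period):
        return "delete_or_anonymise"
    return "retain"


today = date(2024, 6, 1)
for rec in [
    StoredRecord("r1", "support_ticket", date(2021, 3, 1)),
    StoredRecord("r2", "consultation_transcript", date(2021, 3, 1)),
    StoredRecord("r3", "chat_metadata", date(2024, 1, 1)),
]:
    print(rec.record_id, retention_action(rec, today))
# r1 delete_or_anonymise / r2 retain / r3 review
```

Returning "review" for unmapped categories, rather than silently keeping or deleting, surfaces gaps in the retention policy itself.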

2. Why is health data considered 'special category data' under GDPR, and what are the implications for telemedicine providers?

Under GDPR, health data is afforded the highest level of protection as it falls under "special categories of personal data" (Article 9). This elevated status is due to its sensitive nature and the significant risks associated with its misuse, such as discrimination or social stigma.

Broad Definition: 'Data concerning health' (Article 4(15)) includes any personal data related to an individual's physical or mental health, including the provision of healthcare services, that reveals information about their health status. In telemedicine, this encompasses chat transcripts, symptom descriptions, uploaded images, diagnoses, and even inferred data (e.g., deducing a mental health condition from chat patterns). Biometric data used to identify a user (e.g., facial recognition for login) is a separate special category under Article 9 in its own right.

General Prohibition: Article 9(1) generally prohibits the processing of special category data by default. This means telemedicine providers can only process health data if they meet one of a limited number of specific and narrowly interpreted exceptions.

Dual-Lock Requirement: To process health data, a provider must satisfy a stringent "dual-lock" legal requirement: identifying a lawful basis from Article 6 (e.g., consent, contract, legal obligation, vital interests) and a separate, specific condition for processing special category data from Article 9.

Key Article 9 conditions relevant to telemedicine include:

Explicit Consent (Article 9(2)(a)): Requires a clear, affirmative statement from the user, a higher bar than ordinary consent.

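Health and Social Care (Article 9(2)(h)): Directly covers core activities like medical diagnosis, provision of health or social care, and management of health systems, provided the data is processed by or under the responsibility of a professional subject to an obligation of professional secrecy (Article 9(3)).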

Public Interest in Public Health (Article 9(2)(i)): For purposes like protecting against cross-border health threats.

Vital Interests (Article 9(2)(c)): In emergencies where the individual cannot give consent.

This dual requirement ensures robust justification for handling such sensitive information.
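The dual-lock rule lends itself to a mechanical check over the processing register: every activity needs an Article 6 basis, and every activity touching health data also needs an Article 9 condition. The sketch below assumes a hypothetical `ProcessingActivity` record and lists only the Article 9 conditions named in this answer; it also flags reliance on Article 9(2)(h) without the professional-secrecy safeguard that Article 9(3) attaches to it. Such a check can catch documentation gaps, but it cannot decide whether a chosen basis is actually appropriate.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Art6Basis(Enum):
    CONSENT = "6(1)(a)"
    CONTRACT = "6(1)(b)"
    LEGAL_OBLIGATION = "6(1)(c)"
    VITAL_INTERESTS = "6(1)(d)"
    PUBLIC_TASK = "6(1)(e)"
    LEGITIMATE_INTERESTS = "6(1)(f)"


class Art9Condition(Enum):
    # Only the conditions discussed in this answer; Article 9(2) lists others.
    EXPLICIT_CONSENT = "9(2)(a)"
    VITAL_INTERESTS = "9(2)(c)"
    HEALTH_OR_SOCIAL_CARE = "9(2)(h)"
    PUBLIC_HEALTH = "9(2)(i)"


@dataclass
class ProcessingActivity:
    name: str
    involves_health_data: bool
    art6_basis: Optional[Art6Basis] = None
    art9_condition: Optional[Art9Condition] = None
    professional_secrecy: bool = False  # processed by/under a professional bound by secrecy


def lawfulness_gaps(activity: ProcessingActivity) -> List[str]:
    """List documentation gaps; an empty list means the required locks are recorded."""
    gaps = []
    if activity.art6_basis is None:
        gaps.append("no Article 6 lawful basis documented")
    if activity.involves_health_data:
        if activity.art9_condition is None:
            gaps.append("no Article 9 condition documented")
        elif (activity.art9_condition is Art9Condition.HEALTH_OR_SOCIAL_CARE
              and not activity.professional_secrecy):
            gaps.append("Article 9(2)(h) relied on without Article 9(3) secrecy safeguard")
    return gaps


# Illustrative register entries, not recommendations for any real service.
register = [
    ProcessingActivity("chat consultation", True, Art6Basis.CONTRACT,
                       Art9Condition.HEALTH_OR_SOCIAL_CARE, professional_secrecy=True),
    ProcessingActivity("symptom data for model training", True, Art6Basis.CONSENT),
    ProcessingActivity("account management", False, Art6Basis.CONTRACT),
]
for activity in register:
    print(activity.name, "->", lawfulness_gaps(activity) or "OK")
```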

3. What is 'explicit consent' in the context of health data, and how should telemedicine services implement it?

'Explicit consent' (Article 9(2)(a)) is a more stringent standard than regular consent under GDPR, particularly crucial for sensitive health data. It cannot be implied and must be a direct, affirmative, and specific statement from the individual.

Practical implementation for telemedicine services includes: