Privacy Impact Assessments for AI systems under GDPR

AI systems have gained prominence for their ability to enhance customer interactions, streamline operations, and drive growth.

The proliferation of Artificial Intelligence (AI) systems that process personal data marks a fundamental turning point in data protection governance. These systems move beyond the predictable, rule-based logic of traditional information technology, introducing probabilistic, evolving, and often opaque models of processing. This paradigm shift amplifies existing privacy risks and creates novel challenges that demand a more rigorous and forward-looking approach to accountability. Within the framework of the EU's General Data Protection Regulation (GDPR), the Data Protection Impact Assessment (DPIA) emerges not merely as a compliance exercise, but as the primary legal and ethical mechanism for identifying, comprehending, and mitigating the heightened risks posed by AI.

This report provides an exhaustive analysis of the legal obligations, regulatory guidance, and practical methodologies for conducting DPIAs for AI systems under the GDPR. Its central argument is that for the vast majority of AI applications processing personal data, the DPIA is not an optional consideration but a mandatory legal prerequisite. Furthermore, a standard, generic DPIA is insufficient for this purpose. The assessment process must be substantively adapted to address the unique risk profile of AI, including the challenges of algorithmic bias, the "black box" problem, the spontaneous creation of inferred data, and the profound technical difficulties in upholding data subject rights.

The key findings of this report are as follows:

The DPIA is a distinct legal mandate: The distinction between a general Privacy Impact Assessment (PIA) and a DPIA is legally significant. The DPIA is a specific, non-negotiable requirement under Article 35 of the GDPR, triggered by high-risk processing activities. Its focus is squarely on the potential harm to the fundamental rights and freedoms of individuals, a more stringent and rights-centric standard than that of a typical PIA.

AI systems are presumptively high-risk: The characteristics inherent to most AI systems—such as the use of innovative technology, large-scale profiling, the combination of datasets, and the potential for automated decisions with significant legal or personal effects—directly align with the high-risk criteria defined in the GDPR and by supervisory authorities like the UK's Information Commissioner's Office (ICO) and the European Data Protection Board (EDPB). This alignment effectively establishes the DPIA as a default requirement for AI development and deployment.

AI-specific risks demand an augmented DPIA: A standard DPIA designed for traditional IT systems will fail to capture the novel risks of AI. A credible AI DPIA must be augmented to systematically assess and mitigate unique challenges, including allocative and representational harms from algorithmic bias, the erosion of transparency due to model opacity, the tension between AI's data appetite and the principle of data minimization, the creation of new, potentially sensitive personal data through inference, and the practical impediments to fulfilling rights such as erasure and access.

The emerging regulatory ecosystem requires a unified approach: The EU AI Act introduces a complementary Fundamental Rights Impact Assessment (FRIA) for high-risk AI systems. This creates a dual-assessment landscape. However, the AI Act allows for the integration of the FRIA into the DPIA. Therefore, a robust, well-executed AI DPIA under GDPR can and should serve as the foundational framework for satisfying the broader compliance obligations of the AI Act, which calls for a unified and efficient assessment strategy from organizations.

Ultimately, for any organization developing or deploying AI within the European Union's jurisdiction, mastering the AI-specific DPIA is an indispensable component of legal compliance, ethical governance, and strategic risk management.

Section II: Foundational Concepts: Distinguishing PIAs from GDPR-Mandated DPIAs

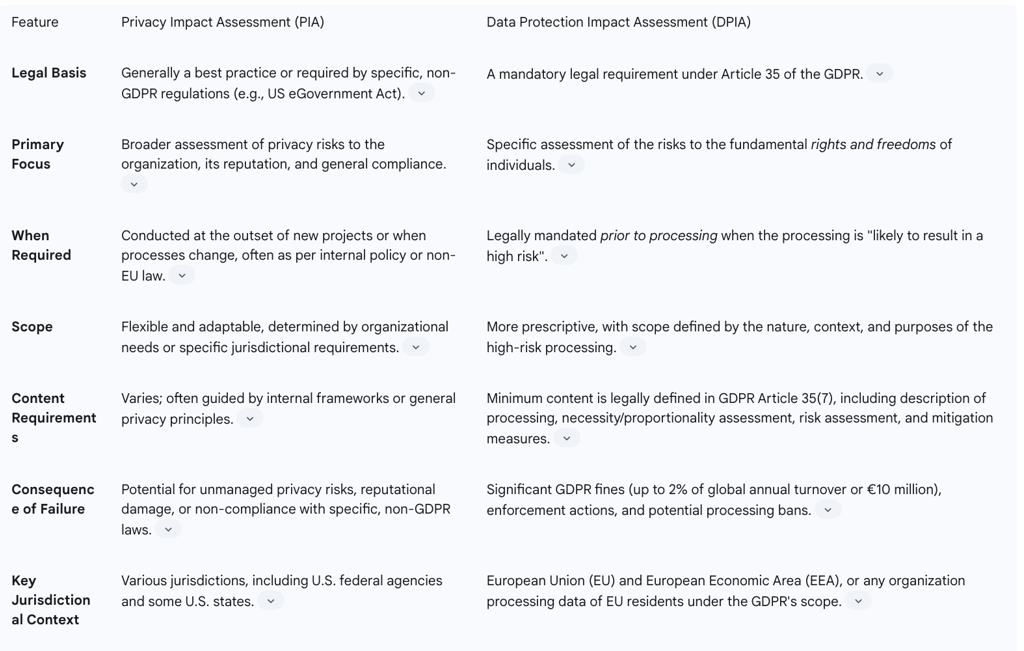

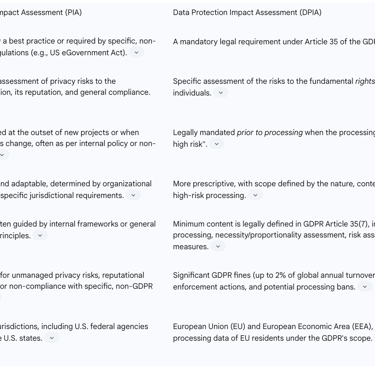

To navigate the complex requirements for assessing AI systems, it is imperative to first establish a precise understanding of the terminology involved. While the terms Privacy Impact Assessment (PIA) and Data Protection Impact Assessment (DPIA) are often used interchangeably in general discourse, they have distinct origins, scopes, and legal implications. Under the GDPR, the DPIA is a term of art with specific legal force that goes far beyond the general best-practice nature of a PIA.

The Privacy Impact Assessment (PIA): A Best-Practice Genesis

The concept of the Privacy Impact Assessment (PIA) predates the GDPR and originated as a flexible, systematic process to evaluate the potential effects of a project, system, or policy on individual privacy. PIAs are generally considered a best-practice tool for internal risk management, helping organizations to identify and address privacy gaps in their strategies.

The scope and methodology of a PIA are often adaptable, tailored to an organization's internal privacy programs or specific non-EU legal frameworks. A notable example is the requirement for U.S. federal government agencies to conduct PIAs under the eGovernment Act of 2002. The primary objective of a PIA is frequently centered on the organization itself: to build and maintain trust with users, protect the organization's reputation, and ensure alignment with a broader set of privacy laws and principles. While it assesses how an organization collects, uses, and shares personal information, its focus is not as legally prescribed as that of a DPIA.

The Data Protection Impact Assessment (DPIA): A Legal Mandate under GDPR

In contrast, the Data Protection Impact Assessment (DPIA) is a specific and legally binding process mandated by Article 35 of the GDPR. It is not an optional best practice but a compulsory obligation for any data processing that is "likely to result in a high risk to the rights and freedoms of natural persons". This legal status is the most critical differentiator.

The focus of a DPIA is narrower and more intense than that of a typical PIA. Its explicit purpose is to analyze and mitigate risks to the fundamental rights and freedoms of individuals, which include but are not limited to the right to data protection. The content, triggers, and process for a DPIA are more prescriptive, being defined in Article 35 of the GDPR and further specified in guidelines from supervisory authorities like the ICO and the EDPB. Failure to conduct a DPIA when required, or conducting one improperly, is a breach of the GDPR and can lead to significant regulatory enforcement action, including fines of up to €10 million or 2% of the organization's worldwide annual turnover, whichever is greater.
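As a brief worked illustration of the "whichever is greater" rule described above, the following sketch computes the upper limit of the fine for a given turnover. The function name and the sample turnover figures are assumptions chosen for illustration; the actual amount of any fine is set by the supervisory authority and may be far below this ceiling.

```python
def fine_ceiling_eur(worldwide_annual_turnover_eur: int) -> int:
    """Return the greater of EUR 10 million or 2% of worldwide annual turnover."""
    fixed_ceiling = 10_000_000
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_ceiling = worldwide_annual_turnover_eur * 2 // 100
    return max(fixed_ceiling, turnover_ceiling)


# Hypothetical organizations, for illustration only.
smaller_company = fine_ceiling_eur(50_000_000)       # 2% is EUR 1 million, so the fixed ceiling applies
larger_company = fine_ceiling_eur(2_000_000_000)     # 2% is EUR 40 million, so the turnover ceiling applies
print(smaller_company, larger_company)
```

The example shows why the turnover-based limb matters mainly for large organizations: below EUR 500 million in turnover, the fixed EUR 10 million figure is always the higher of the two.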

The introduction of the DPIA under GDPR represents a fundamental philosophical shift in risk assessment. While a PIA often centers on mitigating risks to the organization—such as reputational damage, customer churn, or non-compliance penalties—the DPIA legally re-centers the entire exercise on identifying and mitigating risks to the individual. An organization-centric PIA might focus on preventing a data breach to avoid regulatory fines and negative press coverage. A rights-centric DPIA, however, must go further. It must consider a broader spectrum of potential harms to the individual, such as discrimination arising from a biased algorithm, loss of autonomy from opaque automated decisions, or significant social and economic disadvantage. These are profound harms to the data subject, even if they do not translate directly into a financial loss or compliance breach for the company. This legal and ethical re-evaluation is paramount when assessing AI systems, where the potential for such societal and individual harms is significantly amplified.

The following table provides a comparative analysis of the two assessments, highlighting their distinct legal and practical characteristics.

Section III: The Legal Cornerstone: Deconstructing GDPR Article 35

Article 35 of the GDPR is the legal foundation upon which all obligations related to DPIAs are built. A meticulous analysis of its text is essential for understanding the specific requirements that apply to AI systems. The article was drafted with a deliberate, technology-neutral, and forward-looking perspective, anticipating the rise of complex, data-intensive processing like that performed by AI.

Article 35(1): The "High Risk" Threshold

The core obligation is established in Article 35(1): "Where a type of processing in particular using new technologies, and taking into account the nature, scope, context and purposes of the processing, is likely to result in a high risk to the rights and freedoms of natural persons, the controller shall, prior to the processing, carry out an assessment of the impact of the envisaged processing operations on the protection of personal data".

This clause is profoundly significant for AI. The explicit mention of "new technologies" directly implicates AI, machine learning, and other advanced algorithmic systems. The requirement to conduct the assessment "prior to the processing" firmly embeds the DPIA within the principle of "Data Protection by Design and by Default" (Article 25), making it a proactive risk management tool rather than a reactive compliance check.

Article 35(3): Non-Exhaustive List of Mandatory DPIA Scenarios

Article 35(3) provides a non-exhaustive list of processing activities that are automatically considered to require a DPIA. These scenarios are not descriptions of traditional IT processing but are emblematic of modern AI applications:

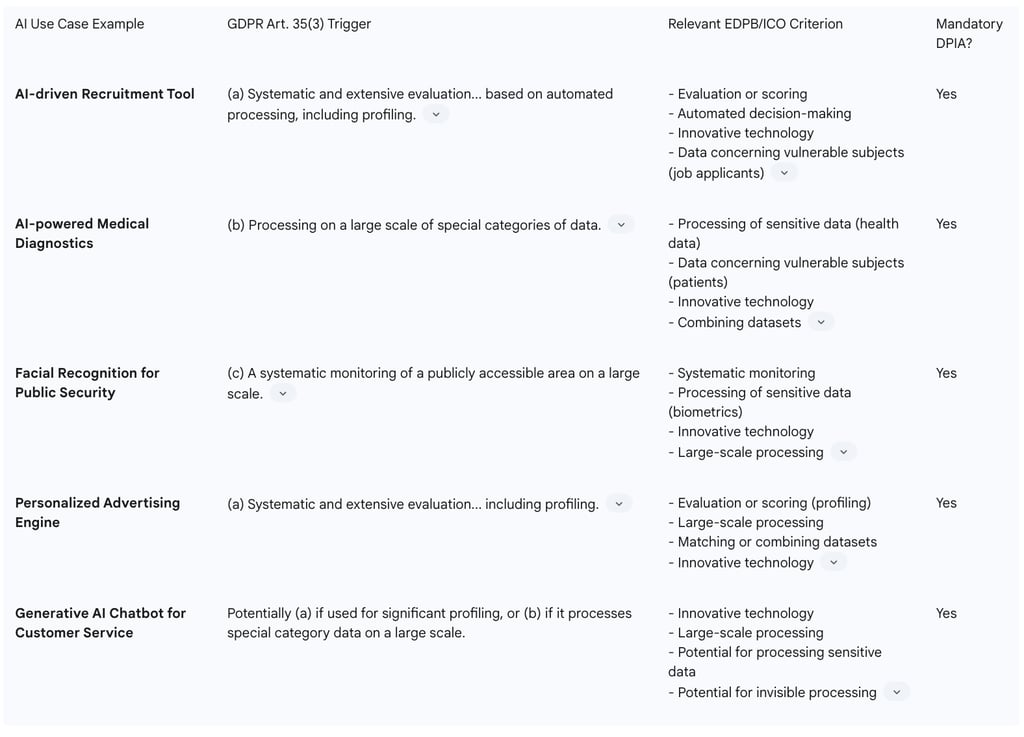

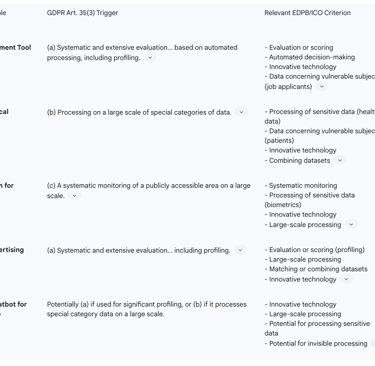

(a) "A systematic and extensive evaluation of personal aspects... based on automated processing, including profiling... on which decisions are based that produce legal effects... or similarly significantly affect the natural person". This is the most direct and common trigger for AI systems. It precisely describes the function of AI in domains such as automated recruitment screening, online credit application decisions, and insurance premium calculations, all of which use profiling to make decisions that have significant consequences for individuals.

(b) "Processing on a large scale of special categories of data... or of personal data relating to criminal convictions". AI systems are increasingly deployed in sectors that inherently handle such data. Examples include AI-powered diagnostic tools in healthcare processing patient data, biometric identification systems using facial or fingerprint data, and AI tools used in the justice system that process criminal offense data.

(c) "A systematic monitoring of a publicly accessible area on a large scale". This directly applies to AI-driven surveillance technologies, such as the use of live facial recognition cameras in public spaces or the analysis of crowd behavior through video feeds.

The language of Article 35, particularly the reference to "new technologies" and the specific examples in Article 35(3), demonstrates that the framework was not designed to be static. It was intentionally crafted to be flexible and robust enough to govern future technological developments. The legislators foresaw the rise of data-intensive, algorithmically-driven systems that learn, infer, and make automated decisions. Subsequent guidance from regulatory bodies like the EDPB and ICO, which explicitly names AI and machine learning as triggers for DPIAs, confirms this intent. This establishes that applying the GDPR's DPIA requirement to AI is not an unforeseen regulatory burden or a legal retrofit, but rather the intended application of a future-proofed law.

Article 35(7): Minimum Content Requirements for a DPIA

Article 35(7) prescribes the minimum elements that a DPIA must contain, forming a blueprint for the assessment process. These are:

(a) A systematic description of the envisaged processing operations and the purposes of the processing, including, where applicable, the legitimate interest pursued by the controller. This requires a clear and detailed account of what data is being processed, how, and why.

(b) An assessment of the necessity and proportionality of the processing operations in relation to the purposes. This forces the controller to justify the processing and demonstrate that it is not excessive.

(c) An assessment of the risks to the rights and freedoms of data subjects. This is the core risk analysis component, focusing on potential harm to individuals.

(d) The measures envisaged to address the risks, including safeguards, security measures and mechanisms to ensure the protection of personal data and to demonstrate compliance. This is the risk mitigation component, where the controller outlines the technical and organizational controls to be implemented.

The Role of the DPO and Consultation with Supervisory Authorities (Articles 35(2) & 36)

The GDPR establishes a clear governance structure around the DPIA. Article 35(2) mandates that the controller "shall seek the advice of the data protection officer, where designated" when carrying out the assessment. The role of the data protection officer (DPO) is not passive; they are expected to provide expert advice on whether a DPIA is necessary, the appropriate methodology, and whether the proposed mitigation measures are sufficient to address the identified risks.

Furthermore, Article 36 introduces a critical backstop. If a DPIA reveals a high residual risk that the controller cannot mitigate through available measures, the controller is legally obligated to consult the relevant supervisory authority (such as the ICO in the UK or the CNIL in France) before commencing the processing. Under Article 36(2), the authority then has up to eight weeks from receipt of the request to provide written advice, a period it may extend by a further six weeks for complex processing, and it may use its powers to prohibit the processing if it is deemed to be in breach of the GDPR. This prior consultation mechanism acts as a powerful regulatory check on exceptionally risky data processing activities.

Section IV: The AI Imperative: Identifying High-Risk AI Systems Requiring a DPIA

While Article 35 of the GDPR provides the legal foundation, guidance from the European Data Protection Board (EDPB) and national supervisory authorities provides the practical criteria for determining when a DPIA is mandatory. A synthesis of this guidance reveals that the deployment of AI systems processing personal data almost invariably triggers the DPIA requirement.

Synthesizing ICO and EDPB Guidance

The EDPB, building on the work of its predecessor, the Article 29 Working Party (WP29), has established a set of nine criteria to help controllers identify processing "likely to result in a high risk". As a general rule, if a processing activity meets at least two of these criteria, a DPIA is presumed to be required. These criteria are:

Evaluation or scoring: This includes profiling and predicting aspects of a person's life, such as work performance, economic situation, health, or behavior.

Automated decision-making with legal or similarly significant effect: Decisions that impact a person's legal rights or have a major effect on their circumstances.

Systematic monitoring: Large-scale, systematic observation of individuals, particularly in public spaces.

Sensitive or highly personal data: Processing of special category data (e.g., health, biometrics) or data of a highly personal nature (e.g., financial data).

Data processed on a large scale: While not strictly defined, this considers the number of data subjects, volume of data, duration, and geographical extent of the processing.

Matching or combining datasets: Linking data from different sources, especially when done in a way the data subject would not reasonably expect.

Data concerning vulnerable data subjects: Processing the data of individuals who may be unable to freely consent or object, such as children, employees, or patients.

Innovative use or applying new technological or organizational solutions: Using technologies in a new way or applying new technologies that may create novel risks.

Preventing data subjects from exercising a right or using a service or a contract: When the processing is used to decide on an individual's access to services, such as a bank deciding whether to grant a loan.

AI as "Innovative Technology": The Automatic Trigger

The eighth criterion, "innovative use or applying new technological solutions," is particularly pertinent. The UK's ICO, in its official list of processing operations requiring a DPIA, explicitly names "Artificial intelligence, machine learning and deep learning" as examples of such innovative technology.

Crucially, the guidance from both the ICO and the EDPB clarifies that a DPIA is required for any processing that involves an innovative technology in combination with any other criterion from the list. This "two-factor" test establishes a very low threshold for requiring a DPIA for AI systems. It is exceptionally difficult to conceive of a functional and commercially viable AI system that processes personal data but does not also meet at least one other high-risk criterion.

For instance, a marketing personalization engine uses AI (innovative technology - factor 1). It also inherently performs "profiling" and "combines datasets" from various sources like browsing and purchase history (evaluation/scoring and matching datasets - factor 2). Therefore, a DPIA is mandatory. Similarly, an AI-powered content moderation tool uses AI (innovative technology - factor 1). It processes data on a "large scale" and may infer "sensitive data" like political opinions from user posts (large-scale processing and sensitive data - factor 2). Again, a DPIA is mandatory.
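The two-criteria logic applied in these examples can be sketched as a small screening helper. This is a minimal illustration under stated assumptions, not an official tool: the enum names, the `screening_record` function, and the wording of its output are all hypothetical, and the result of any real screening remains a legal judgment for the controller and the DPO.

```python
from __future__ import annotations

from enum import Enum


class Criterion(Enum):
    """The nine high-risk criteria listed above, in the same order."""
    EVALUATION_OR_SCORING = 1
    AUTOMATED_DECISION_SIGNIFICANT_EFFECT = 2
    SYSTEMATIC_MONITORING = 3
    SENSITIVE_OR_HIGHLY_PERSONAL_DATA = 4
    LARGE_SCALE = 5
    MATCHING_OR_COMBINING_DATASETS = 6
    VULNERABLE_DATA_SUBJECTS = 7
    INNOVATIVE_TECHNOLOGY = 8
    PREVENTING_RIGHT_SERVICE_OR_CONTRACT = 9


def dpia_presumed_required(criteria_met: set[Criterion]) -> bool:
    """General rule of thumb: two or more criteria met means a DPIA is presumed required."""
    return len(criteria_met) >= 2


def screening_record(project: str, criteria_met: set[Criterion]) -> str:
    """Build a one-line justification for the screening file (hypothetical format)."""
    names = ", ".join(sorted(c.name for c in criteria_met))
    if dpia_presumed_required(criteria_met):
        verdict = "DPIA required"
    else:
        verdict = "fewer than two criteria met; document the reasoning"
    return f"{project}: {verdict} (criteria met: {names})"


# The two examples from the text above.
marketing_engine = {
    Criterion.INNOVATIVE_TECHNOLOGY,
    Criterion.EVALUATION_OR_SCORING,
    Criterion.MATCHING_OR_COMBINING_DATASETS,
}
content_moderation = {
    Criterion.INNOVATIVE_TECHNOLOGY,
    Criterion.LARGE_SCALE,
    Criterion.SENSITIVE_OR_HIGHLY_PERSONAL_DATA,
}

print(screening_record("Marketing personalization engine", marketing_engine))
print(screening_record("Content moderation tool", content_moderation))
```

Keeping the criteria as an explicit enumeration makes the screening output auditable: the record names the exact triggers relied upon rather than a bare yes or no.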

This logic leads to a clear conclusion for DPOs and legal counsel: the internal discussion should not be if a DPIA is needed for an AI project, but how to conduct a robust and comprehensive one. The screening process becomes a formality to document the clear legal triggers, and organizational resources should be allocated directly to the assessment itself.

Common AI Use Cases and Their Triggers

The following table illustrates how common AI use cases map directly onto the mandatory DPIA triggers defined by the GDPR and regulatory guidance.

Section V: Unique AI-Centric Risks: A Deep Dive for Comprehensive Impact Assessments

A DPIA for an AI system cannot be a simple transposition of the methodology used for traditional IT systems. The very nature of AI introduces a new class of risks that are more complex, less predictable, and potentially more harmful. A traditional system processes data in a linear, predictable fashion based on pre-programmed rules. An AI system, by contrast, fundamentally transforms data. It learns from it, its internal logic evolves, and it creates entirely new data through inference. This transformative capability is the source of the unique risks that must be the central focus of any credible AI DPIA.

5.1 The Fairness, Bias, and Discrimination Challenge

Risk: AI models, particularly those based on machine learning, learn from the data they are trained on. If this training data reflects historical or societal biases, the AI system will not only learn but can also perpetuate and amplify these biases at scale. This can lead to discriminatory and unfair outcomes in high-stakes decisions related to employment, credit, housing, and even criminal justice, creating harms that extend beyond data protection into the realms of anti-discrimination and fundamental human rights law.

DPIA Consideration: The DPIA must move beyond a simple inventory of data fields to a qualitative assessment of the training data itself. It must document the data's provenance, assess its representativeness for the population it will be applied to, and explicitly consider the potential for embedded bias. The assessment must detail the "fairness" metrics and statistical tests used to detect bias and document the mitigation strategies employed, such as re-weighting data, using algorithmic fairness toolkits, or implementing robust human oversight. The ICO's guidance explicitly states that DPIAs provide an opportunity to assess whether processing will lead to fair outcomes.
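To make the reference to fairness metrics concrete, the sketch below computes two commonly used group-level measures from a set of model decisions: the demographic parity difference and the disparate impact ratio. It is a simplified illustration, assuming binary favourable/unfavourable outcomes and a single protected attribute; the group labels and sample figures are invented, and no particular threshold is implied as a legal standard.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of favourable outcomes per group.

    `decisions` is an iterable of (group_label, favourable) pairs.
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        favourable[group] += int(bool(outcome))
    return {group: favourable[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Invented sample: 100 decisions per group.
sample = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(sample)
print(rates)
print(demographic_parity_difference(rates))
print(disparate_impact_ratio(rates))
```

Metrics of this kind only flag a disparity; the DPIA still needs to record why the gap exists, whether it is justified, and which mitigation was chosen.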

A significant legal tension arises in this context. The EU AI Act anticipates and permits the processing of special category data (e.g., race, ethnicity) when "strictly necessary" for the purpose of detecting and correcting bias in high-risk AI systems. However, the GDPR generally prohibits the processing of such data unless one of the specific conditions in Article 9 is met, such as explicit consent or substantial public interest. An AI DPIA must confront this legal uncertainty, carefully documenting the chosen legal basis for any such processing and the safeguards put in place to justify it.

5.2 The Transparency and Explainability Dilemma (The 'Black Box' Problem)

Risk: Many of the most powerful AI models, such as deep learning neural networks, function as "black boxes." Their internal decision-making processes are so complex that the precise logic leading to a specific output is often inscrutable, even to the data scientists who designed them. This opacity poses a direct challenge to the GDPR's core principle of transparency (Article 5(1)(a)). It also undermines the safeguards in Article 22(3), which entitle data subjects to "obtain human intervention," to "express his or her point of view," and to contest the decision, as well as the right under Articles 13 to 15 to receive "meaningful information about the logic involved" in automated decisions.

DPIA Consideration: The DPIA must frankly assess the trade-off between the model's predictive accuracy and its interpretability. It is not acceptable to simply state that the model is a black box. The assessment must document what level of explanation can realistically be provided to data subjects, regulators, and internal reviewers. It must explore and document the consideration of less opaque alternatives. Furthermore, it must identify concrete measures to enhance explainability, such as using Explainable AI (XAI) techniques (e.g., LIME, SHAP) to provide post-hoc explanations, or choosing simpler, more inherently transparent models where the risks are high and explainability is paramount. The DPIA must also detail the processes for "meaningful human oversight," ensuring that human reviewers have the necessary information, authority, and competence to challenge and override the AI's decision.
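The option of choosing an inherently transparent model can be illustrated with a simple additive scoring model, where each input's contribution to the decision can be read off directly. This is a sketch under stated assumptions: the feature names, weights, and applicant values are hypothetical, and the technique shown is a plain logistic scoring function, not LIME or SHAP.

```python
import math

# Hypothetical weights for an illustrative credit-scoring model.
WEIGHTS = {"income_k": 0.04, "missed_payments": -0.8, "years_at_address": 0.1}
BIAS = -1.0


def approval_probability(applicant):
    """Logistic score: sigmoid of the bias plus the weighted sum of features."""
    z = BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def ranked_contributions(applicant):
    """Per-feature contribution to the score, largest absolute effect first."""
    parts = [(name, WEIGHTS[name] * applicant[name]) for name in WEIGHTS]
    return sorted(parts, key=lambda item: abs(item[1]), reverse=True)


applicant = {"income_k": 40, "missed_payments": 3, "years_at_address": 2}

print(round(approval_probability(applicant), 3))
for name, contribution in ranked_contributions(applicant):
    direction = "raised" if contribution > 0 else "lowered"
    print(f"{name} {direction} the score by {abs(contribution):.2f}")
```

Because the model is additive, the ranked list is an exact account of how the score was reached, which is the kind of material a human reviewer or a data subject explanation can draw on. Post-hoc tools applied to opaque models provide approximations of this, and the DPIA should record that difference.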

5.3 The Data Appetite Paradox: Data Minimisation and Purpose Limitation

Risk: AI systems are "data-hungry"; their performance and accuracy often improve with access to larger and more varied datasets. This creates a fundamental tension with two core GDPR principles: data minimization (Article 5(1)(c)), which requires that data processing be "adequate, relevant and limited to what is necessary," and purpose limitation (Article 5(1)(b)), which restricts processing to "specified, explicit and legitimate purposes". The drive to maximize data for model training can easily lead to violations of these principles.

DPIA Consideration: The DPIA must rigorously challenge the "more data is better" assumption. It must contain a detailed justification for the volume and variety of data being used for both training and deployment. The assessment must answer critical questions: Is every data field truly necessary to achieve the stated purpose? Could a sufficiently accurate model be trained with less data? Have less privacy-intrusive alternatives, such as using fully anonymized or synthetic data, been considered and, if rejected, why? Regarding purpose limitation, if an organization wishes to repurpose data collected for one purpose (e.g., service delivery) for a new purpose (e.g., training a new AI model), the DPIA must conduct a compatibility assessment or establish a new, valid lawful basis for this secondary processing.

5.4 The Inference Risk: The Spontaneous Creation of New Personal Data

Risk: A defining characteristic of AI is its ability to perform inference—to derive new information about individuals that was not explicitly provided. AI systems can infer highly sensitive information, such as health conditions, political affiliations, sexual orientation, or financial vulnerability, from seemingly innocuous data like browsing history, social media activity, or location patterns. This inferred data is still personal data under the GDPR. If the inferred information qualifies as special category data, its creation and subsequent processing are subject to the strict conditions of Article 9.

DPIA Consideration: The DPIA must be a forward-looking exercise that proactively identifies the potential for the AI system to generate new, inferred data. It cannot limit its scope to only the data that is initially collected. The assessment must analyze whether the creation of such inferred data is an intended purpose of the system or an unintended but foreseeable byproduct. Crucially, the DPIA must establish and document a valid lawful basis for the processing of this newly created personal data, especially if it is of a sensitive nature.

5.5 Data Subject Rights in an AI Context

Risk: The technical architecture of AI systems can create significant practical barriers to upholding fundamental data subject rights. For example, the "right to erasure" (Article 17) becomes highly complex. When an individual's data is used to train a machine learning model, its patterns and correlations become embedded within the model's parameters. Simply deleting the original data record does not erase its influence from the trained model; true erasure would often require costly and complex retraining of the entire model. Similarly, the "right of access" (Article 15) includes, for automated decisions covered by Article 22, a right to "meaningful information about the logic involved" (Article 15(1)(h)), which is challenged by the black box problem.

DPIA Consideration: The DPIA must honestly assess the technical feasibility of fulfilling each data subject right in the context of the specific AI system. It must document the precise procedures for handling such requests. For the right to erasure, this might involve a combination of measures: committing to excluding the data from future retraining cycles, applying output filters to prevent the model from revealing information about the subject, or, in some cases, documenting a compelling argument as to why full erasure is technically infeasible and what alternative measures are being offered to protect the individual's interests. For the right to access and explanation, the DPIA must detail how meaningful information about the AI's logic will be generated and communicated to the data subject in a clear and understandable way.
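Two of the erasure measures mentioned above, exclusion from future retraining cycles and output filtering, can be sketched as follows. This is an illustrative outline only: the record layout, the `subject_id` field, and the function names are assumptions, and neither measure removes the individual's influence from a model that has already been trained.

```python
def next_training_set(records, erased_subject_ids):
    """Drop every record belonging to a data subject on the erasure list."""
    return [r for r in records if r["subject_id"] not in erased_subject_ids]


def filter_output(generated_text, suppressed_terms, placeholder="[redacted]"):
    """Replace suppressed terms in model output (exact, case-sensitive match)."""
    for term in suppressed_terms:
        generated_text = generated_text.replace(term, placeholder)
    return generated_text


# Hypothetical data for illustration.
records = [
    {"subject_id": "s-001", "text": "first example"},
    {"subject_id": "s-002", "text": "second example"},
    {"subject_id": "s-003", "text": "third example"},
]
erasure_list = {"s-002"}

retraining_data = next_training_set(records, erasure_list)
print([r["subject_id"] for r in retraining_data])
print(filter_output("Contact Jane Example for details.", ["Jane Example"]))
```

A DPIA relying on measures like these should state their limits plainly, for instance that an exact-match filter will miss misspellings or paraphrases, and should record when the next full retraining is scheduled.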

Section VI: Conducting the AI DPIA: A Step-by-Step Procedural and Substantive Guide

Operationalizing the principles and risk analyses discussed previously requires a structured, systematic process. The seven-step framework recommended by the UK's ICO provides a robust scaffold for conducting a DPIA. However, for AI systems, each step must be enriched with specific considerations that address the technology's unique characteristics. The DPIA must be treated as a "living document," subject to continuous review, especially given the dynamic nature of machine learning models.

Step 1: Identify the Need for a DPIA

Procedure: The first step is to determine if the processing is "likely to result in a high risk" and therefore requires a DPIA. This is typically done using a screening checklist based on the criteria set out by the GDPR and supervisory authorities.

AI-Specifics: For any project involving AI or machine learning processing personal data, this step is largely a formality to confirm and document the legal obligation. As established in Section IV, AI systems are almost certain to meet the "innovative technology" criterion plus at least one other high-risk factor (e.g., profiling, large-scale processing, combining datasets). The output of this step should be a clear, documented justification for conducting the full DPIA, referencing the specific triggers met (e.g., "This project uses a deep learning model, an innovative technology, to perform large-scale profiling of user behavior, thus meeting two criteria from the EDPB list and mandating a DPIA under Article 35.").

Step 2: Describe the Processing

Procedure: This step involves creating a comprehensive description of the processing activity, mapping data flows, and detailing the nature, scope, context, and purposes of the processing.

AI-Specifics: A generic description is insufficient. The description must be granular and tailored to the AI lifecycle:

Nature of Processing: Go beyond simple verbs like "collect" or "store." Describe the specific AI model architecture (e.g., "a transformer-based large language model," "a random forest classifier"). Detail the distinct phases of the AI lifecycle: data acquisition and preparation, model training, validation and testing, deployment (inference), and ongoing monitoring and retraining.

Scope of Data: Systematically list the categories of personal data processed at each stage. Specify the source of the data (e.g., directly from users, scraped from public sources, third-party providers). Detail the volume of data and the number of data subjects affected. Crucially, document the retention periods not only for the raw data but also for the trained models, logs, and any intermediary data.

Context of Processing: Describe the relationship with the data subjects (e.g., customers, employees, children). Assess their reasonable expectations regarding the use of AI. Consider any wider public concerns or ethical debates surrounding the specific application of AI (e.g., public sentiment on facial recognition or generative AI).

Purposes of Processing: Clearly articulate the specific, explicit, and legitimate purpose the AI system is intended to achieve. If relying on legitimate interests as the lawful basis, this purpose must be clearly defined here.
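One way to keep this description granular and reviewable is to capture it as structured data rather than free text. The sketch below is a hypothetical record format, not a prescribed template: the field names, the list of artefacts requiring a retention period, and the example values are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Artefacts for which the text above asks for a documented retention period.
ARTEFACTS_NEEDING_RETENTION = ("raw_data", "trained_model", "logs", "intermediate_data")


@dataclass
class ProcessingDescription:
    model_architecture: str
    purpose: str
    data_subjects: list[str]
    data_sources: list[str]
    # Categories of personal data per lifecycle stage, e.g. "training" or "inference".
    data_categories_by_stage: dict[str, list[str]] = field(default_factory=dict)
    # Retention period in days per artefact.
    retention_days: dict[str, int] = field(default_factory=dict)

    def missing_retention_periods(self) -> list[str]:
        """Artefacts that still lack a documented retention period."""
        return [a for a in ARTEFACTS_NEEDING_RETENTION if a not in self.retention_days]


description = ProcessingDescription(
    model_architecture="random forest classifier",
    purpose="rank incoming support tickets by urgency",
    data_subjects=["customers"],
    data_sources=["directly from users"],
    data_categories_by_stage={
        "training": ["ticket text", "account tier"],
        "inference": ["ticket text"],
    },
    retention_days={"raw_data": 365, "logs": 90},
)

print(description.missing_retention_periods())
```

A simple completeness check of this kind helps surface the gaps the text warns about, such as a retention period documented for raw data but not for the trained model.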

Step 3: Consultation

Procedure: The GDPR requires consultation with relevant stakeholders. This includes seeking the mandatory advice of the DPO. Where appropriate and feasible, it also involves consulting with data subjects or their representatives, as well as other internal and external experts.

AI-Specifics: For an AI DPIA, the range of necessary consultees expands. Consultation with the AI developers, data scientists, and MLOps engineers is non-negotiable to accurately understand the model's functionality, data dependencies, limitations, and potential for bias. Seeking input from ethicists can help identify and assess broader societal harms. Consultation with data subjects or consumer advocacy groups can be vital for understanding reasonable expectations and the potential for "representational harms" like stereotyping or denigration, which technical teams might overlook.

Step 4: Assess Necessity and Proportionality

Procedure: This step involves a critical evaluation of whether the processing is truly necessary to achieve the stated purpose and whether the chosen method is a proportionate means of doing so.

AI-Specifics: This is a point of intense scrutiny for AI systems. The DPIA must contain documented evidence that less privacy-intrusive alternatives were considered and a clear rationale for their rejection. Alternatives could include using a simpler, rule-based algorithm, achieving the goal with less personal data, or using synthetic or fully anonymized data. The assessment must justify any trade-offs made, for example, arguing that the increased privacy intrusion of using a complex AI model is proportionate to a significant and demonstrable benefit (e.g., a life-saving improvement in diagnostic accuracy) that could not be achieved otherwise.

Step 5: Identify and Assess Risks

Procedure: This involves identifying the potential risks to the rights and freedoms of individuals, then assessing the likelihood and severity of the potential harm for each risk.

AI-Specifics: The risk register must be expanded to explicitly include the AI-centric risks detailed in Section V:

Source of Harm: Discrimination, unfairness due to algorithmic bias.

Source of Harm: Financial loss, denial of opportunity, or psychological distress from incorrect or unexplainable automated decisions.

Source of Harm: Loss of confidentiality or identity theft from re-identification of pseudonymized data.

Source of Harm: Unwarranted intrusion into private life from the creation and use of sensitive inferred data.

Source of Harm: Loss of autonomy and control due to the inability to effectively exercise data subject rights (e.g., erasure, access).

Source of Harm: Risk of physical or psychological harm, or significant social or economic disadvantage.
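The likelihood-and-severity assessment described above can be sketched as a small risk register. This is a minimal illustration, not a prescribed methodology: the `Risk` class, the 1-4 ordinal scales, the multiplication rule, and the rating thresholds are all assumptions made for the example, and the likelihood and severity values assigned to each harm are invented.

```python
from dataclasses import dataclass

# Hypothetical 1-4 ordinal scales; a real DPIA would use the
# organisation's own documented risk methodology.
LIKELIHOOD = {"remote": 1, "possible": 2, "likely": 3, "almost_certain": 4}
SEVERITY = {"minimal": 1, "limited": 2, "significant": 3, "severe": 4}


@dataclass
class Risk:
    source_of_harm: str
    likelihood: str
    severity: str

    @property
    def score(self) -> int:
        # Simple likelihood x severity product (an assumed scoring rule).
        return LIKELIHOOD[self.likelihood] * SEVERITY[self.severity]

    @property
    def rating(self) -> str:
        # Illustrative thresholds only.
        if self.score >= 9:
            return "high"
        if self.score >= 4:
            return "medium"
        return "low"


# Example entries drawn from the harms listed above; the ratings are invented.
register = [
    Risk("Discrimination from algorithmic bias", "likely", "significant"),
    Risk("Re-identification of pseudonymized data", "possible", "severe"),
    Risk("Inability to exercise erasure or access rights", "possible", "limited"),
    Risk("Intrusion from sensitive inferred data", "remote", "significant"),
]

# Print the register with the highest-scoring risks first.
for risk in sorted(register, key=lambda r: r.score, reverse=True):
    print(f"{risk.rating:<6} {risk.score:>2}  {risk.source_of_harm}")
```

Keeping the score and rating as derived properties means the register stores only the assessor's two judgments per risk, which makes later reviews easier to audit.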

Step 6: Identify Measures to Mitigate Risks

Procedure: For each risk identified in the previous step, the controller must identify and document the technical and organizational measures planned to address it. The goal is to reduce the risk to an acceptable level.

AI-Specifics: Mitigation measures must be specifically designed to counter AI risks:

To Mitigate Bias: Implement bias detection and measurement tools during development and monitoring; ensure training data is diverse, representative, and accurate; use fairness-aware machine learning techniques; conduct regular algorithmic audits; establish clear governance for human review of potentially biased outcomes.

To Mitigate Opacity: Implement XAI tools to generate post-hoc explanations; provide individuals with clear, layered explanations of how the AI works and how decisions are made; design and implement robust human-in-the-loop or human-on-the-loop oversight processes with the authority to intervene and override decisions.

To Mitigate Data Appetite: Implement strict data minimization protocols from the outset; use privacy-enhancing technologies (PETs) such as pseudonymization, encryption, differential privacy, and federated learning; use synthetic data for training where feasible; implement robust data filtering to remove unnecessary personal data before training begins.

To Mitigate Security Risks: Conduct adversarial testing to identify vulnerabilities to attacks like data poisoning or model inversion; implement specific security protocols for the AI model and its underlying infrastructure.
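As one concrete instance of the bias measurement mentioned above, the sketch below computes per-group selection rates and the ratio between the lowest and highest rate (often called a disparate impact ratio). It covers only one of many fairness metrics. The function names, group labels, and sample data are hypothetical, and the ratio at which a human review is triggered is a policy choice the controller would need to justify in the DPIA.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favourable outcomes per group.

    `decisions` is an iterable of (group, favourable) pairs, where
    `favourable` is True when the decision benefits the individual.
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        favourable[group] += int(outcome)
    return {g: favourable[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives favourable outcomes, so rates are equal
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, loan approved?)
sample = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

In this invented sample, group B is approved half as often as group A, which is the kind of gap that should be routed to the human review governance described above.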

Step 7: Sign Off and Record Outcomes

Procedure: The final step involves integrating the DPO's advice, obtaining sign-off from appropriate senior management on the identified residual risks, and formally documenting the entire DPIA process and its outcomes. The DPIA must then be kept under regular review.

AI-Specifics: The dynamic nature of AI makes the "regular review" component critical. The DPIA is not a one-time, "fire-and-forget" document. It must be revisited and updated whenever there is a significant change to the AI system—such as retraining the model with a new dataset, a change in the model's architecture, or applying the system to a new use case—as these changes can fundamentally alter the risk profile.
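The review triggers named above can be encoded so that an MLOps pipeline flags a DPIA for review automatically. The following is a sketch under stated assumptions: the `DpiaRecord` class, the event labels, and the 365-day fallback cadence are hypothetical and are not drawn from the GDPR or from regulatory guidance.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Change types the text identifies as altering the risk profile
# (the label strings themselves are hypothetical).
SIGNIFICANT_CHANGES = {
    "retrained_on_new_dataset",
    "architecture_change",
    "new_use_case",
}


@dataclass
class DpiaRecord:
    system_name: str
    last_reviewed: date
    review_interval_days: int = 365  # assumed cadence, not a GDPR figure


def review_reasons(record, change_events, today):
    """Return the reasons, if any, why the DPIA should be revisited."""
    reasons = sorted(set(change_events) & SIGNIFICANT_CHANGES)
    overdue = today - record.last_reviewed > timedelta(days=record.review_interval_days)
    if overdue:
        reasons.append("scheduled_review_overdue")
    return reasons


record = DpiaRecord("credit-scoring-model", last_reviewed=date(2024, 1, 15))
reasons = review_reasons(
    record,
    change_events=["retrained_on_new_dataset", "dependency_upgrade"],
    today=date(2024, 6, 1),
)
print(reasons)  # ['retrained_on_new_dataset']
```

Returning the reasons rather than a bare boolean gives the DPO a record of why each review was opened.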

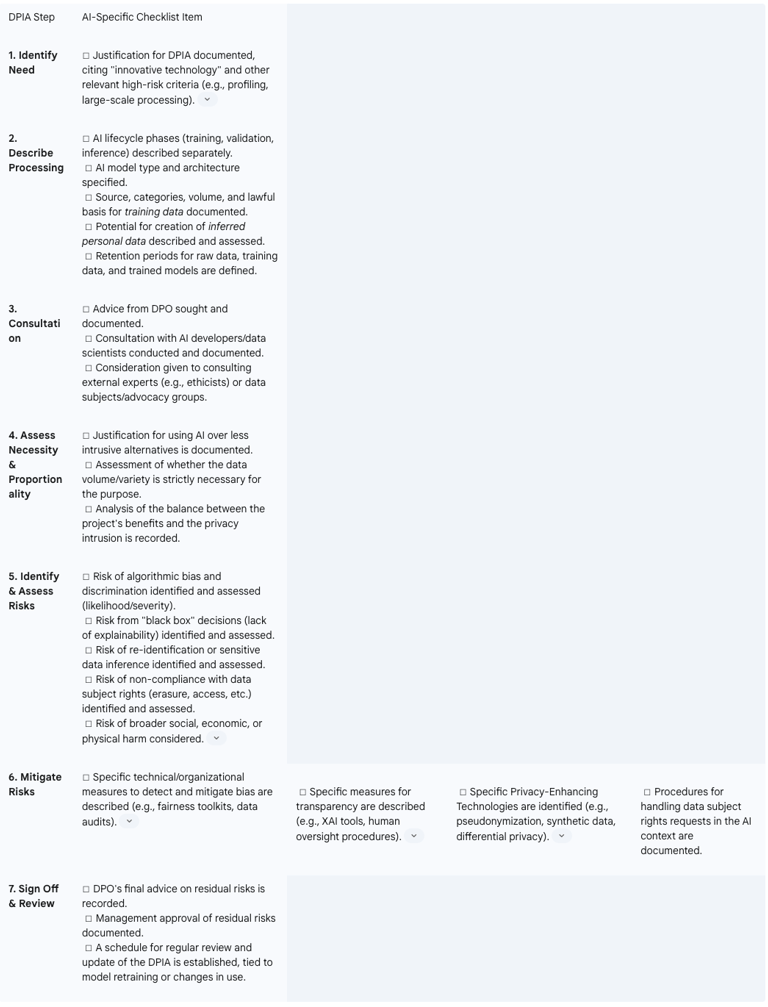

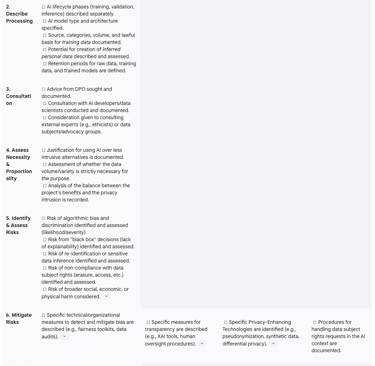

AI-DPIA Content Checklist

The following table provides a practical checklist to ensure an AI DPIA is comprehensive, addressing both standard GDPR requirements and the unique challenges of AI.

Section VII: The Broader Ecosystem: Interplay with the EU AI Act and Future Outlook

The GDPR does not exist in a vacuum. The regulatory landscape for technology is evolving, and the most significant development impacting AI governance is the EU AI Act. This landmark regulation establishes a parallel, risk-based framework for AI systems, creating a dual-compliance environment for organizations. Understanding the interplay between the GDPR's DPIA and the AI Act's new impact assessment requirement is crucial for developing an efficient and holistic governance strategy.

The EU AI Act's Risk-Based Approach

The EU AI Act categorizes AI systems based on their potential risk to health, safety, and fundamental rights. It creates four tiers: unacceptable risk (banned systems), high-risk, limited risk (subject to transparency obligations), and minimal risk. The most stringent obligations apply to "high-risk" AI systems, a category that includes AI used in critical areas such as employment, education, access to essential services, law enforcement, and justice administration. There is a significant and intentional overlap between the systems classified as high-risk under the AI Act and those likely to require a DPIA under the GDPR.

The Fundamental Rights Impact Assessment (FRIA)

For certain deployers of high-risk AI systems, the AI Act mandates the completion of a Fundamental Rights Impact Assessment (FRIA) before the system is deployed. Under Article 27(1), the obligation falls on bodies governed by public law, private entities providing public services, and deployers of specified systems such as those used for creditworthiness evaluation or for risk assessment and pricing in life and health insurance. The purpose of the FRIA is to identify and assess the specific risks the AI system poses to the fundamental rights of individuals or groups who may be affected. This is a broader remit than a pure data protection assessment, explicitly encompassing rights such as non-discrimination, freedom of expression, and the right to an effective remedy, alongside privacy and data protection.

DPIA and FRIA: A Convergent Path

Recognizing the potential for duplicative regulatory burdens, the AI Act provides a practical solution. Article 27(4) of the AI Act provides that where any of the FRIA obligations is already met through a DPIA conducted under Article 35 of the GDPR, the FRIA "shall complement that data protection impact assessment".

This creates a clear path for convergence. While the conceptual focuses of the two assessments differ slightly—the DPIA is centered on risks arising from the processing of personal data, while the FRIA is centered on risks arising from the use of the AI system more broadly—their core components are highly aligned. Both require a description of the system and its purpose, an identification of risks to individuals' rights, and the documentation of mitigation measures.

The practical implication is that organizations should not view these as two separate, siloed tasks. A well-constructed AI DPIA, as described in this report, already incorporates the assessment of risks like discrimination, fairness, and the impact on individual autonomy—all of which are central concerns of a FRIA. Therefore, the DPIA process provides a natural and legally sound foundation for AI Act compliance.

By mastering the AI-specific DPIA under the GDPR, an organization positions itself to meet the impact assessment requirements of the AI Act with greater efficiency. The DPIA is not being superseded; it is evolving into the foundational building block for a more comprehensive AI risk and rights assessment framework. The DPO and legal teams, who are already custodians of the DPIA process, are thus logically positioned at the center of this broader AI governance challenge. The most effective strategy is to design a single, integrated impact assessment template and process that satisfies both regulations. This can be achieved by augmenting the existing DPIA methodology to explicitly name and assess the wider range of fundamental rights as articulated in the EU Charter of Fundamental Rights, thereby fulfilling the FRIA requirement within the established GDPR compliance workflow.

Section VIII: Concluding Analysis and Strategic Recommendations

The deployment of Artificial Intelligence systems that process personal data represents a significant escalation in risk to individual rights and freedoms. The General Data Protection Regulation, through the mandatory Data Protection Impact Assessment under Article 35, provides a robust, if challenging, framework for governing this new technological frontier. The analysis throughout this report demonstrates that for nearly all AI systems, the DPIA is not a discretionary choice but a legal imperative. Furthermore, the unique characteristics of AI—its data appetite, its opacity, its capacity for bias and inference—demand that this assessment be substantively different from and more rigorous than those conducted for traditional technologies.

The key legal obligations and practical challenges can be summarized as follows:

The legal distinction between a PIA and a DPIA is paramount; the latter is a legally enforceable requirement under GDPR focused on risks to individuals.

The criteria for high-risk processing, as defined in Article 35 and elaborated by the ICO and EDPB, are almost always met by AI systems, making the DPIA a default requirement.

A compliant AI DPIA must systematically address novel risks including algorithmic bias, the 'black box' problem, purpose limitation conflicts, the creation of inferred sensitive data, and technical challenges to data subject rights.

The emerging EU AI Act complements the GDPR, requiring a Fundamental Rights Impact Assessment that can and should be integrated into the DPIA process, making the DPIA the cornerstone of broader AI governance.

To navigate this complex landscape effectively, organizations must move beyond a check-the-box compliance mentality and adopt a strategic, proactive approach to AI governance. The following recommendations provide a path forward for legal and compliance leaders:

Embed DPIAs into the AI Lifecycle: The AI DPIA should not be a bureaucratic gate that a project must pass through at the end of its development. Instead, it must be an iterative, living document that begins at the project's conception—the "problem formulation" stage—and is continuously updated through development, training, deployment, and eventual decommissioning. This "privacy by design" approach ensures that risks are identified and mitigated early, when changes are less costly and more effective.

Establish Cross-Functional AI Governance Teams: A successful AI DPIA cannot be conducted in a silo by the legal or privacy team alone. It requires deep, collaborative engagement between legal counsel, the DPO, data scientists, software engineers, MLOps specialists, and business stakeholders. Each group brings a critical perspective needed to understand the multifaceted risks of AI, from the technical nuances of an algorithm to its real-world impact on customers.

Develop a Unified DPIA/FRIA Framework: Rather than waiting for the AI Act's obligations to come into full force and creating a separate process, organizations should act now to develop a single, integrated impact assessment framework. This framework should use the GDPR DPIA as its foundation and expand its scope to explicitly assess the broader set of fundamental rights required by the FRIA. This unified approach will prevent redundant work, ensure consistency, and streamline the compliance process for high-risk AI systems.

Invest in Transparency and Explainability: Organizations should not accept the "black box" problem as an insurmountable technical barrier. Active investment in Explainable AI (XAI) technologies, model-agnostic interpretation tools, and robust human oversight processes is a strategic necessity. The ability to provide meaningful explanations for AI-driven decisions will be a key factor in demonstrating accountability to regulators and building trust with individuals.

Prioritize Continuous Monitoring and Auditing: An AI model is not a static piece of software. Its performance can drift over time, it can be retrained on new data, and it can be repurposed for new uses. Each of these events can change its risk profile. Organizations must implement a formal, regular cadence for reviewing and updating AI DPIAs, particularly after significant model updates. This ensures the assessment remains an accurate reflection of the current risks and that mitigation measures remain effective.
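One way to detect the drift mentioned in the last recommendation is to compare the distribution of a model score or input feature at deployment with a recent sample. The sketch below uses the Population Stability Index (PSI), a common monitoring statistic that the report itself does not prescribe. The function name, the equal-width binning, and the sample data are assumptions, and the PSI value that should trigger a DPIA review is left to the controller's policy.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model scores: a baseline and a sample shifted upwards.
baseline = [i / 100 for i in range(100)]
shifted = [min(v + 0.3, 0.99) for v in baseline]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
print(psi_same, round(psi_shifted, 3))
```

An identical sample yields a PSI of zero, and larger values indicate a larger distribution shift. A monitoring job could log this figure alongside each model release as evidence for the next DPIA review.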

By adopting these strategic recommendations, organizations can transform the AI DPIA from a perceived regulatory obstacle into a powerful tool for responsible innovation, enabling them to harness the benefits of artificial intelligence while upholding their fundamental commitment to the protection of personal data and human rights.

FAQ

What is the primary purpose of a Data Protection Impact Assessment (DPIA) under GDPR for AI systems?

The primary purpose of a DPIA under the General Data Protection Regulation (GDPR) for Artificial Intelligence (AI) systems is to identify, comprehend, and mitigate the heightened risks posed by AI to the fundamental rights and freedoms of individuals. It is not merely a compliance exercise but a mandatory legal and ethical mechanism. Unlike a general Privacy Impact Assessment (PIA), which often focuses on risks to the organisation, the DPIA legally re-centres the assessment on potential harm to the individual.

Why is a DPIA almost always mandatory for AI systems that process personal data?

A DPIA is almost invariably mandatory for AI systems processing personal data because AI characteristics inherently align with the GDPR's "high-risk" criteria. Article 35 of the GDPR explicitly mentions "new technologies," directly implicating AI. Furthermore, guidance from the European Data Protection Board (EDPB) and the UK's Information Commissioner's Office (ICO) specifies that AI, machine learning, and deep learning are "innovative technologies." When combined with other high-risk factors common to AI, such as large-scale profiling, automated decision-making with significant effects, or processing of sensitive data, a DPIA becomes a default requirement.

How does a Data Protection Impact Assessment (DPIA) for AI systems differ from a standard DPIA or a general Privacy Impact Assessment (PIA)?

A Data Protection Impact Assessment (DPIA) for AI systems differs significantly from a standard DPIA or a general Privacy Impact Assessment (PIA) in its scope and focus. While a PIA is a flexible, best-practice tool often focused on organisational risks, a DPIA is a mandatory legal requirement under GDPR Article 35, specifically concerned with risks to individuals' fundamental rights and freedoms. For AI, this distinction is amplified: a standard DPIA is insufficient because AI introduces novel risks like algorithmic bias, the "black box" problem (opacity), inherent data appetites conflicting with data minimisation, the spontaneous creation of inferred data, and technical challenges to upholding data subject rights (e.g., the right to erasure). An AI DPIA must be augmented to systematically assess and mitigate these unique, complex, and often unpredictable risks.

What are the key AI-specific risks that a DPIA must address?

A comprehensive AI DPIA must specifically address several unique risks:

Fairness, Bias, and Discrimination: AI models can perpetuate and amplify societal biases from their training data, leading to unfair and discriminatory outcomes in critical areas like employment or credit.

Transparency and Explainability (The 'Black Box' Problem): Many powerful AI models are opaque, making their internal decision-making inscrutable. This challenges the GDPR's transparency principle and data subjects' right to understand automated decisions that affect them.

Data Appetite Paradox (Data Minimisation and Purpose Limitation): AI's need for large, varied datasets often conflicts with GDPR principles of collecting only necessary data for specified purposes.

Inference Risk (Creation of New Personal Data): AI can infer new, potentially highly sensitive personal data (e.g., health conditions, political views) from seemingly innocuous information, requiring a valid lawful basis for its processing.

Data Subject Rights Challenges: The technical architecture of AI systems can make it difficult to uphold rights like erasure (as data patterns are embedded in the model) or access (due to the black box problem).

What are the minimum content requirements for an AI DPIA as per GDPR Article 35(7)?

As per GDPR Article 35(7), an AI DPIA must contain:

A systematic description of the envisaged processing operations and their purposes, including details of the AI model architecture and lifecycle (training, validation, inference).

An assessment of the necessity and proportionality of the processing operations in relation to their purposes, justifying the use of AI over less intrusive alternatives and the volume of data used.

An assessment of the risks to the rights and freedoms of data subjects, specifically identifying and evaluating AI-centric harms like algorithmic bias, opacity, and the creation of inferred data.

The measures envisaged to address these risks, including safeguards, security measures, and mechanisms to ensure data protection and demonstrate compliance, such as bias detection tools, Explainable AI (XAI) techniques, Privacy-Enhancing Technologies (PETs), and robust human oversight.

What role does the Data Protection Officer (DPO) play in an AI DPIA?

The Data Protection Officer (DPO) plays a crucial, mandatory role in an AI DPIA. Article 35(2) of the GDPR requires the controller to "seek the advice of the data protection officer, where designated," when carrying out a DPIA. The DPO provides expert guidance on whether a DPIA is necessary, advises on the appropriate methodology, and assesses whether the proposed mitigation measures are sufficient to address identified risks. If a DPIA reveals a high residual risk that cannot be mitigated, Article 36 obliges the controller to consult the relevant supervisory authority before processing begins, a process in which the DPO typically plays a central part.

How does the EU AI Act interact with the GDPR's DPIA requirements?

The EU AI Act introduces a complementary Fundamental Rights Impact Assessment (FRIA) for high-risk AI systems. Recognizing the potential for duplicative burdens, Article 27(4) of the AI Act provides that where any of the FRIA obligations is already met through a DPIA conducted under GDPR Article 35, the FRIA "shall complement that data protection impact assessment." This means a robust, well-executed AI DPIA under GDPR can serve as the foundational framework for satisfying the broader compliance obligations of the AI Act. Organisations should aim for a unified, integrated impact assessment template that assesses both data protection risks and broader fundamental rights.

What strategic recommendations are given for organisations to effectively manage AI-related data protection risks?

To effectively manage AI-related data protection risks, organisations are recommended to:

Embed DPIAs into the AI Lifecycle: Treat the AI DPIA as an iterative, "living document" that begins at project conception and is continuously updated through development, training, deployment, and decommissioning, ensuring a "privacy by design" approach.

Establish Cross-Functional AI Governance Teams: Foster deep collaboration between legal, DPO, data scientists, engineers, and business stakeholders to understand and address multifaceted AI risks.

Develop a Unified DPIA/FRIA Framework: Integrate the requirements of the EU AI Act's Fundamental Rights Impact Assessment into the existing GDPR DPIA process to create a single, efficient, and comprehensive assessment.

Invest in Transparency and Explainability: Actively invest in Explainable AI (XAI) technologies, model-agnostic interpretation tools, and robust human oversight processes to address the "black box" problem and build trust.

Prioritise Continuous Monitoring and Auditing: Implement a formal, regular cadence for reviewing and updating AI DPIAs, especially after significant model updates or changes in use, as these can alter the risk profile.