Real-World GDPR Enforcement: Fines and Lessons

This report delves into real-world GDPR enforcement actions, focusing on landmark fines to extract critical lessons for organizations navigating this complex regulatory landscape.

The General Data Protection Regulation (GDPR) has profoundly reshaped global data privacy practices since it became applicable in May 2018. Its robust enforcement mechanism, spearheaded by national Data Protection Authorities (DPAs) and coordinated by the European Data Protection Board (EDPB), has resulted in significant financial penalties and stringent compliance orders.

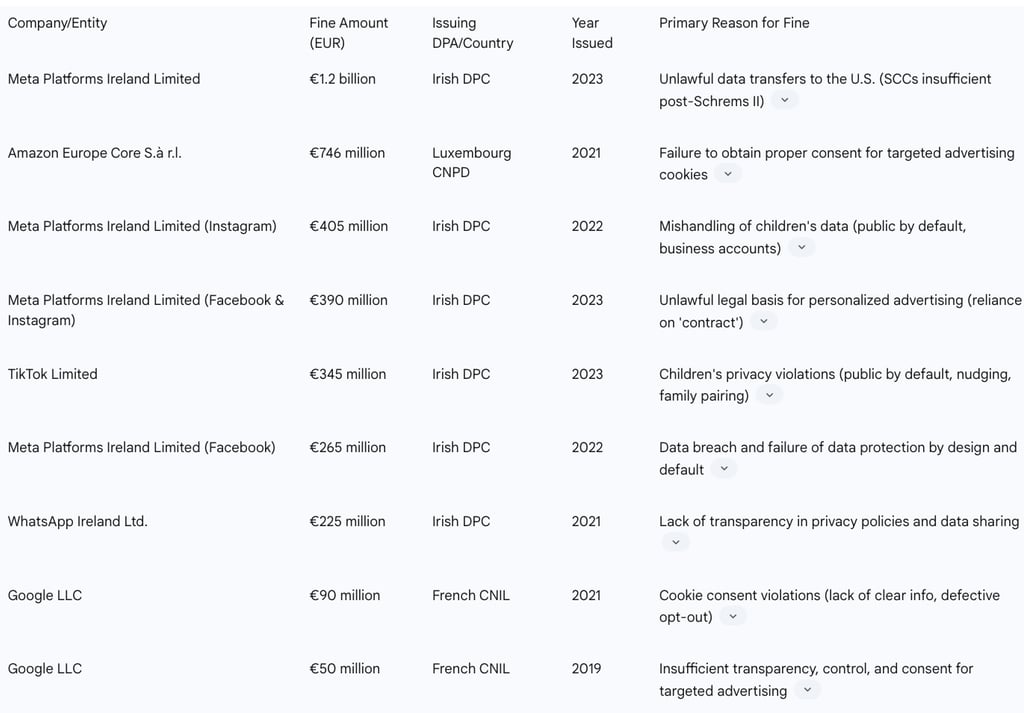

The report highlights a clear trend of escalating fines, particularly against major tech companies like Meta, Amazon, and TikTok, with the largest single fine reaching €1.2 billion. These penalties predominantly stem from violations related to international data transfers, inadequate consent for targeted advertising, insufficient protection of children's data, and failures in implementing privacy by design and default.

The analysis reveals that core GDPR principles—transparency, lawfulness of processing, data minimization, and accountability—are consistently at the heart of enforcement actions. Companies face challenges in interpreting and applying these principles, especially in dynamic areas like cross-border data flows and personalized advertising.

Proactive and continuous compliance is paramount. Organizations must prioritize transparent data practices, validate legal bases for processing, fortify data security, and embed privacy by design into all operations. Furthermore, the increasing focus on executive personal liability underscores the need for robust leadership oversight and a culture of accountability.

Introduction: The Evolving Landscape of GDPR Enforcement

This report aims to move beyond a mere enumeration of GDPR fines, offering an in-depth analysis of the circumstances, specific GDPR articles violated, and the regulatory reasoning behind the most significant penalties. By dissecting these real-world cases, this analysis seeks to provide actionable guidance for businesses to enhance their data protection posture and mitigate compliance risks.

The GDPR establishes a multi-layered enforcement framework. National Data Protection Authorities (DPAs) in each EU Member State are primarily responsible for investigating and correcting violations and levying penalties. These authorities possess broad investigative powers, including conducting audits, performing on-site inspections, and accessing premises and data. They also have corrective powers, such as issuing warnings, reprimands, restricting or banning data processing, ordering data rectification or deletion, suspending data transfers, and imposing administrative fines. The European Data Protection Board (EDPB) plays a crucial role in ensuring consistent application of the GDPR across the EU, particularly for cross-border processing activities, through its consistency mechanism, which involves dispute resolution procedures under Article 65. This cooperation and consistency mechanism is vital for ensuring uniform enforcement across the diverse legal landscapes of EU member states.

Understanding the patterns and nuances of GDPR enforcement is critical for proactive compliance. It allows organizations to anticipate areas of regulatory scrutiny, learn from the experiences of others, and adapt their data processing practices to avoid costly penalties, reputational damage, and operational disruption. The escalating scale of fines and the broadening scope of enforcement demonstrate that GDPR compliance is not a static endeavor but an ongoing, dynamic requirement that demands continuous vigilance and adaptation.

Analysis of Landmark GDPR Fines: Case Studies

This section provides a detailed examination of the most significant GDPR fines, dissecting the specific violations, the regulatory context, and the broader implications for businesses.

Table 1: Top GDPR Fines by Amount (2018-Present)

Meta Platforms Ireland Limited (€1.2 Billion): The Precedent of International Data Transfers

On May 22, 2023, the Irish Data Protection Commission (DPC) imposed a record-breaking €1.2 billion fine on Meta Ireland for unlawful transfers of personal data from the EU/EEA to the United States. This decision followed an inquiry into Meta's Facebook service, specifically regarding its reliance on Standard Contractual Clauses (SCCs) in conjunction with supplementary measures for data transfers since July 16, 2020. The DPC found these arrangements insufficient to address the requirements stemming from the Court of Justice of the EU's Schrems II judgment.

The primary violation concerned Chapter V of the GDPR, which governs transfers of personal data to third countries. The EDPB found the infringement "very serious" due to its systematic, repetitive, and continuous nature, involving a massive volume of personal data from millions of European users.

The record fine was a direct result of a binding dispute resolution decision by the European Data Protection Board (EDPB) on April 13, 2023. The Irish DPC's initial draft decision had not included a fine, but supervisory authorities in other countries raised objections, leading to the EDPB's intervention under Article 65(1)(a) of the GDPR. The EDPB instructed the DPC to impose a fine, suggesting a starting point between 20% and 100% of the applicable legal maximum, given the gravity of the infringement. Meta was also ordered to suspend any future transfers of personal data to the U.S. within five months and cease unlawful processing, including storage, in the U.S. of personal data of EEA users transferred in violation of the GDPR, within six months. Meta has announced its intention to appeal the decision.
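The arithmetic behind the EDPB's instruction can be sketched as follows. Article 83(5) GDPR caps fines for this class of infringement at €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher; the 20% to 100% band is the starting-point range quoted above. The function names and the turnover figure are hypothetical and purely illustrative, and the sketch does not reproduce the DPC's actual calculation, which also weighs aggravating and mitigating factors.

```python
from decimal import Decimal

# Article 83(5) GDPR ceiling: EUR 20 million or 4% of total worldwide
# annual turnover of the preceding financial year, whichever is higher.
FIXED_CAP_EUR = Decimal("20000000")
TURNOVER_RATE = Decimal("0.04")


def legal_maximum(annual_turnover_eur: Decimal) -> Decimal:
    """Return the Article 83(5) ceiling for a given annual turnover."""
    return max(FIXED_CAP_EUR, TURNOVER_RATE * annual_turnover_eur)


def starting_point_range(
    annual_turnover_eur: Decimal,
    low: Decimal = Decimal("0.20"),
    high: Decimal = Decimal("1.00"),
) -> tuple[Decimal, Decimal]:
    """Return the (low, high) starting-point band as a share of the ceiling.

    The 20%-100% defaults mirror the band the EDPB suggested in this case.
    """
    cap = legal_maximum(annual_turnover_eur)
    return low * cap, high * cap


# Hypothetical turnover of EUR 100 billion, for illustration only.
band_low, band_high = starting_point_range(Decimal("100000000000"))
print(f"Starting point between EUR {band_low:,.0f} and EUR {band_high:,.0f}")
```

For a small undertaking the fixed €20 million cap dominates, which is why the function takes the maximum of the two limits rather than applying the percentage alone.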

This substantial penalty underscores the enduring impact of the Schrems II judgment and highlights the fragility of Standard Contractual Clauses (SCCs) as standalone mechanisms for international data transfers. The Schrems II ruling invalidated the EU-U.S. Privacy Shield and significantly questioned the sufficiency of SCCs without additional safeguards to protect data from U.S. surveillance laws. This fine demonstrates that regulators may find SCCs inadequate even when they are paired with supplementary measures, if those measures do not actually address the risks posed by the recipient country's surveillance laws. For transfers made without an adequacy decision, the legal footing was therefore highly precarious, compelling companies to either cease such transfers, seek alternative mechanisms (such as the subsequently adopted EU-U.S. Data Privacy Framework), or operate under substantial regulatory risk. The sheer magnitude of the fine signals regulators' commitment to upholding the Schrems II ruling and establishes a critical precedent for any organization engaged in cross-border data transfers.

Furthermore, the fact that the Irish DPC's initial draft decision did not include a fine, and the record penalty only materialized after objections from other concerned supervisory authorities (CSAs) and a binding EDPB decision, illuminates the crucial role of the EDPB as an escalation and consistency mechanism. The EDPB acts as a powerful check and balance, ensuring a consistent and often more stringent application of GDPR across the EU, especially for complex, cross-border cases involving major players. This mechanism prevents "forum shopping" by companies, where they might seek a more lenient DPA, and ensures that the lead DPA does not issue unduly mild decisions, effectively increasing the fines and compliance requirements.

Amazon Europe Core S.à r.l. (€746 Million): The Imperative of Valid Consent for Advertising

On July 16, 2021, the Luxembourg National Commission for Data Protection (CNPD) imposed a €746 million fine on Amazon Europe Core S.à r.l. The fine stemmed from complaints brought by a French advocacy group alleging that Amazon failed to obtain clear permission from users before processing personal data for targeted advertising, specifically concerning the storage of advertisement cookies. The decision, upheld by the Luxembourg Administrative Tribunal on March 18, 2025, concluded that Amazon violated transparency standards and failed to meet its information obligations, infringing upon individuals' rights to object to data processing.

The core violations related to transparency and consent requirements under GDPR, particularly Article 6 (lawfulness of processing) and potentially the articles on information provision (e.g., Art. 12, 13), as well as the ePrivacy Directive (Cookie Law). Amazon failed to provide clear information on data usage and did not prompt users for consent for data processing. The CNPD's ruling emphasized that Amazon did not obtain "freely given" consent for cookies and failed to clearly inform users about data processing. The decision underscored the importance of explicit consent and transparency for targeted advertising practices. Amazon appealed the decision, asserting that its practices align with customer privacy.

This significant penalty, while often attributed to GDPR, explicitly involved violations related to "freely given" consent for "advertisement cookies" and was preceded by a French CNIL fine under the ePrivacy Directive. This highlights that GDPR's consent requirements (Article 6) are heavily influenced by and frequently overlap with the ePrivacy Directive ("Cookie Law") when it comes to online tracking technologies. The fine demonstrates that implied consent or pre-ticked boxes for non-essential cookies are unacceptable, and that consent must be granular, informed, and unambiguous. The "freely given" aspect means users must have a genuine choice, without coercion or negative consequences for refusal. This case serves as a clear indication that organizations must meticulously design their cookie consent mechanisms to ensure active, informed user agreement for all non-essential data processing.

The Amazon case originated from roughly 10,000 complaints filed through the French advocacy group La Quadrature du Net. This demonstrates that organized privacy advocacy groups and a high volume of individual complaints can be a significant catalyst for DPA investigations and major enforcement actions. Companies cannot afford to ignore widespread user dissatisfaction or activist campaigns, as these can directly translate into regulatory scrutiny and severe financial penalties. This also suggests that implementing user-friendly complaint mechanisms and being responsive to user concerns are not merely good practices but essential components of a robust risk mitigation strategy.

Meta Platforms Ireland Limited (Instagram) (€405 Million): Protecting Children's Data Privacy

In September 2022, the Irish DPC fined Instagram €405 million for breaching GDPR in relation to the handling of children's data. The investigation, which began in September 2020, focused on two key issues: children (aged 13-17) being allowed to activate "business accounts" with default privacy settings that publicly disclosed their personal contact information (e.g., phone numbers, email addresses), and personal Instagram accounts of children being "public by default" during the registration process.

The core violation was the unlawful processing of children's personal data without a valid legal basis, specifically infringing Article 6(1) GDPR. Meta had relied on "performance of contract" (Art. 6(1)(b)) and "legitimate interest" (Art. 6(1)(f)) as legal bases, both of which the EDPB found invalid for the public disclosure of children's data.

The DPC's decision was influenced by a binding dispute resolution decision from the EDPB (July 28, 2022), which followed objections from several concerned supervisory authorities regarding the legal basis and fine determination. The EDPB explicitly stated that the processing was not necessary for contract performance and failed the balancing test for legitimate interest. This was the second highest GDPR fine at the time and the first EU-wide decision specifically on children's data protection rights, sending a strong signal that "companies targeting children have to be extra careful".

This fine, explicitly labeled as the "first EU-wide decision on children's data protection rights," clearly establishes children's data as a priority enforcement area for GDPR regulators. This indicates that organizations offering services or products likely to be accessed by children must exercise heightened diligence and implement specific safeguards. The regulatory focus extends beyond mere compliance with general data processing principles to a more protective stance, recognizing the particular vulnerabilities of minors. This means that platforms must proactively design their services with children's best interests at the forefront of their data handling practices.

The regulatory expectation for "privacy by default" and "privacy by design" for vulnerable user groups, such as children, is strongly affirmed by this case. The finding that children's accounts were "public by default" and that "business accounts" exposed their contact information directly contradicts the principle of privacy by design, which mandates that data protection measures be integrated into the design of processing systems from the outset. This demonstrates that merely offering privacy settings is insufficient; rather, the default settings must be the most privacy-protective, particularly for children. The decision emphasizes that companies bear the responsibility to implement technical and organizational measures that ensure data protection principles are met by default, without requiring active user intervention to secure their privacy.

Other Significant Fines and Emerging Trends

Beyond the largest penalties, a consistent pattern of enforcement actions highlights critical areas of GDPR compliance.

Meta Platforms Ireland Limited (Facebook & Instagram) (€390 Million): Legal Basis for Personalized Advertising

On January 4, 2023, the Irish Data Protection Commission (DPC) fined Meta a combined €390 million for breaches related to its personalized advertising practices on Facebook and Instagram. The investigation stemmed from complaints alleging that Meta "forced" users to consent to personal data processing for behavioral advertising by conditioning access to services on accepting updated Terms of Service. Meta had asserted that users entering into a contract by accepting these terms allowed it to rely on "performance of a contract" as a legal basis for processing personal data for advertising.

The DPC's investigation found that Meta’s practices violated Article 5(1)(a) of the GDPR, which requires personal data to be processed lawfully, fairly, and in a transparent manner, as its Terms of Use failed to clearly disclose data processing activities, purposes, and legal bases. Crucially, the European Data Protection Board (EDPB), after consultation, explicitly held that Meta could not continue to rely on "performance of a contract" as a legal basis for behavioral advertising. The EDPB also directed the DPC to conduct a separate investigation into the processing of special categories of data. Meta was directed to bring its data processing activities into compliance within three months.

This ruling represents a strict interpretation of "performance of contract" as a legal basis for processing personal data for personalized advertising. The regulatory bodies determined that behavioral advertising, while potentially part of a service, is not inherently "necessary" for the performance of the core contract (i.e., providing a social media platform). This means that companies cannot bundle consent for essential service provision with consent for personalized advertising under the guise of contractual necessity. This decision sets a clear precedent that if personalized advertising is not strictly essential to the primary service, a separate, explicit, and freely given consent is required.

The implications for behavioral advertising models across the EU are profound. This ruling effectively dismantles the practice of "forced consent" by requiring a clear "yes/no" option for personalized ads, with users retaining the ability to change their minds at any time. This regulatory stance compels advertisers and platforms to re-evaluate their data collection and processing strategies, moving away from reliance on broad contractual terms to secure user data for advertising. It necessitates a shift towards more transparent and granular consent mechanisms, ensuring that users genuinely opt-in to personalized experiences, thereby establishing a more level playing field with other advertisers who already adhere to opt-in consent models.

TikTok Technology Limited (€345 Million): Children's Privacy and Platform Design

On September 15, 2023, the Irish Data Protection Commission (DPC) fined TikTok Technology Limited €345 million for GDPR violations related to the processing of children's personal data. The investigation, initiated in September 2021, focused on TikTok's default public settings for child profiles, inadequate information provision, and issues with its "Family Pairing" feature.

The DPC found that the default setting for children's accounts was public, meaning any TikTok user or even non-members could view their identity, account data, and videos. Children had to actively opt out to choose a private setting, and TikTok failed to provide understandable information about the consequences of public settings, using ambiguous wording. The German supervisory authority specifically noted that TikTok was "nudging" users towards privacy-negative settings, which was deemed an infringement of the fairness principle (Art. 5(1)(a) GDPR). Additionally, the "Family Pairing" option lacked a verification process, allowing any user to assign themselves as a "Parent user". While age verification for users under 13 was also examined, an infringement could not be concluded for the specific period due to insufficient information on the "state of the art" standard. TikTok was ordered to bring its data processing into compliance within three months.

The specific design flaws, such as the "public by default" setting and the "nudging" of users towards privacy-intrusive options, directly led to this substantial fine. The regulatory determination was that these design choices violated fundamental GDPR principles, including fairness (Article 5(1)(a) GDPR) and the requirements for clear information (Articles 12 and 13 GDPR). This demonstrates that the manner in which a platform is designed, particularly its default settings and user interface, is under direct regulatory scrutiny. Companies must ensure that their design actively promotes and preserves user privacy, especially for vulnerable populations like children, rather than subtly encouraging data sharing.

This case has broad implications for platforms popular with minors, emphasizing the critical need for age-appropriate design and robust age verification processes. Although an infringement on age verification was not concluded in this specific case, the DPC's detailed analysis of the issue highlights its importance. The regulatory focus is on ensuring that platforms not only comply with the age limits stated in their terms of service but also implement effective technical and organizational measures to prevent underage users from accessing the platform or from having their data processed inappropriately. This means that companies must invest in sophisticated age assurance mechanisms and design their services to inherently protect children's data, reflecting the specific protections afforded to minors under GDPR.

Meta Platforms Ireland Limited (Facebook) (€265 Million): Data Breach and Privacy by Design Failures

On November 28, 2022, the Irish Data Protection Commission (DPC) fined Meta €265 million following a data breach that saw personal details of over half a billion Facebook users leaked online. The DPC's investigation, launched in April 2021, focused on a dataset of 533 million users that appeared on an online hacking forum. Meta stated the data was "scraped" rather than hacked, exploiting a vulnerability in its tools prior to September 2019, which allowed automated software to extract public information from the site.

The DPC found Meta in breach of Article 25 of the GDPR, which covers data protection by design and by default. The regulatory reasoning for the significant sanction was based on the large size of the data set and previous instances of scraping on the platform, which indicated that the issues could have been identified and addressed in a more timely way. In addition to the fine, Meta was reprimanded and ordered to take remedial actions to bring its processing into compliance. Meta cooperated with the DPC and stated it had made changes to its systems to remove the ability to scrape its features using phone numbers.

This case emphasizes the stringent accountability for data scraping and the critical importance of "privacy by design and default" in preventing breaches. The DPC's finding that Meta breached Article 25 GDPR underscores that companies are expected to implement appropriate technical and organizational measures to ensure data protection from the very outset of processing operations and by default. This means that vulnerabilities, even those exploited through "scraping" of publicly available data, are viewed as failures in proactive security and design, rather than merely external attacks. The expectation is that systems are built to inherently resist such data extraction, illustrating a shift from reactive incident response to proactive risk mitigation through design.

The regulatory expectation for continuous security improvements and responsiveness to identified vulnerabilities is clearly demonstrated. The DPC noted that the issues leading to the breach could have been identified "in a more timely way," given previous instances of scraping. This highlights that organizations are not only responsible for addressing known vulnerabilities but also for maintaining an ongoing vigilance and adapting their security posture in response to evolving threats and past incidents. It implies a duty to continuously audit, assess, and enhance security measures, ensuring that lessons from prior incidents, whether internal or industry-wide, are integrated into their data protection framework.

WhatsApp Ireland Ltd. (€225 Million): Transparency in Privacy Policies

On September 2, 2021, the Irish Data Protection Commission (DPC) announced a €225 million fine against WhatsApp Ireland Ltd. for failing to meet the transparency requirements of Articles 12-14 of the GDPR. This fine represented a more than four-fold increase from the DPC's initial proposed fine, following a binding dispute resolution decision by the EDPB.

The DPC's investigation, which began in December 2018, found that WhatsApp failed to provide sufficiently clear, transparent, or adequate information about its processing activities to both users and non-users. Specific deficiencies included a lack of granularity in identifying the legal basis for each processing activity (Art. 13(1)(c) GDPR) and insufficient clarity regarding data transfers to non-EEA jurisdictions (Art. 13(1)(f) GDPR). The EDPB also found that the cumulative effect of these failures resulted in a breach of the overarching transparency principle under Article 5(1)(a) GDPR. WhatsApp was ordered to bring its processing activities into compliance within three months and update its privacy notices.

This case underscores the stringent requirements for transparency and clarity in privacy notices. The DPC's finding that WhatsApp failed to provide "appropriately clear, transparent or sufficient information" and to identify the legal basis with "sufficient granularity" demonstrates that vague or overly broad language is insufficient. Privacy notices must be detailed enough to allow data subjects to understand precisely how their data is processed, for what purposes, and under what legal basis. The ruling also highlighted that information should be "easily accessible," meaning users should not have to navigate multiple, potentially inconsistent, linked documents to piece together their privacy rights. This sets a high bar for user-friendly and comprehensive privacy communications.

The DPC's role as the lead supervisory authority and the EDPB's influence in escalating fines is prominently featured in this case. The initial proposed fine was significantly increased after objections from other EU regulators and a binding EDPB decision. This illustrates the cooperation mechanism under GDPR Article 60, under which cross-border cases are reviewed by multiple DPAs, backed by binding dispute resolution under Article 65. The EDPB's intervention, which included considering the consolidated turnover of WhatsApp's parent company (Facebook Inc.) for fine calculation, demonstrates its power to ensure that penalties are "effective, proportionate and dissuasive" across the entire undertaking, regardless of the lead DPA's initial assessment. This reinforces that large multinational corporations cannot expect leniency based on their primary establishment's DPA alone.

Google LLC (€50 Million & €100 Million): Consent for Advertising and Cookies

Google has faced multiple significant fines from the French CNIL related to consent for advertising and cookies. In January 2019, Google LLC was fined €50 million for breaches of GDPR, specifically for insufficient transparency, inadequate information, and lack of valid consent concerning targeted advertising. The CNIL found that required information was not easily accessible, being "excessively disseminated" and requiring too many clicks, and that consent obtained was not valid because it was neither specific nor unambiguous.

Later, in December 2020, Google LLC and Google Ireland Ltd. were collectively fined €100 million by the CNIL for cookie consent violations. This fine was imposed for depositing cookies on users' devices without prior consent or sufficient information. The CNIL's investigation revealed that non-essential marketing cookies were automatically installed without consent, the cookie banner provided inadequate information, and the opt-out mechanism was partially defective. The Conseil d'Etat upheld this fine, noting the significant profits from advertising cookies and Google's market position.

These rulings unequivocally prohibit the use of pre-ticked boxes and emphasize the need for clear, informed, and unambiguous consent for cookies, particularly for advertising purposes. The CNIL's findings that cookies were automatically installed without consent and that information provided was inadequate or misleading demonstrate that implied consent or default settings are unacceptable for non-essential cookies. Users must be given a genuine choice, presented clearly and concisely, before any non-essential tracking technologies are deployed. This means that cookie banners must not only inform users but also provide easily actionable options to accept or refuse, and any refusal mechanism must be fully effective.

The continuous regulatory scrutiny on ad-tech and online tracking remains a prominent trend. The repeated fines against Google for issues related to advertising and cookies, even after initial enforcement actions, underscore that this area is a persistent focus for data protection authorities. This indicates that the ad-tech industry's complex data flows and reliance on user tracking will continue to be subject to rigorous examination. Companies operating in this space must anticipate ongoing regulatory pressure to demonstrate strict adherence to consent requirements, transparency obligations, and the principles of data minimization in their advertising practices. This necessitates a proactive and adaptive approach to compliance, rather than a reactive one.

Lessons Learned and Strategic Recommendations for Businesses

The analysis of significant GDPR fines reveals recurring themes and offers critical lessons for organizations aiming to enhance their data protection posture.

Prioritize Lawfulness, Fairness, and Transparency

The foundational principles of GDPR, particularly lawfulness, fairness, and transparency (Article 5(1)(a) GDPR), are consistently at the core of enforcement actions. Organizations must ensure that every data processing activity has a clear, valid legal basis, whether it be consent, contractual necessity, legitimate interest, or another lawful ground under Article 6(1). The Meta €390 million fine demonstrates that relying on "performance of contract" for personalized advertising is likely to be deemed unlawful, pointing organizations toward valid consent instead. Furthermore, transparency is paramount: privacy notices must be concise, intelligible, easily accessible, and provide granular details about data processing activities, purposes, and recipients, as highlighted by the WhatsApp €225 million fine. Vague language or overly complex information structures are no longer acceptable.

Strengthen Consent Mechanisms

Valid consent under GDPR must be freely given, specific, informed, and unambiguous. The Amazon €746 million fine and Google's €100 million and €50 million fines underscore that implied consent, pre-ticked boxes, or conditioning service access on consent for non-essential processing are unacceptable. Organizations must implement robust consent management platforms that allow users genuine choice, granular control over data processing activities (especially for cookies and targeted advertising), and the ability to easily withdraw consent at any time. The process for obtaining and managing consent must be meticulously documented and auditable.
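The requirements above (no pre-ticked boxes, granular choices, easy withdrawal, and an auditable trail) can be illustrated with a minimal sketch. The class, its method names, and the purpose labels are hypothetical and not drawn from any regulator's guidance or any particular consent management platform; a production system would also need to record the notice version shown, the collection context, and persistent storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Tuple

# Hypothetical purpose labels; a real deployment would define its own.
OPTIONAL_PURPOSES = ("analytics", "targeted_advertising")


@dataclass
class ConsentRecord:
    user_id: str
    # Every optional purpose starts refused: the equivalent of no pre-ticked boxes.
    choices: Dict[str, bool] = field(
        default_factory=lambda: {p: False for p in OPTIONAL_PURPOSES}
    )
    # Append-only log of (timestamp, purpose, granted) for audit purposes.
    history: List[Tuple[datetime, str, bool]] = field(default_factory=list)

    def set_choice(self, purpose: str, granted: bool) -> None:
        """Record an explicit user decision for one purpose."""
        if purpose not in self.choices:
            raise ValueError(f"unknown purpose: {purpose}")
        self.choices[purpose] = granted
        self.history.append((datetime.now(timezone.utc), purpose, granted))

    def withdraw(self, purpose: str) -> None:
        """Withdrawing is a single call, as easy as granting."""
        self.set_choice(purpose, False)

    def allows(self, purpose: str) -> bool:
        """Processing for a purpose is allowed only after an explicit opt-in."""
        return self.choices.get(purpose, False)


record = ConsentRecord(user_id="user-123")
record.set_choice("targeted_advertising", True)   # user actively opts in
record.withdraw("targeted_advertising")           # and later changes their mind
print(record.allows("targeted_advertising"), len(record.history))
```

Keeping one flag per purpose, rather than a single "accept all" boolean, is what makes the consent granular; the history list is what makes it demonstrable after the fact.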

Ensure Robust Data Transfer Mechanisms

The €1.2 billion fine against Meta for unlawful data transfers to the U.S. serves as a stark reminder of the enduring impact of the Schrems II judgment. Organizations transferring personal data outside the EU/EEA must critically assess the sufficiency of their transfer mechanisms. Reliance on Standard Contractual Clauses (SCCs) requires a thorough transfer impact assessment (TIA) to determine if supplementary measures are necessary to ensure an equivalent level of data protection, particularly against government surveillance in the recipient country. Businesses should stay abreast of new data transfer frameworks, such as the EU-U.S. Data Privacy Framework, and ensure their implementation is legally sound and effectively safeguards data.

Implement Privacy by Design and Default

The GDPR mandates that data protection be built into the design of systems and business practices from the outset, and that the most privacy-protective settings are the default. The Instagram €405 million fine for public-by-default settings for children's accounts and the Facebook €265 million fine for data scraping due to design flaws highlight this obligation. Organizations must conduct Data Protection Impact Assessments (DPIAs) for high-risk processing activities, integrate privacy considerations into every stage of product development, and ensure that default configurations minimize data collection and maximize privacy without requiring user intervention.
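As an illustration of what "private by default" can mean at the code level, the sketch below creates account settings whose initial values are the most protective ones, so that visibility only widens through an explicit user action. The field names, the age threshold, and the rule that minors cannot enable business accounts are assumptions made for this example; they are not taken from the DPC decisions or from any platform's actual implementation.

```python
from dataclasses import dataclass, replace

ADULT_AGE = 18  # assumed threshold for this sketch


@dataclass(frozen=True)
class AccountSettings:
    profile_public: bool
    show_contact_info: bool
    business_account_allowed: bool


def default_settings(age: int) -> AccountSettings:
    """Return the most privacy-protective defaults for a new account.

    Nothing is public until the user chooses otherwise; in this sketch,
    minors additionally cannot switch to a business account.
    """
    return AccountSettings(
        profile_public=False,
        show_contact_info=False,
        business_account_allowed=age >= ADULT_AGE,
    )


def make_profile_public(settings: AccountSettings, user_confirmed: bool) -> AccountSettings:
    """Widen visibility only after an explicit, affirmative user action."""
    if not user_confirmed:
        return settings
    return replace(settings, profile_public=True)


teen = default_settings(age=15)
print(teen)
```

The design choice worth noting is that the protective state is the constructor's output, not an option the user has to find; any less protective state requires a deliberate call.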

Focus on Children's Data Protection

Enforcement actions, particularly against Instagram and TikTok, demonstrate that children's data is a heightened priority for regulators. Organizations whose services are likely to be accessed by children must implement specific safeguards. This includes ensuring age-appropriate design, providing clear and child-friendly privacy information, obtaining valid parental consent where required, and implementing robust age verification mechanisms to prevent underage access or inappropriate data processing. Default settings for children's accounts must be private and secure.

Enhance Data Security and Breach Preparedness

Although the DPC's finding in the €265 million Meta case rested on Article 25, the incident also underscores the importance of implementing appropriate technical and organizational measures to ensure the security of personal data (Article 32 GDPR). This includes robust access controls, encryption, regular security audits, and prompt patching of vulnerabilities. Furthermore, organizations must have comprehensive data breach response plans in place, enabling timely detection and assessment, notification to the supervisory authority within 72 hours of becoming aware of a breach (Article 33 GDPR), and communication to affected data subjects without undue delay where the breach is likely to result in a high risk to them (Article 34 GDPR).
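A breach response plan usually needs to track the supervisory-authority deadline explicitly. Under Article 33(1) GDPR the controller must notify the authority within 72 hours of becoming aware of the breach, where feasible. The helper below is a minimal sketch of that clock; the function names are hypothetical, and determining the moment of "awareness" is a legal judgment the code does not attempt to make.

```python
from datetime import datetime, timedelta, timezone

# Article 33(1) GDPR: notify the supervisory authority within 72 hours
# of becoming aware of the breach, where feasible.
NOTIFICATION_WINDOW = timedelta(hours=72)


def authority_deadline(became_aware_at: datetime) -> datetime:
    """Return the latest notification time for a given awareness timestamp."""
    if became_aware_at.tzinfo is None:
        raise ValueError("use a timezone-aware timestamp to avoid ambiguity")
    return became_aware_at + NOTIFICATION_WINDOW


def hours_remaining(became_aware_at: datetime, now: datetime) -> float:
    """Hours left before the deadline; negative once it has passed."""
    return (authority_deadline(became_aware_at) - now).total_seconds() / 3600


aware = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
print(authority_deadline(aware).isoformat())
```

Rejecting naive timestamps is deliberate: incident teams often span time zones, and an ambiguous timestamp can silently shift the deadline by several hours.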

Cultivate a Culture of Accountability

GDPR emphasizes accountability, requiring organizations to demonstrate compliance with its principles (Article 5(2) GDPR). Recent enforcement trends, including warnings of potential personal liability for executives, highlight the need for robust leadership oversight. Boards and C-suite executives must actively engage in and oversee data protection practices, ensuring sufficient resources are allocated, and compliance is integrated into corporate governance. This involves clear internal policies, regular staff training, and a commitment from the top down to data privacy as a core business value.

Proactive Engagement with DPAs

While enforcement actions can be severe, some DPAs offer "cure periods" for organizations to correct non-compliance issues without immediate fines. Proactive engagement with supervisory authorities, transparent communication during investigations, and demonstrating a genuine commitment to addressing identified issues can potentially mitigate penalties. Organizations should view DPAs not just as enforcers but also as sources of guidance, seeking clarity on complex compliance matters where appropriate.

Conclusion

The landscape of GDPR enforcement is dynamic and increasingly stringent, marked by escalating fines and a broadening scope of regulatory scrutiny. The landmark penalties against major technology companies serve as powerful reminders that no organization, regardless of its size or influence, is exempt from compliance. The consistent focus on fundamental principles—transparency, lawfulness of processing, data minimization, privacy by design, and accountability—underscores that these are not merely legal formalities but core tenets that must be embedded into every aspect of an organization's data handling practices.

The lessons derived from these real-world cases are clear: a reactive approach to GDPR compliance is no longer tenable. Organizations must adopt a proactive, continuous, and holistic strategy that prioritizes robust data governance, meticulous attention to legal bases for processing, secure international data transfers, and a steadfast commitment to protecting vulnerable data subjects, particularly children. The increasing emphasis on executive accountability further highlights that data privacy is a strategic imperative, demanding active oversight from senior leadership. By internalizing these lessons and implementing comprehensive compliance measures, businesses can mitigate significant financial, reputational, and operational risks, fostering trust with their users and navigating the complex regulatory environment with greater confidence.