Unified AI Governance Integrated Impact Assessment

This report argues that the fragmentation of AI governance is a primary strategic risk and that the definitive solution is a single, integrated operational framework where continuous, multi-dimensional impact assessment drives a unified governance process.

6/27/2025 · 50 min read

The rapid proliferation of Artificial Intelligence (AI) presents a dual challenge for modern enterprises and public institutions: how to harness its transformative potential while navigating a fragmented and rapidly evolving landscape of legal requirements, ethical expectations, and strategic risks. The current approach, characterized by siloed governance functions and reactive compliance checks, is no longer tenable. It creates inefficiencies, introduces compliance gaps, and fails to foster the public trust necessary for sustainable innovation. This report argues that the fragmentation of AI governance is a primary strategic risk and that the definitive solution is a single, integrated operational framework where continuous, multi-dimensional impact assessment drives a unified governance process.

This report deconstructs the core concepts of Unified AI Governance (UAG) and the Integrated Impact Assessment (IIA), demonstrating that they are not separate activities but two sides of the same coin. The IIA serves as the proactive, evidence-gathering engine that provides the empirical data needed for the governance framework to make informed, defensible decisions. This symbiotic relationship forms the basis of the proposed integrated lifecycle model, a structured, seven-phase process that embeds impact assessments at critical junctures—from strategic ideation to system decommissioning. This model ensures that ethical, legal, and societal considerations are not afterthoughts but are woven into the fabric of AI development and deployment.

By analyzing the world's most influential regulatory and standards frameworks—including the EU AI Act, the UK's principles-based approach, the NIST AI Risk Management Framework (RMF), and the certifiable ISO/IEC 42001 standard—this report demonstrates that their demands, while diverse, are convergent. They share common goals of risk management, transparency, accountability, and human oversight. A unified process, as architected herein, is not only possible but is the most efficient path to satisfying these multifaceted global requirements simultaneously.

The primary recommendation of this report is for organizational leadership to champion this integrated approach not as a compliance burden but as a strategic enabler. By building a unified framework grounded in continuous impact assessment, organizations can move beyond risk mitigation to actively build trustworthy AI. This fosters the stakeholder confidence, regulatory goodwill, and operational resilience required to lead in the age of artificial intelligence. The framework presented in this document provides a comprehensive and actionable blueprint for achieving this critical objective.

Part I: Deconstructing the Core Concepts

This foundational part of the report establishes a precise and shared understanding of the two central pillars of our framework: Unified AI Governance and Integrated Impact Assessment. By deconstructing these concepts, we lay the groundwork for their synthesis into a single, operational process.

Chapter 1: Defining Unified AI Governance (UAG)

The term "AI governance" is often used loosely, referring to a disparate collection of policies and ethical guidelines. To build a robust operational framework, a more rigorous and functional definition is required. Unified AI Governance (UAG) is not merely a set of rules but a cohesive and integrated system of risk management, ethical oversight, and practical controls designed to steer AI development and deployment toward beneficial and responsible outcomes. It is a strategic imperative, not a reactive compliance function.

The Strategic Imperative of Unification

The core purpose of UAG is to create a strategic framework that proactively balances innovation with risk management, ensuring that every AI deployment aligns with an organization's compliance obligations, security posture, transparency commitments, and ethical standards. IDC defines AI governance as a comprehensive system of laws, policies, frameworks, practices, and processes that enable organizations to manage AI risks while simultaneously driving business value. A critical aspect of this definition is the mandate that governance must be integrated into the core strategy of the organization rather than being treated as a reactive, check-the-box exercise. Organizations that fail to adopt this unified approach face significant consequences, including operational inefficiencies, heightened legal exposure, and severe reputational damage.

The rapid, and sometimes unchecked, adoption of generative AI has amplified these risks. Without a comprehensive, risk-based assessment of their capabilities, organizations risk creating a "cybersecurity house-of-cards," exposing themselves to data leaks, biased model outputs, and significant penalties as governments tighten AI security laws. The strategic necessity of unification is therefore driven by the pain of fragmentation. A recent survey found that integrating fragmented systems is the single greatest challenge for organizations implementing AI governance, cited by 58% of respondents. This operational reality is compelling a market shift toward integrated solutions. IBM, for instance, has launched a platform that combines its watsonx.governance and Guardium AI Security products, creating what it describes as the industry's first software platform to unify AI security and governance functions. This system is designed specifically to manage the challenges of deploying autonomous AI agents at scale while maintaining security and compliance standards. This evolution from abstract principles to tangible, technology-enabled business functions signals that UAG is no longer just a conceptual ideal but an operational necessity.

Pillars of Unified Governance

To structure this strategic approach, models like IDC's Unified AI Governance Model provide a useful architecture built on four key pillars. These pillars represent the core domains that any comprehensive governance framework must address:

Transparency and Explainability: This pillar demands that organizations document AI system designs, data provenance, and decision-making processes. The goal is to make AI systems understandable to stakeholders so they can evaluate how decisions are made. Without transparency, trust is impossible.

Security and Resilience: This involves implementing robust measures to protect against data leaks, adversarial attacks, and other AI-driven threats. It ensures the operational resilience of AI systems, safeguarding them from both internal vulnerabilities and external attacks.

Compliance and Privacy Protection: This pillar focuses on adherence to the complex web of global and regional regulations, such as the EU's General Data Protection Regulation (GDPR), and ensuring that the collection and use of personal data in AI systems are lawful and ethical.

Human-in-the-Loop (HITL) Governance: This principle requires the establishment of clear mechanisms for human oversight, particularly for critical or high-stakes decisions. It ensures that autonomous systems do not operate without a pathway for human intervention and accountability.

Key Governance Actions

Translating these pillars into practice requires a set of specific, actionable components that form the machinery of a UAG framework. An effective framework is not just a policy document; it is a living system built on concrete actions. These core actions include:

Establishing Accountability: Clearly defining roles and responsibilities for AI oversight is paramount. This includes creating a dedicated team or ethics committee with diverse expertise from legal, compliance, data privacy, and technology to oversee AI initiatives and manage risks.

Developing AI Policies and Ethics Codes: Organizations must formulate comprehensive policies that cover the entire AI lifecycle. This includes creating a code of conduct that translates core values like fairness and accountability into actionable principles for developers and users.

Implementing Robust Data Governance: Since AI is fueled by data, strong data governance is the foundation of UAG. This involves establishing data quality standards, validation processes, privacy protection measures, and conducting regular audits for bias and data traceability.

Utilizing Continuous Monitoring: A UAG framework must include systems for the real-time tracking of AI model performance, reliability, and ethical adherence. This allows for the early detection of issues like model drift, data quality degradation, or anomalous behavior before they escalate.

Conducting Regular Assessments: The framework must mandate regular internal assessments and third-party audits, especially for high-risk systems. These conformity assessments ensure that AI systems remain compliant with evolving regulations and internal policies throughout their lifecycle.

In summary, Unified AI Governance is an active, integrated, and technology-supported system. It moves beyond passive policy to create an operational framework that embeds responsibility into the core of an organization's AI strategy. The market's response to the challenges of fragmentation—through the development of unified platforms and control frameworks—indicates that the future of successful AI deployment lies in this holistic and unified approach.

Chapter 2: Defining the Integrated Impact Assessment (IIA)

If Unified AI Governance provides the structure and rules for responsible AI, the Integrated Impact Assessment (IIA) provides the evidence-based, forward-looking analysis that informs those rules. The IIA is a systematic, holistic, and proactive evaluation process that serves as the empirical backbone of any credible governance framework. It is the mechanism through which an organization understands the potential consequences of its AI systems before they materialize.

A Holistic Philosophy of Interconnectedness

At its core, an IIA is a process designed to evaluate the potential economic, social, environmental, and human-centric consequences of a policy, program, or project. It is distinct from traditional, single-focus assessments (like a financial review or a privacy assessment) in its holistic approach. The IIA operates as a "philosophy" that emphasizes the profound interconnectedness of our actions and their consequences, recognizing that an impact in one domain can create unforeseen ripple effects in others. By providing a 360-degree view of potential outcomes, the IIA ensures that a broad spectrum of repercussions is considered, fostering a more inclusive and balanced decision-making process.

This holistic perspective is essential for AI, where the potential for harm is multifaceted and can manifest in subtle ways. An AI system that infringes on human rights by discriminating on grounds of gender, age, or ethnicity is a clear example of where a narrow, purely technical assessment would fail. The IIA forces a broader inquiry, prompting questions not just about whether an AI system works, but whether it is just, fair, and beneficial for all affected stakeholders.

The Multi-Dimensional Scope of an AI IIA

A comprehensive IIA for an AI system must be multi-dimensional, systematically probing for impacts across a wide range of domains. These domains form a checklist of critical considerations for any proposed AI deployment:

Societal and Human Rights: This is arguably the most critical dimension. The assessment must delve into the human-centric impacts, evaluating potential consequences on individual well-being, social cohesion, public safety, and fundamental rights such as privacy, fairness, and equality. It specifically requires considering the protection needs of vulnerable groups, including children, the elderly, and workers, who may be disproportionately affected.

Economic: This domain assesses the financial implications, both positive and negative. It considers factors such as job creation or displacement, economic growth, productivity gains, and potential financial risks or instability arising from the AI system's deployment.

Environmental: With growing awareness of the significant energy consumption of large AI models, this dimension is increasingly vital. The IIA must evaluate the potential ecological impacts, including effects on environmental sustainability, energy usage, and ecosystems.

Legal and Ethical: The assessment must rigorously check for compliance with applicable laws and regulations. Beyond legal compliance, it must evaluate the system's alignment with core ethical principles like fairness, accountability, and transparency, ensuring it does not perpetuate or exacerbate harmful biases.

A Formalized and Structured Process

The concept of an AI impact assessment is rapidly maturing from a generic ethical check into a formalized, auditable process. This evolution is being driven by the development of international standards that provide a clear and structured methodology. The draft international standard ISO/IEC 42005, which details the requirements for an AI System Impact Assessment (AIIA), is a landmark in this formalization. It provides a seven-section structure that serves as a robust template for conducting a comprehensive IIA:

System Information: A clear description of the AI system, its features, its purpose, and its intended and potential unintended uses.

Data Information: A thorough analysis of the data used to train and operate the system, assessing its quality, provenance, completeness, representativeness, and potential for bias.

Algorithm and Model Information: Details on the origin of the algorithms (in-house, third-party, etc.) and the approach taken in their development.

Deployment Environment: A definition of where and how the model will be used, including geographical areas, linguistic considerations, and any constraints in the deployment context.

Interested Parties: A comprehensive identification of all stakeholders who may be affected by the system, both directly and indirectly.

Benefits and Harms Analysis: This is a substantial section requiring a detailed ledger of all potential benefits and harms for each identified stakeholder group across the various impact domains (social, economic, etc.).

System Failures and Misuse Scenarios: An analysis of what could happen if the system fails, as well as the potential for accidental or intentional misuse or abuse of the system.
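The seven sections above lend themselves to a simple completeness check. The following is a minimal sketch, assuming each section is captured as free text; the class name `ImpactAssessment`, its field names, and the `missing_sections` helper are hypothetical illustrations and are not defined by ISO/IEC 42005.

```python
from dataclasses import dataclass, fields


@dataclass
class ImpactAssessment:
    """Hypothetical record with one free-text field per section listed above."""
    system_information: str = ""
    data_information: str = ""
    algorithm_and_model_information: str = ""
    deployment_environment: str = ""
    interested_parties: str = ""
    benefits_and_harms_analysis: str = ""
    failures_and_misuse_scenarios: str = ""

    def missing_sections(self) -> list[str]:
        """Return the names of sections that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Example: an early-stage draft with only two sections filled in.
draft = ImpactAssessment(
    system_information="Resume-screening model for internal hiring.",
    interested_parties="Applicants, recruiters, hiring managers.",
)
print(draft.missing_sections())
```

A governance gate could, for instance, refuse to advance a project while `missing_sections()` is non-empty, although the quality of each section still needs human review.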

Lifecycle Integration: A Continuous Process

Crucially, the IIA is not a one-time, pre-launch checklist. To be effective, it must be a living process that is integrated throughout the entire AI lifecycle. The assessment should be initiated at the earliest stages of decision-making—when an AI project is first conceived—and then be honed, refined, and updated as the system evolves. This lifecycle approach means that impact assessments are performed at multiple points, from design and development through to deployment and eventual decommissioning. This continuous evaluation ensures that the organization remains adaptable to evolving risks, changing regulatory requirements, and shifting societal expectations.

The formalization of the IIA process, exemplified by standards like ISO/IEC 42005, elevates it from a "good practice" to a core operational requirement for demonstrating due diligence. An organization's governance will increasingly be judged by its ability to conduct and document these structured, multi-dimensional assessments. The IIA is therefore not just a tool for ethical reflection; it is a fundamental component of defensible, responsible, and sustainable AI innovation.

Part II: The Global Regulatory and Standards Gauntlet

The development of a unified governance framework does not occur in a vacuum. It must be architected to navigate and satisfy a complex and increasingly demanding landscape of international laws, regulations, and standards. This section provides a critical analysis of the most influential of these external requirements, deconstructing their core tenets to inform the design of our integrated model. We will examine the mandatory legal regime of the EU AI Act, the principles-based approach of the UK, the voluntary risk management process of the US NIST AI RMF, and the certifiable global standard of ISO/IEC 42001.

Chapter 3: The EU AI Act: A Comprehensive, Risk-Based Mandate

Signed into law in June 2024 and in force since 1 August 2024, the European Union's Artificial Intelligence Act is the world's first comprehensive, binding law for AI. It sets a significant global precedent, establishing a detailed framework for AI compliance, risk management, and governance that aims to ensure AI systems are safe, transparent, traceable, non-discriminatory, and overseen by humans. Its influence extends far beyond the EU's borders, creating a de facto global compliance floor for any organization wishing to operate within the vast EU market.

The Risk-Based Approach

The cornerstone of the EU AI Act is its risk-based approach, which categorizes AI systems into four distinct tiers, with regulatory obligations escalating according to the level of risk they pose to users' health, safety, or fundamental rights. This tiered structure is the organizing principle of the entire regulation.

Unacceptable Risk: The Act outright prohibits certain AI practices deemed to pose an unacceptable threat to fundamental rights. This includes systems that engage in cognitive behavioral manipulation of vulnerable groups (e.g., toys that encourage dangerous behavior), social scoring by public authorities, and most forms of real-time remote biometric identification in public spaces. These prohibitions have applied since 2 February 2025.

High-Risk: This is the most heavily regulated category. High-risk systems are permitted but are subject to a stringent set of requirements and a conformity assessment before they can be placed on the market. This category includes AI systems used as safety components in products already regulated by EU law (e.g., medical devices, cars, toys) as well as systems deployed in eight specific critical areas, including: management of critical infrastructure, education and vocational training, employment and worker management, access to essential public and private services (like credit scoring), law enforcement, and migration and border control management.

Limited Risk: These are systems that pose a risk of manipulation or deception, such as chatbots or systems that generate "deepfakes." They are subject to specific transparency obligations. For example, users must be clearly informed that they are interacting with an AI system, and AI-generated content must be labeled as such.

Minimal Risk: The vast majority of AI systems are expected to fall into this category (e.g., AI-enabled video games or spam filters). The Act imposes no new legal obligations on these systems, though providers may voluntarily adhere to codes of conduct.
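The four tiers can be read as an ordered triage: check for prohibited practices first, then high-risk uses, then transparency triggers, and default to minimal risk. The sketch below illustrates that ordering only. The tag names, the three lookup sets, and the `triage` function are hypothetical simplifications; actual classification under the Act requires legal analysis of the full text and its annexes.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative, incomplete tag sets drawn from the examples in the text above.
PROHIBITED_PRACTICES = {"social_scoring", "manipulation_of_vulnerable_groups"}
HIGH_RISK_AREAS = {
    "critical_infrastructure", "education", "employment",
    "essential_services", "law_enforcement", "migration_border_control",
}
TRANSPARENCY_TRIGGERS = {"chatbot", "deepfake_generation"}


def triage(use_case_tags: set[str], is_regulated_safety_component: bool = False) -> RiskTier:
    """Return the strictest tier whose (simplified) criteria the use case matches."""
    if use_case_tags & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if is_regulated_safety_component or use_case_tags & HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if use_case_tags & TRANSPARENCY_TRIGGERS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


# A hiring chatbot matches both a high-risk area and a transparency trigger;
# the stricter tier wins.
print(triage({"employment", "chatbot"}))
```

Checking the tiers from strictest to most lenient keeps the result conservative when a system matches more than one category.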

Obligations for High-Risk Systems: A Shared Responsibility

The Act creates a chain of accountability by imposing distinct but interconnected obligations on the different actors in the AI value chain, primarily the "providers" (who develop the AI system) and the "deployers" (who use the AI system).

Obligations for Providers of High-Risk AI Systems: Providers face a comprehensive list of duties that must be fulfilled throughout the system's lifecycle to ensure it is safe and compliant before and after it reaches the market. Key obligations include:

Risk Management System: Establish, implement, document, and maintain a continuous, iterative risk management system for the entire lifecycle of the AI system. This involves identifying, evaluating, and mitigating known and reasonably foreseeable risks.

Data and Data Governance: Ensure that the data sets used for training, validation, and testing are of high quality. They must be relevant, sufficiently representative, and, to the best extent possible, free of errors and complete, and providers must implement data governance practices to manage and mitigate potential biases.

Technical Documentation and Record-Keeping: Maintain extensive technical documentation that demonstrates the system's compliance with all requirements, and keep it available for at least 10 years after the system is placed on the market. Automatically generated event logs must also be retained to ensure traceability, although the Act sets a shorter minimum retention period for logs (at least six months).

Transparency and Provision of Information: Design the system to be transparent and provide deployers with clear, comprehensive instructions for use, including information on the system's capabilities, limitations, and intended purpose.

Human Oversight: Design and develop the high-risk AI system in such a way that it can be effectively overseen by humans. This includes implementing appropriate human-machine interface measures.

Accuracy, Robustness, and Cybersecurity: Ensure the system achieves an appropriate level of accuracy, robustness, and cybersecurity, and is resilient to errors, faults, or inconsistencies.

Conformity Assessment and Registration: Before placing the system on the market, providers must ensure it undergoes a conformity assessment, draw up an EU declaration of conformity, affix the CE marking to indicate compliance, and register the system in a public EU database.

Obligations for Deployers of High-Risk AI Systems: Deployers, particularly those using AI systems for professional activities, also have critical responsibilities to ensure the safe and proper use of the technology. Key obligations include:

Use According to Instructions: Take appropriate technical and organizational measures to use the high-risk AI system in accordance with the provider's instructions for use.

Human Oversight: Assign human oversight to natural persons who have the necessary competence, training, and authority to intervene if necessary.

Data Quality: To the extent that the deployer exercises control over input data, they must ensure it is relevant and sufficiently representative for the system's intended purpose.

Monitoring and Reporting: Monitor the operation of the system and, if a serious incident or risk is identified, suspend its use and inform the provider and relevant national authorities without delay.

Fundamental Rights Impact Assessment (FRIA): Deployers that are public bodies or private entities providing public services, as well as those using systems for credit scoring or life and health insurance pricing, must conduct a FRIA to evaluate the system's impact on fundamental rights before putting it into use.

Regulation of General-Purpose AI (GPAI)

Recognizing the rapid rise of powerful foundation models, the Act includes specific rules for General-Purpose AI (GPAI) models, such as the models underlying ChatGPT. While not classified as high-risk themselves, GPAI models are subject to transparency requirements. Providers must draw up technical documentation, comply with EU copyright law, and publish detailed summaries of the content used for training. Furthermore, GPAI models identified as having "systemic risk," based on criteria such as the computational power used for training (e.g., greater than 10^25 FLOPs), face more stringent obligations, including conducting model evaluations, assessing and mitigating systemic risks, and reporting serious incidents to the EU AI Office.
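To give a feel for the scale of the 10^25 FLOPs criterion, the sketch below compares a rough training-compute estimate against that threshold. The estimate uses the common rule of thumb of about 6 floating-point operations per parameter per training token for dense transformer models. That approximation is an assumption introduced here for illustration and is not a method prescribed by the Act. The function names and example model sizes are hypothetical.

```python
# Threshold cited in the text for presuming systemic risk.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rough estimate: ~6 FLOPs per parameter per token (rule of thumb, an assumption)."""
    return 6.0 * n_parameters * n_training_tokens


def exceeds_threshold(n_parameters: float, n_training_tokens: float) -> bool:
    """True if the rough estimate is above the 10^25 FLOPs criterion."""
    return estimate_training_flops(n_parameters, n_training_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models: 70 billion parameters on 2 trillion tokens,
# and 1 trillion parameters on 10 trillion tokens.
print(exceeds_threshold(70e9, 2e12))   # estimate ~8.4e23, below the threshold
print(exceeds_threshold(1e12, 1e13))   # estimate ~6e25, above the threshold
```

An estimate near the threshold would call for a more careful accounting of actual training compute rather than reliance on this approximation.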

The EU AI Act's comprehensive and extraterritorial nature (it applies to any provider or deployer whose AI system is placed on, or whose output is used in, the EU market, regardless of their location) effectively sets a global standard. The detailed, lifecycle-based obligations for both providers and deployers create a shared accountability chain that any multinational organization must be prepared to join. Consequently, any "unified" governance framework must, by default, be architected to satisfy the EU's high-risk criteria, as this will provide a robust foundation for meeting the requirements of most other jurisdictions. The explicit mandate for a FRIA for certain deployers also directly embeds the principle of integrated impact assessment into law, reinforcing the central thesis of this report.

Chapter 4: The UK's Pro-Innovation Stance: A Principles-Based Alternative

In stark contrast to the EU's comprehensive, horizontal legislation, the United Kingdom has pursued a distinct path for AI regulation. The UK's approach is deliberately "pro-innovation" and "light-touch," aiming to foster economic growth and establish the country as a global AI leader by avoiding what it considers to be burdensome, prescriptive laws. Instead of creating new, AI-specific legislation, the UK government has opted for a flexible, principles-based, and decentralized model that relies on adapting existing legal frameworks and empowering existing regulators.

A Framework of Five Cross-Sectoral Principles

The foundation of the UK's AI governance model was laid out in its March 2023 AI Regulation White Paper. This document eschewed a new AI law in favor of establishing five cross-sectoral, non-statutory principles intended to guide the responsible development and use of AI across the economy. These principles are:

Safety, security, and robustness: AI systems should function in a secure and reliable way throughout their lifecycle, with risks being continually identified, assessed, and managed.

Appropriate transparency and explainability: AI systems should be appropriately transparent and explainable, with the level of transparency depending on the context and potential impact of the system.

Fairness: AI systems should not discriminate against individuals or create unfair market outcomes.

Accountability and governance: Clear lines of accountability for AI systems must be established across the AI lifecycle.

Contestability and redress: There must be clear routes for individuals to contest harmful outcomes or decisions generated by AI.

These principles, while comprehensive in scope, are intentionally high-level, designed to be adaptable to different contexts rather than imposing rigid, one-size-fits-all rules.

Decentralized Enforcement by Existing Regulators

A defining feature of the UK model is its rejection of a new, centralized AI regulatory body. Instead, the government has delegated the responsibility for AI oversight to existing sectoral regulators, such as the Information Commissioner's Office (ICO) for data protection, the Financial Conduct Authority (FCA) for financial services, and the Competition and Markets Authority (CMA) for market competition. These regulators are tasked with interpreting the five high-level principles and issuing sector-specific guidance on how they apply within their respective domains. This approach is intended to leverage the deep domain expertise of existing bodies and allow for a more tailored and context-aware application of the principles.

An Evolving and Potentially Unstable Landscape

While the UK government remains committed to its non-statutory approach, this position is creating significant regulatory uncertainty and is facing growing pressure. The lack of legally binding rules has been criticized for creating ambiguity for businesses and failing to provide sufficient protection for citizens. This has led to legislative initiatives from outside the government. For instance, the Artificial Intelligence (Regulation) Bill, a Private Member's Bill reintroduced in the House of Lords in March 2025, proposes the creation of a statutory AI Authority and mandates several governance structures, including mandatory AI impact assessments. While Private Members' Bills often struggle to become law without government support, this bill's reintroduction reflects a growing momentum toward more formal regulation and a potential future policy shift.

To support its current approach, the government has focused on providing practical, non-binding guidance. A key example is the AI Playbook, a resource developed for UK government departments and public sector organizations. It provides accessible explanations of AI technologies, sample use cases, and corporate guidance on topics such as bias, privacy, data protection, and cybersecurity, aiming to build capacity and promote responsible AI use within the public sector.

This unique strategy creates a "compliance paradox" for organizations. On the surface, the UK's framework appears less burdensome than the EU's prescriptive regime. However, this "light-touch" is not a "no-touch" approach. Penalties for AI-related misconduct are enforced through existing, powerful legal frameworks. For example, a violation of the "fairness" principle that involves personal data could be pursued under the UK General Data Protection Regulation (UK GDPR), which carries potential fines of up to £17.5 million or 4% of global annual turnover, whichever is higher. This forces companies into the legally ambiguous position of having to interpret how a broad principle like "fairness" or "transparency" maps onto the complex requirements of existing data protection, equality, and consumer rights laws.
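The fine ceiling mentioned above is the higher of the fixed amount and the turnover-based amount, so exposure grows with company size. A minimal worked sketch follows, assuming turnover is expressed in pounds sterling; the function name and the example turnover figures are hypothetical.

```python
def max_uk_gdpr_fine_gbp(global_annual_turnover_gbp: float) -> float:
    """Upper bound of the fine: the greater of GBP 17.5 million or 4% of turnover."""
    return max(17_500_000.0, 0.04 * global_annual_turnover_gbp)


# Hypothetical companies: GBP 100 million and GBP 1 billion in global annual turnover.
print(max_uk_gdpr_fine_gbp(100_000_000))    # the fixed GBP 17.5 million ceiling applies
print(max_uk_gdpr_fine_gbp(1_000_000_000))  # the 4% ceiling applies (about GBP 40 million)
```

The crossover sits at GBP 437.5 million in turnover, above which the 4% figure becomes the relevant ceiling.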

For a unified governance framework, this has a critical implication. The framework must be robust enough not only to meet the explicit, detailed rules of a jurisdiction like the EU but also to generate the evidence and documentation needed to build a defensible case that it is adhering to the broad, interpretive principles of the UK. This elevates the importance of the Integrated Impact Assessment, as its detailed, documented analysis provides the necessary justification for the governance choices made. Furthermore, any UK-based company that offers its AI services to European users must still comply with the full force of the EU AI Act, meaning that adherence to the UK's principles alone is insufficient for any business with a European footprint.

Chapter 5: The NIST AI Risk Management Framework (RMF): A Voluntary Path to Trustworthiness

While the EU has focused on creating binding law and the UK on high-level principles, the United States has taken a different route, led by the National Institute of Standards and Technology (NIST). The NIST AI Risk Management Framework (AI RMF), released in January 2023, is a voluntary resource designed to provide organizations with a structured, practical methodology for managing the risks associated with AI. Though not legally binding, its influence is significant, and it is widely regarded as the most detailed operational blueprint for how to implement AI risk management in practice.

A Framework for Fostering Trustworthy AI

The stated purpose of the NIST AI RMF is to equip organizations with approaches that increase the trustworthiness of AI systems and to foster the responsible design, development, deployment, and use of AI over time. It is intended for use by any organization or individual involved in the AI lifecycle, from private companies and government agencies to research institutions.

The framework is grounded in the promotion of seven key characteristics of trustworthy AI. These characteristics serve as the goals that the risk management process aims to achieve:

Valid and Reliable: Systems should perform accurately and as intended.

Safe: Systems should not endanger human life, health, property, or the environment.

Secure and Resilient: Systems should be protected from security threats and be able to withstand and recover from adverse events.

Accountable and Transparent: There should be clear mechanisms and documentation for the system's decisions and outcomes.

Explainable and Interpretable: Stakeholders should be able to understand the system's decision-making process to an appropriate degree.

Privacy-Enhanced: Systems should respect privacy and protect personal data.

Fair – with harmful bias managed: Systems should be designed and used in a way that mitigates harmful bias and promotes equity.

The Four Core Functions: An Operational Cycle

The heart of the NIST AI RMF is its "Core," which provides a structured, iterative process for managing AI risks through four key functions. This cycle is not linear but is meant to be performed continuously throughout the AI lifecycle.

GOVERN: This function is foundational and cross-cutting. It involves cultivating a culture of risk management across the organization. Key activities include establishing policies, processes, and accountability structures for AI risk management; ensuring these are aligned with legal and ethical principles; and providing training to empower personnel. The GOVERN function ensures that the entire organization is prepared to manage AI risks consistently.

MAP: This function is about establishing the context and identifying the risks related to that specific context. It involves understanding an AI system's intended purpose, its capabilities and limitations, the stakeholders it will affect, and the potential benefits and harms it could create. The MAP function provides the situational awareness necessary to frame the risks properly.

MEASURE: This function focuses on the analysis and assessment of the risks identified in the MAP function. It employs a mix of quantitative and qualitative tools and methodologies to track, benchmark, and monitor AI risks and their impacts. Key activities include rigorous software testing, performance assessments against benchmarks, and tracking metrics related to the trustworthy AI characteristics.

MANAGE: This function involves acting on the risks that have been mapped and measured. Based on the analysis, risks are prioritized, and resources are allocated to treat them. Risk treatment can include mitigating the risk (e.g., by implementing a control), transferring it, avoiding it, or accepting it. This function also includes developing plans for responding to and recovering from AI-related incidents.
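As a rough illustration of how the MEASURE and MANAGE functions might meet in practice, the sketch below scores risks and assigns one of the treatment options named above. The likelihood-times-severity score, the 1 to 5 scales, and the `tolerance` parameter are assumptions made for this example; the NIST AI RMF does not prescribe a scoring formula.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Treatment(Enum):
    # The four treatment options named in the MANAGE description.
    MITIGATE = "mitigate"
    TRANSFER = "transfer"
    AVOID = "avoid"
    ACCEPT = "accept"


@dataclass
class Risk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    severity: int    # hypothetical scale: 1 (negligible) to 5 (critical)
    treatment: Optional[Treatment] = None

    @property
    def score(self) -> int:
        # Assumed scoring rule: likelihood multiplied by severity.
        return self.likelihood * self.severity


def prioritize(risks: List[Risk]) -> List[Risk]:
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


def assign_treatment(risk: Risk, tolerance: int) -> Risk:
    """Accept risks at or below the tolerance; mark the rest for mitigation."""
    if risk.score <= tolerance:
        risk.treatment = Treatment.ACCEPT
    else:
        risk.treatment = Treatment.MITIGATE
    return risk
```

In a real program the choice between mitigating, transferring, avoiding, or accepting a risk would be a governance decision rather than a threshold rule; the function above only shows where that decision is recorded.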

Adaptability Through Profiles

A key feature of the NIST AI RMF is its flexibility. It is designed to be adapted to different contexts through the use of "AI RMF Profiles." A Profile is an implementation of the RMF's functions, categories, and subcategories that is tailored to a specific setting, such as a particular industry sector (e.g., healthcare, finance), a type of AI technology (e.g., generative AI, facial recognition), or a specific use case. This allows organizations to apply the framework in a way that is relevant to their unique requirements, risk tolerance, and resources.

The NIST AI RMF should not be viewed as a competitor to other regulatory frameworks but rather as a powerful implementation guide. While prescriptive regimes like the EU AI Act and certifiable standards like ISO/IEC 42001 define what an organization must achieve (e.g., "establish a risk management system"), the NIST AI RMF provides a detailed, practical methodology for how to achieve it. For example, the EU AI Act's Article 9 requirement for a risk management system can be directly operationalized by following the MAP, MEASURE, and MANAGE functions of the NIST framework. Similarly, the GOVERN function provides a clear structure for establishing the overall organizational culture and policies required by both the EU AI Act and ISO/IEC 42001. Therefore, a well-designed unified framework should adopt the NIST AI RMF's four-function cycle as its core operational engine, using it as the process to fulfill the substantive requirements of other global mandates.

Chapter 6: ISO/IEC 42001: Codifying AI Management into a Global Standard

While regulations create legal obligations and frameworks like NIST's provide guidance, the international standards landscape offers a crucial third element: a mechanism for verification and certification. The publication of ISO/IEC 42001 in December 2023 marks a pivotal moment in the maturation of AI governance. It is the world's first international, auditable standard for an AI Management System (AIMS), providing organizations with a clear, globally recognized benchmark for responsible AI practices.

A Certifiable Standard for Trust

The most significant feature of ISO/IEC 42001 is that it is a certifiable standard. Unlike voluntary guidance, an organization can be formally audited by an accredited third party and, if successful, receive a certification demonstrating its conformance with the standard's requirements. This process provides independent, external validation of an organization's AI governance efforts. This certification is a powerful tool for building trust with customers, partners, and regulators, and it is poised to become a critical differentiator in the marketplace. Leading technology companies like Microsoft are already pursuing ISO/IEC 42001 certification for their flagship AI products, such as Microsoft 365 Copilot, to provide customers with this tangible assurance of responsible development and risk management.

The AI Management System (AIMS)

The central requirement of ISO/IEC 42001 is for an organization to establish, implement, maintain, and continually improve an AI Management System (AIMS). An AIMS is a structured framework of policies, objectives, and processes designed to manage the risks and opportunities associated with AI in a responsible manner. It provides a systematic approach to governing AI projects, models, and data practices across the organization.

The standard mandates several key requirements for an AIMS, which align closely with the principles and functions of other major frameworks:

AI Risk Management: A core component is the requirement to identify, assess, and mitigate risks associated with AI systems, including technical risks as well as ethical and societal risks like bias, lack of accountability, and data protection failures.

AI System Impact Assessment: The standard explicitly requires organizations to conduct assessments of the potential impacts of their AI systems on individuals, groups, and society. This is not an optional extra but a mandatory part of the management system, solidifying the role of the IIA as a central governance tool.

System Lifecycle Management: The AIMS must apply to the entire lifecycle of an AI system, from its initial conception and design through to its development, deployment, operation, and eventual decommissioning.

Ethical Principles: The standard is built on a foundation of ethical AI, encouraging organizations to embed principles of transparency, fairness, and accountability into their AI development and deployment processes.

Stakeholder Engagement: It promotes the involvement of a wide range of stakeholders—including compliance teams, AI developers, risk management professionals, and affected communities—in decision-making processes.

A Structure for Continuous Improvement: Plan-Do-Check-Act (PDCA)

ISO/IEC 42001 follows the classic Plan-Do-Check-Act (PDCA) high-level structure common to other ISO management system standards like ISO 9001 (Quality) and ISO/IEC 27001 (Information Security). This provides a familiar and proven methodology for implementing the AIMS and driving continuous improvement. Of the standard's ten clauses, the requirement clauses (4 through 10) map to this cycle:

Plan (Clauses 4, 5, 6): This phase involves understanding the organizational context, demonstrating leadership commitment, and planning the AIMS. This includes defining the scope, establishing AI objectives, and planning actions to address risks and opportunities.

Do (Clauses 7, 8): This is the implementation phase. It requires providing the necessary resources, support, and communication, as well as executing the operational processes for the AIMS. This is where the AI risk and impact assessments are conducted.

Check (Clause 9): This phase focuses on performance evaluation. It mandates monitoring, measuring, analyzing, and evaluating the effectiveness of the AIMS through activities like internal audits and management reviews.

Act (Clause 10): This final phase is about improvement. It requires the organization to address any non-conformities through corrective actions and to continually enhance the suitability, adequacy, and effectiveness of the AIMS.

The emergence of a certifiable international standard like ISO/IEC 42001 fundamentally changes the strategic calculus of AI governance. It transforms what was previously an internal policy matter into a verifiable and marketable asset. While regulations like the EU AI Act create the obligation to be responsible and frameworks like NIST provide the guidance on how to be responsible, ISO/IEC 42001 provides the mechanism to prove it. For many organizations, particularly those in high-stakes or business-to-business sectors, achieving this certification will likely become a commercial necessity. Therefore, a primary objective of any unified governance program should be to align with the requirements of ISO/IEC 42001, as it offers the most credible and globally recognized form of external validation for an organization's commitment to trustworthy AI.

Part III: A Unified Framework for Governance and Impact Assessment

Having deconstructed the core concepts and analyzed the external regulatory and standards landscape, this section synthesizes these elements into a single, cohesive, and efficient operational framework. The central thesis is that a truly unified system is achieved when the Integrated Impact Assessment is not merely a component of governance but is the primary engine that drives an evidence-based, proactive, and continuous governance cycle.

Chapter 7: The Symbiotic Relationship: How Impact Assessments Power Governance

Traditional governance models are often reactive, responding to incidents after they occur or focusing on backward-looking compliance checklists. A modern, effective AI governance framework must be proactive, anticipatory, and evidence-based. This shift is powered by establishing a symbiotic, cyclical relationship between the Integrated Impact Assessment (IIA) and the Unified AI Governance (UAG) framework. The IIA generates the foundational evidence, and the UAG provides the structure to act on that evidence.

The IIA as the Foundational Input for Governance

At their core, all major governance frameworks—from the EU AI Act to NIST's RMF—require organizations to systematically identify, assess, manage, and mitigate risks. The IIA is the structured process that generates the specific, contextualized evidence necessary to perform these fundamental governance functions. An impact assessment is not a parallel activity to risk management; it is the primary tool for conducting it. By systematically exploring potential harms across multiple dimensions—societal, ethical, legal, and economic—the IIA uncovers the very risks of bias, privacy infringement, security threats, and unfair outcomes that the governance framework is designed to address.

This relationship is becoming deeply embedded in practice. A growing number of organizations are integrating AI impact assessments directly into their existing enterprise risk management (ERM) processes. The outputs of an IIA—a documented analysis of potential harms and benefits—feed directly into the organization's broader risk register, allowing AI-specific risks to be managed alongside other strategic business risks. This integration prevents AI governance from becoming a siloed, technical-only exercise and ensures that its risks are given appropriate weight at the enterprise level.

Enabling Proactive Governance and Ethical Innovation

The integration of the IIA transforms governance from a reactive to a proactive discipline. A traditional governance approach might wait for a model to exhibit bias in production and then react with a corrective action. An IIA-driven approach, however, anticipates the potential for bias by requiring a thorough analysis of training data representativeness and fairness metrics before the model is ever deployed. This forces a forward-looking perspective, compelling teams to ask "what could go wrong?" at the earliest stages of the AI lifecycle, rather than "what went wrong?" after damage has been done.
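To make the idea of a pre-deployment fairness check more concrete, the following sketch computes one common metric, the demographic parity difference (the gap between the highest and lowest rates of positive predictions across groups). This is only one of many possible fairness metrics, and the function names and input layout are assumptions made for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())
```

An IIA could record the resulting value alongside an agreed threshold, so that the go/no-go discussion rests on a documented measurement rather than an impression. Which metric and threshold are appropriate depends on the use case and is itself a governance decision.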

This proactive stance is also the key to fostering genuine ethical innovation. By systematically identifying not only risks but also potential benefits, the IIA provides decision-makers with a balanced and evidence-based ledger to inform their go/no-go decisions on AI projects. This structured analysis moves the conversation beyond a simple focus on risk avoidance. It encourages a more nuanced discussion about trade-offs and value creation. This process enables organizations to pursue innovation responsibly, with clear guidelines and ethical safeguards embedded directly into the design process, rather than treating ethics as a restrictive constraint applied after the fact.

A Continuous Cycle of Assessment and Adaptation

The relationship between IIA and governance is not a one-way street or a linear process. It is a dynamic, symbiotic cycle that creates a continuous learning loop for the organization. The process unfolds as follows:

Initial Assessment Informs Governance: An initial IIA is conducted during the ideation or design phase of an AI project. It identifies potential risks, harms, benefits, and affected stakeholders.

Governance Acts on Assessment: The UAG framework takes this initial assessment as its primary input. It uses the identified risks to define the necessary controls, set policies, establish human oversight procedures, and ultimately make an informed decision about whether and how to proceed with deployment.

Governance Mandates Monitoring: Once a system is deployed, the UAG framework mandates continuous monitoring of its performance, usage, and real-world impacts.

Monitoring Triggers Re-assessment: This continuous monitoring will inevitably detect events that require a re-evaluation of the initial assessment. These "triggers" can include technical issues like model drift, the emergence of new and unanticipated uses of the system, reports of harm from users, or significant changes in the regulatory environment.

Updated Assessment Refines Governance: The triggered re-assessment generates new evidence and a revised understanding of the system's impacts. This updated IIA then feeds back into the UAG framework, which may in turn lead to the refinement of controls, the updating of policies, or even the decision to decommission the system.

This cyclical process ensures that the organization's understanding of its AI systems is not static. It creates an adaptive governance system that can evolve alongside the technology it governs. In this model, the IIA should not be viewed as a one-off report that is filed away, but as a "living document" that is continuously updated throughout the AI lifecycle, powered by the monitoring mechanisms of the governance framework.
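The monitoring-to-re-assessment step of this cycle can be sketched as a simple trigger check. The trigger categories mirror those listed above; the `MonitoringSnapshot` fields, the `drift_score` statistic, and the default threshold are hypothetical and would be defined by each organization's governance framework.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MonitoringSnapshot:
    drift_score: float                      # hypothetical drift statistic
    new_use_cases: List[str] = field(default_factory=list)
    harm_reports: int = 0
    regulatory_change: bool = False


def reassessment_triggers(snapshot, drift_threshold=0.2):
    """Return the reasons, if any, that call for an updated IIA."""
    reasons = []
    if snapshot.drift_score > drift_threshold:
        reasons.append("model drift")
    if snapshot.new_use_cases:
        reasons.append("unanticipated use")
    if snapshot.harm_reports > 0:
        reasons.append("reported harm")
    if snapshot.regulatory_change:
        reasons.append("regulatory change")
    return reasons
```

An empty list means the current IIA stands; any non-empty result would route the system back to the assessment step, with the listed reasons recorded in the updated IIA.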

Chapter 8: Architecting the Integrated Process: A Lifecycle Approach

To translate the symbiotic relationship between impact assessment and governance into practice, a structured operational process is required. This chapter architects a practical, step-by-step model that embeds the IIA into the key stages of the AI lifecycle. This integrated process ensures that governance is not a separate gatekeeping function but an intrinsic part of how AI is conceived, developed, and managed. The model draws heavily on the logic of emerging integrated frameworks that sequence international standards into a cohesive workflow.

The proposed integrated lifecycle model consists of seven distinct phases, each with specific governance actions and IIA triggers.

Phase 1: Strategic Alignment & Oversight (Pre-Project)

Description: This foundational phase occurs before any specific AI project is initiated. It involves establishing the overarching governance culture and structure for the entire organization.

Key Actions:

Establish Governance Body: Create a cross-functional AI Governance Board or Committee, comprising members from legal, ethics, compliance, data science, IT, and key business units. This body is responsible for setting the AI strategy and overseeing the entire governance framework.

Define Policies and Principles: The board establishes the organization's core AI ethics policies, code of conduct, and risk tolerance levels, aligning them with corporate values and strategic objectives. This directly corresponds to the Leadership and Planning clauses of ISO/IEC 42001 and the GOVERN function of the NIST AI RMF.

Regulatory Scoping: The organization identifies the high-level regulatory obligations that will apply to its operations, such as determining its potential exposure under the EU AI Act's risk tiers.

IIA Trigger: The primary trigger in this phase is the formulation of a new strategic initiative that involves the potential use of AI technology.

Phase 2: Scoping & Initial Impact Assessment (Ideation)

Description: This phase begins when a specific AI use case or project is proposed. The goal is to conduct a preliminary evaluation to determine feasibility and make an initial go/no-go decision.

Key Actions:

Conduct Preliminary IIA: A lightweight, initial IIA is performed to scope the project. This includes a high-level contextual analysis (defining the problem and intended purpose), initial stakeholder mapping, and a preliminary classification of the system's potential risk level according to frameworks like the EU AI Act.

Go/No-Go Decision: The results of this initial assessment are presented to the governance board. Based on the potential benefits versus the initial view of risks and harms, a decision is made on whether the project is worth pursuing further.

IIA Trigger: The proposal of any new AI use case, application, or system.

Phase 3: Governance-by-Design & Full Impact Assessment (Design & Procurement)

Description: Once a project receives initial approval, it moves into the detailed design or procurement phase. Here, a comprehensive IIA is conducted to deeply inform the system's architecture and requirements.

Key Actions:

Conduct Full IIA: A full, detailed IIA is executed, following a structured methodology such as the one outlined in ISO/IEC 42005. This involves a deep dive into data sources, algorithmic choices, potential harms and benefits for all stakeholders, and failure scenarios.

Integrate Findings into Design: The findings of the IIA are not just documented; they are translated into concrete design specifications. This is "governance-by-design." For example, if the IIA identifies a risk of bias, the design specifications will mandate the use of specific bias detection tools, diverse training data, and fairness metrics.

Inform Procurement: If the AI system is being procured from a third party, the IIA findings are used to define the vendor selection criteria and contractual requirements. The procurement process must demand transparency from vendors regarding their own data governance, model development, and risk management practices.

IIA Trigger: The project's approval to move from ideation into the formal design or procurement phase.

Phase 4: Development & Validation (Build & Test)

Description: This phase involves the actual building and testing of the AI system, guided by the risks and requirements identified in the full IIA.

Key Actions:

Apply Risk Treatments: The development team implements the risk mitigation strategies defined in the IIA. This can include technical controls, procedural safeguards, or changes to the user interface to guide responsible use.

Validation and Verification: The system undergoes rigorous testing to validate that it meets the requirements set out in the design phase. This should include not only performance testing but also adversarial testing and red teaming to probe for vulnerabilities and unintended behaviors that may not have been foreseen in the initial assessment.

IIA Trigger: Any significant changes to the system's design during development, or the discovery of new, unanticipated risks during testing, must trigger a re-assessment and update of the IIA.

Phase 5: Deployment & Continuous Monitoring (Operations)

Description: The validated AI system is deployed into a production environment. The governance focus now shifts from pre-deployment assessment to post-deployment oversight.

Key Actions:

Deploy with Safeguards: The system is deployed with real-time monitoring capabilities and clear fallback controls in case of failure.

Continuous Monitoring: The governance framework mandates the continuous tracking of key metrics, including model performance, data drift, usage patterns, and any reported incidents or user complaints. This aligns with the MEASURE function of the NIST RMF.

Establish Feedback Channels: Formal mechanisms are established to allow users and other affected stakeholders to report issues, appeal decisions, and provide feedback on the system's impact.

IIA Trigger: The IIA is subject to periodic review. A new assessment is triggered if monitoring detects a significant performance deviation, a serious incident, an emerging pattern of misuse, or a change in the external regulatory context.
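One way a "significant performance deviation" in input data might be quantified is the Population Stability Index (PSI), which compares a baseline distribution with the distribution seen in production. The sketch below is a minimal version that assumes both distributions have already been binned into proportions; the choice of PSI, the binning, and any alert threshold are assumptions for illustration, not requirements of any framework discussed here.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of proportions."""
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)  # guard against log(0) and division by zero
        a = max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi
```

Identical distributions give a PSI of zero, and larger values indicate a larger shift. Practitioners often use rule-of-thumb cut-offs to decide when a shift warrants review, but the appropriate threshold should be set and documented by the governance body.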

Phase 6: Incident Response & Improvement (Maintenance)

Description: This phase deals with the inevitable reality that incidents will occur. A mature governance framework includes a well-defined process for managing them.

Key Actions:

Implement Incident Response Protocol: A clear, pre-defined protocol is activated when an incident is detected. This protocol outlines steps for incident classification, containment, stakeholder communication, and, where necessary, reporting to regulatory authorities.

Root Cause Analysis and Improvement: After an incident is resolved, a root cause analysis is conducted. The lessons learned are fed back into the governance framework, leading to updates in policies, controls, training programs, and the risk models used in future IIAs. This embodies the Act part of the ISO PDCA cycle.

IIA Trigger: The occurrence of any serious incident or near-miss.

Phase 7: Decommissioning (Sunset)

Description: The final phase of the lifecycle involves the responsible retirement of the AI system.

Key Actions:

Conduct Final Impact Assessment: A final IIA is conducted to manage the risks associated with decommissioning the system. This includes planning for secure data retention or deletion in compliance with regulations, managing the transition for users who relied on the system, and archiving necessary documentation.

IIA Trigger: The formal decision to retire or replace the AI system.

This integrated lifecycle model provides a comprehensive, end-to-end process that ensures governance and impact assessment are inextricably linked, driving a cycle of continuous learning and adaptation.
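The seven phases and their IIA triggers can be captured in a small data structure, which may help when wiring the model into project tooling. The sketch below is one possible encoding: the trigger wording is condensed from the phase descriptions above, while the `can_advance` gate and its rule (an up-to-date IIA is required to move forward) are assumptions made for this example. It also treats the phases as a simple sequence, which glosses over the loops back to re-assessment that the model allows.

```python
from enum import IntEnum


class Phase(IntEnum):
    STRATEGIC_ALIGNMENT = 1
    SCOPING = 2
    GOVERNANCE_BY_DESIGN = 3
    DEVELOPMENT = 4
    DEPLOYMENT = 5
    INCIDENT_RESPONSE = 6
    DECOMMISSIONING = 7


# Condensed from the "IIA Trigger" entry of each phase.
IIA_TRIGGERS = {
    Phase.STRATEGIC_ALIGNMENT: "new strategic initiative involving AI",
    Phase.SCOPING: "new AI use case proposed",
    Phase.GOVERNANCE_BY_DESIGN: "approval to enter design or procurement",
    Phase.DEVELOPMENT: "significant design change or new risk found in testing",
    Phase.DEPLOYMENT: "periodic review, deviation, incident, misuse, or regulatory change",
    Phase.INCIDENT_RESPONSE: "serious incident or near-miss",
    Phase.DECOMMISSIONING: "decision to retire or replace the system",
}


def can_advance(current: Phase, iia_is_current: bool) -> bool:
    """Hypothetical gate: advance only with an up-to-date IIA."""
    return iia_is_current and current < Phase.DECOMMISSIONING
```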

Chapter 9: The Unified Control Framework (UCF) as a Blueprint

While the integrated lifecycle model in the previous chapter provides the "what" and "when" of unified governance, the Unified Control Framework (UCF) offers a powerful conceptual blueprint for the "how." Proposed by researchers seeking to address the inefficiencies of siloed governance, the UCF is a comprehensive approach that integrates risk management and regulatory compliance through a unified, mappable set of controls. It provides the missing "Rosetta Stone" for AI governance, translating the abstract language of risks and the legalistic language of regulations into the concrete, operational language of controls.

The Three Pillars of the UCF

The UCF is built on the understanding that effective AI governance must simultaneously address three distinct but related concerns: managing organizational and societal risks, ensuring regulatory compliance, and enabling practical implementation. It achieves this by connecting three core components through a series of bidirectional mappings:

A Synthesized Risk Taxonomy: This component provides a structured and comprehensive catalog of potential AI-related risks. The taxonomy is designed to be MECE (Mutually Exclusive, Collectively Exhaustive), ensuring that all possibilities are covered without overlap, which simplifies integration with other processes. The framework identifies 15 high-level risk types (e.g., Fairness & Bias, Security, Societal Impact, Malicious Use) which are broken down into approximately 50 specific and concrete risk scenarios (e.g., "Toxic content generation," "Data poisoning," "Algorithmic discrimination"). This granular taxonomy gives organizations a precise vocabulary to identify and discuss risks, forming the basis for the MAP and MEASURE functions of the governance process.

A Policy Requirement Library: This component deconstructs complex legal and standards documents into a library of structured, discrete policy requirements. For example, a specific article from the EU AI Act requiring documentation for high-risk systems is translated into a clear, actionable policy goal within the library. This process makes dense regulatory text machine-readable and directly mappable to internal controls, bridging the gap between legal obligations and operational reality.

A Unified Control Library: This is the operational heart of the UCF. It contains a parsimonious set of 42 well-defined, implementable governance actions or processes (i.e., "controls"). Each control is designed to be multi-purpose, simultaneously addressing multiple risk scenarios and satisfying multiple policy requirements. Examples of controls include "Establish AI system documentation framework," "Implement adversarial testing and red team program," and "Establish user rights and recourse framework". Each control in the library is accompanied by detailed implementation guidance, including a description of what successful implementation entails, common tools used, and potential pain points.

The Power of Bidirectional Mapping

The true innovation of the UCF lies in the intricate, bidirectional mappings that connect these three pillars. This structure creates a highly efficient and flexible system that allows an organization to approach governance from multiple starting points:

A Risk-Led Approach: An organization can start by identifying a relevant risk scenario from the taxonomy (e.g., as part of an IIA). For example, it might identify "Harmful Content" as a key risk for a new generative AI tool. By consulting the UCF's mappings, the organization can immediately see the specific set of controls from the library that are designed to mitigate this risk. The mappings also show which regulatory requirements are addressed by implementing these controls, providing an instant view of compliance coverage.

A Compliance-Led Approach: Alternatively, an organization can start with a specific regulatory obligation from the policy library (e.g., a requirement from the Colorado AI Act or the EU AI Act). The mappings will then point to the exact controls needed to satisfy that legal requirement. As a secondary benefit, the organization can also see all the additional risk scenarios that are mitigated by implementing that same set of controls, thus enhancing its risk management posture as a byproduct of its compliance activities.

This "many-to-many" relationship—where one control can solve multiple problems (risks and policy requirements) and one problem can be addressed by a specific set of controls—is the key to the framework's efficiency. It eliminates the redundant work that occurs when risk teams and legal teams operate in silos, for example, by implementing separate processes for mitigating bias (a risk) and for complying with the EU AI Act's fairness requirements (a policy). The UCF shows that a single, well-designed control, such as "Implement fairness testing and bias mitigation," can achieve both goals simultaneously.
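The many-to-many structure described above can be sketched with two dictionaries keyed by control, plus their inverses. The control and risk names are borrowed from the examples in this chapter, but the specific links between them are illustrative assumptions and are not the UCF's published mappings.

```python
# Illustrative mappings only; these are NOT the UCF's published mappings.
CONTROL_TO_RISKS = {
    "Implement fairness testing and bias mitigation": {"Algorithmic discrimination"},
    "Implement adversarial testing and red team program": {
        "Data poisoning",
        "Toxic content generation",
    },
    "Establish AI system documentation framework": {
        "Algorithmic discrimination",
        "Data poisoning",
    },
}
CONTROL_TO_POLICIES = {
    "Implement fairness testing and bias mitigation": {"EU AI Act: fairness requirements"},
    "Implement adversarial testing and red team program": set(),
    "Establish AI system documentation framework": {
        "EU AI Act: documentation for high-risk systems"
    },
}


def invert(mapping):
    """Turn control -> items into item -> controls for reverse lookups."""
    inverted = {}
    for control, items in mapping.items():
        for item in items:
            inverted.setdefault(item, set()).add(control)
    return inverted


RISK_TO_CONTROLS = invert(CONTROL_TO_RISKS)
POLICY_TO_CONTROLS = invert(CONTROL_TO_POLICIES)


def risk_led(risk):
    """Controls that mitigate a risk, plus the policies those controls satisfy."""
    controls = RISK_TO_CONTROLS.get(risk, set())
    policies = set().union(*(CONTROL_TO_POLICIES[c] for c in controls))
    return controls, policies


def compliance_led(policy):
    """Controls that satisfy a policy, plus the risks those controls mitigate."""
    controls = POLICY_TO_CONTROLS.get(policy, set())
    risks = set().union(*(CONTROL_TO_RISKS[c] for c in controls))
    return controls, risks
```

Calling `risk_led("Algorithmic discrimination")` returns both the relevant controls and the compliance coverage they bring, while `compliance_led(...)` runs the same lookup from the regulatory side. Keeping the forward mapping as the single source of truth and deriving the inverse avoids the two directions drifting apart.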

The UCF's architecture provides the conceptual blueprint for the integrated technology platforms that are now emerging in the market. It offers a logical structure for how an organization should organize its internal governance efforts: first, by defining its unique risk landscape (using the taxonomy as a guide); second, by parsing its specific regulatory and policy requirements; and third, by building or adopting a library of concrete controls that efficiently and verifiably connects the two. This model makes governance scalable, adaptable, and, most importantly, operational.

Chapter 10: Meeting Multifaceted Demands: Mapping the Unified Process to Global Regulations

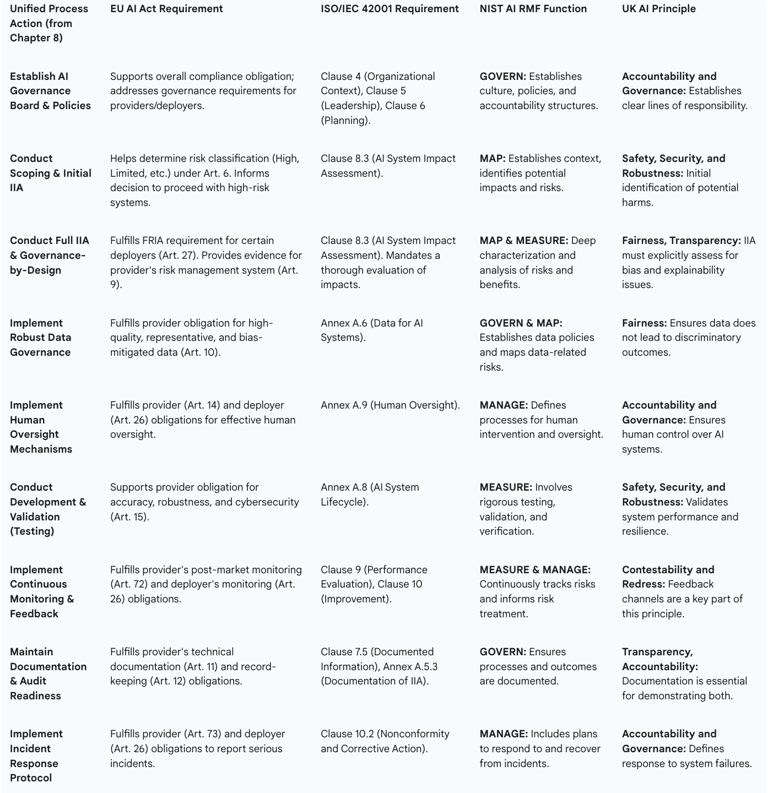

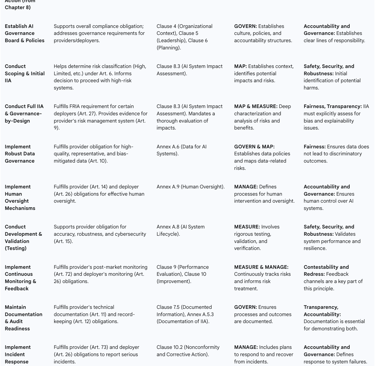

The ultimate test of a unified governance framework is its ability to satisfy the complex and varied demands of the global regulatory and standards landscape. The integrated lifecycle model proposed in Chapter 8, when powered by the logic of the Unified Control Framework from Chapter 9, is designed to do precisely this. This chapter explicitly demonstrates how the key actions within the unified process align with and fulfill the specific requirements of the EU AI Act, ISO/IEC 42001, the NIST AI RMF, and the UK's principles-based approach.

The following table serves as a compliance crosswalk, providing a structured, auditable mapping that shows how a single, cohesive process can efficiently meet multiple, often overlapping, international mandates. This mapping provides clear evidence that a unified approach is not only more efficient but also more comprehensive than a siloed, jurisdiction-by-jurisdiction compliance strategy.

As the table demonstrates, the actions within the proposed unified process are not designed in a vacuum. Each step is a direct response to the convergent demands of the world's leading governance frameworks. For instance, the action of conducting a "Full IIA" is not merely a best practice; it is a single, cohesive activity that simultaneously helps fulfill the mandatory Fundamental Rights Impact Assessment (FRIA) requirement for certain deployers under the EU AI Act, the mandatory AI impact assessment (AIIA) requirement of ISO/IEC 42001, and the core activities of the MAP and MEASURE functions of the NIST AI RMF. Similarly, establishing robust "Human Oversight Mechanisms" is a direct response to explicit articles in the EU AI Act, a specific control in the ISO/IEC 42001 Annex, a key part of the NIST MANAGE function, and a central tenet of the UK's accountability principle.
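For teams that want to keep such a crosswalk in a machine-checkable form, the sketch below encodes only the two example rows described in the preceding paragraph. The dictionary layout and the `coverage_gaps` helper are assumptions made for illustration; an empty cell here means only that the paragraph does not name a mapping, not that the framework has no relevant requirement.

```python
FRAMEWORKS = ("EU AI Act", "ISO/IEC 42001", "NIST AI RMF", "UK principles")

# Two rows only, taken from the examples discussed in the text.
CROSSWALK = {
    "Full IIA": {
        "EU AI Act": "FRIA requirement for certain deployers",
        "ISO/IEC 42001": "AI impact assessment requirement",
        "NIST AI RMF": "MAP and MEASURE functions",
    },
    "Human Oversight Mechanisms": {
        "EU AI Act": "human oversight articles",
        "ISO/IEC 42001": "Annex control",
        "NIST AI RMF": "MANAGE function",
        "UK principles": "accountability principle",
    },
}


def coverage_gaps(crosswalk, frameworks=FRAMEWORKS):
    """List (action, framework) pairs with no mapped requirement."""
    return [
        (action, framework)
        for action, requirements in crosswalk.items()
        for framework in frameworks
        if framework not in requirements
    ]
```

Running `coverage_gaps(CROSSWALK)` over a complete crosswalk would highlight any cell that still needs a documented mapping before an audit.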

This mapping reveals the profound inefficiency of a fragmented approach. An organization attempting to comply with these frameworks separately would find itself creating four different processes for risk management, four different sets of documentation, and four different approaches to impact assessment. The unified model consolidates these overlapping requirements into a single, streamlined workflow. By adopting the integrated lifecycle process, an organization can be confident that it is building a governance system that is not only internally coherent and efficient but also externally defensible and compliant with the highest global standards.

Part IV: Practical Implementation and Strategic Considerations

Architecting a unified framework is a critical first step, but its value is only realized through successful implementation. This final part of the report grounds the proposed framework in reality. It explores practical examples through case studies, provides a frank assessment of the challenges and limitations that organizations will face, and uses a high-profile governance failure as a cautionary tale to underscore the stakes. The objective is to provide not just a blueprint, but a realistic guide for the journey from theory to practice.

Chapter 11: From Theory to Practice: Case Studies in AI Governance

Examining how different organizations are grappling with the challenges of AI governance provides invaluable practical insights. These case studies illustrate the principles of unified governance and impact assessment in action, showcasing a variety of approaches across the public and private sectors.

Municipal Governance: Pioneering Transparency and Oversight in Barcelona and Amsterdam

Cities are on the front lines of AI deployment, using algorithms for everything from traffic management to social services. As a result, some have become pioneers in AI governance. The cities of Barcelona and Amsterdam provide leading models for public sector governance, emphasizing transparency, stakeholder engagement, and structured oversight.

Key Mechanisms: A hallmark of their approach is the creation of public algorithm registers. These are publicly accessible databases that list the AI systems in use by the municipality, explain their purpose, and classify them by risk level. This commitment to transparency is a foundational step in building public trust.

Integrated Approach: Both cities have developed comprehensive AI strategies that go beyond simple registers. Barcelona's strategy includes mandatory algorithmic impact assessments for high-risk systems, the creation of a multidisciplinary advisory council on AI ethics, and the inclusion of digital rights clauses in procurement contracts. Amsterdam has developed an "Algorithms Playbook" that provides a lifecycle approach to governance, including tools for bias analysis, human rights impact assessments, and standard contractual clauses for AI vendors. These initiatives demonstrate a mature, integrated approach where impact assessment, procurement, and oversight are woven together.
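To make the register idea concrete, the following is a minimal sketch of how a register entry and a simple oversight check might be represented. It is an illustration only: the field names, the three risk levels, and the example systems are assumptions made for this sketch and do not describe the actual registers or data models used by Barcelona or Amsterdam.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    # Hypothetical three-tier scale; real registers may classify differently.
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class RegisterEntry:
    """One public record in a hypothetical algorithm register."""
    name: str
    purpose: str
    risk_level: RiskLevel
    impact_assessment_done: bool = False


def missing_assessments(entries):
    """Return names of high-risk systems with no impact assessment on file."""
    return [
        e.name
        for e in entries
        if e.risk_level is RiskLevel.HIGH and not e.impact_assessment_done
    ]


# Invented example entries, for illustration only.
register = [
    RegisterEntry("traffic-signal-optimizer", "Adjust signal timing", RiskLevel.LOW),
    RegisterEntry("benefit-eligibility-screen", "Prioritise case reviews", RiskLevel.HIGH),
    RegisterEntry("housing-fraud-triage", "Flag applications for audit", RiskLevel.HIGH, True),
]

print(missing_assessments(register))
```

Keeping the risk level and the assessment status in the same record is the design point: the register then serves both as a transparency tool for the public and as a gate the oversight body can query before a high-risk system goes live.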

Higher Education: A Stakeholder-Centric Model at the University of Technology Sydney (UTS)

The University of Technology Sydney (UTS) offers a compelling case study in how to build a governance framework from the ground up through deep stakeholder engagement. Recognizing that trust was paramount, UTS did not impose a top-down policy. Instead, it undertook detailed consultation processes with both students and staff to co-design the principles that would govern the use of AI and analytics at the university.

Key Insight: This collaborative process was not just about gathering feedback; it was about building shared ownership. By listening to and incorporating the concerns and aspirations of its community, UTS was able to create an AI Operations Policy and Procedure that had broad buy-in. This stakeholder-centric approach improved the quality of the policies and, crucially, increased the confidence of senior leadership in adopting and using AI systems responsibly.

Governance Structure: The process culminated in the creation of a formal AI Operations Board, an interdisciplinary body responsible for overseeing the use of AI at UTS and ensuring the implementation of the co-designed policies. This demonstrates the importance of coupling policy with a clear, empowered governance structure.

Enterprise Governance: Turning Compliance into a Competitive Advantage

In the private sector, mature AI governance is evolving from a cost center focused on compliance to a strategic function that can deliver a competitive edge. Case studies from the e-commerce and banking sectors illustrate this shift.

E-commerce Data Lineage: A global e-commerce brand, facing the challenge of tracking customer data across a complex web of AI models for recommendations and payment processing, implemented an end-to-end data lineage solution. This gave them full visibility into how data was collected, used, and transformed by their AI systems. This was not just a compliance exercise to meet GDPR and CCPA requirements; it also built greater customer trust and improved internal efficiency by providing a clear map of their data ecosystem.

Banking and Bias Mitigation: A leading bank, wary of the reputational and legal risks of biased AI in credit decisions, deployed a proactive governance strategy. They integrated real-time AI monitoring tools to audit AI decisions for fairness and flag potential bias indicators during the model training phase, before the models went into production. By tracking data lineage and transformations, they could pinpoint how data influenced outcomes and fix problems early. This approach turned fairness and compliance from a reactive burden into a proactive, trust-building feature of their products.
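As an illustration of what a pre-production fairness check of this kind could look like, the sketch below computes a disparate impact ratio: the lowest group approval rate divided by the highest. The bank's actual tooling and metrics are not described in the case study, so the function names, the sample data, and the 0.8 threshold (the widely used "four-fifths" heuristic) are all assumptions chosen for the example.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its share of approved applications.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favoured
    return min(rates.values()) / highest


# Hypothetical validation-set decisions: (group, approved)
sample = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)

ratio = disparate_impact_ratio(sample)
THRESHOLD = 0.8  # four-fifths heuristic; an assumption, not a legal test
needs_review = ratio < THRESHOLD
print(f"ratio={ratio:.3f} needs_review={needs_review}")
```

In this invented sample, group A is approved 80% of the time and group B 50% of the time, so the ratio falls below the threshold and the model would be routed to human review rather than released. A single ratio is a screening signal, not a verdict; it is most useful when paired with the lineage tracing described above to find out where the disparity originates.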

Public Services: Diverse Applications Highlighting a Common Need for Governance

The breadth of AI adoption in public services globally underscores the universal need for robust governance frameworks. Examples include:

Singapore's GovTech Chatbots: AI-powered virtual assistants deployed across more than 70 government websites to handle citizen inquiries, reducing call center workloads by 50% and providing 24/7 multilingual support.

Japan's Earthquake Prediction System: A deep learning system that analyzes seismic data in real time, increasing earthquake detection accuracy by 70% and enabling faster, more reliable early warnings.

EU's iBorderCtrl: A pilot AI-driven border security system using facial recognition and lie-detection tools to screen travelers, aiming to enhance security while reducing wait times.

While these applications deliver significant public benefits, they also touch on sensitive areas of privacy, fairness, and safety, highlighting that as AI deployment scales, so too must the sophistication of the governance frameworks that oversee them.

Chapter 12: Navigating the Pitfalls: Challenges and Limitations of Integrated Frameworks

While a unified governance framework is the ideal, its implementation is fraught with practical challenges and inherent limitations. Acknowledging these pitfalls is crucial for any organization embarking on this journey. The primary barriers are not a lack of principles or theoretical models, but rather organizational inertia, technical complexity, and the operational difficulty of overcoming fragmentation.

Organizational and Resource Challenges

The most significant hurdles to implementing unified governance are often internal and organizational in nature.

Fragmented Systems and Manual Processes: The top challenge, cited by 58% of organizations, is the struggle to unify disparate AI models, data sources, and governance tools that are scattered across different departments. Many governance functions, such as documentation and risk tracking, remain highly manual, making compliance and oversight inefficient, costly, and prone to error.

Resource Constraints and Expertise Gaps: Effective governance requires investment. Organizations often suffer from insufficient budgets and a lack of skilled personnel, particularly AI governance specialists who can bridge the gap between legal, ethical, and technical domains. This knowledge gap can exist at all levels, from IT and security teams who lack expertise in AI-specific risks to business units who underestimate the consequences of unchecked AI adoption.

Lack of Clear Ownership and Leadership Buy-in: Without a clear mandate from the top, AI governance initiatives often falter. Uncertainty about which department or individual "owns" AI governance leads to accountability gaps and inefficiencies. A lack of leadership buy-in, cited by 18% of organizations, can starve governance programs of the resources and authority they need to succeed.

Technical and Data-Related Challenges

Beyond organizational issues, the nature of AI technology itself presents formidable challenges.

Data Quality and Bias: The "garbage in, garbage out" principle is amplified in AI. The effectiveness, fairness, and reliability of any AI system are fundamentally limited by the quality of the data it is trained on. Biased, incomplete, or unrepresentative data will inevitably lead to biased and unreliable systems, a risk that is difficult and expensive to fully mitigate.

The "Black Box" Problem: Many advanced AI models, particularly deep learning systems, operate as "black boxes." Their internal decision-making logic is so complex that it can be impossible for humans to fully understand or explain how they arrive at a specific output. This opacity is a direct barrier to achieving transparency and accountability, hindering trust among users and regulators.

Unpredictability and Emergent Risks: AI systems can be non-deterministic, meaning they can produce unpredictable outputs and behave in ways that were not anticipated by their designers. This makes it impossible for any impact assessment, no matter how thorough, to foresee every possible outcome, misuse, or emergent risk, especially as systems learn and adapt in real-world settings.

Inherent Limitations of Frameworks

Finally, it is essential to recognize that no governance framework or software tool is a panacea. They have inherent limitations that require humility and continuous vigilance.

Cannot Replace Human Judgment: Governance tools can provide data, automate checks, and offer recommendations, but they cannot replace human judgment, especially in ethically ambiguous or socially complex situations. The ultimate responsibility for making difficult trade-off decisions remains with humans.

Cannot Guarantee Complete Accuracy or Security: While frameworks can significantly mitigate risks, they cannot eliminate them entirely. No tool can guarantee 100% accuracy or provide complete protection against all novel cyber threats or data breaches.

Risk of "Ethics Washing": If not implemented with genuine commitment, governance frameworks can become a superficial, check-the-box exercise. Vague policies or unenforced controls can create a false sense of security or be used for "ethics washing"—projecting an image of responsibility without making meaningful changes to underlying practices.

The critical takeaway from these challenges is that the success of a unified governance program is as much about change management, technology investment, and cultural development as it is about writing policies. The implementation gap, not a knowledge gap, is the true challenge. Therefore, any project to establish a unified framework must be treated as a major digital transformation initiative, requiring strong executive sponsorship, cross-functional collaboration, and dedicated resources to overcome the deep-seated challenge of organizational fragmentation.

Chapter 13: A Critical Perspective: Lessons from the Dutch Childcare Benefits Scandal

To fully grasp the severe, real-world consequences of failed AI governance, one need look no further than the Dutch childcare benefits scandal (toeslagenaffaire). This high-profile disaster serves as a powerful and tragic cautionary tale, illustrating how the challenges discussed in the previous chapter can converge to create a catastrophic outcome. It is the ultimate argument for why a truly integrated and human-centric governance framework is not a "nice-to-have" but a fundamental necessity for any organization, public or private, deploying high-stakes AI.

A Cascade of Governance Failures

The core of the scandal involved the Dutch tax authority using a self-learning, automated risk-profiling system to detect potential fraud in childcare benefit applications. The algorithm falsely flagged tens of thousands of families, a disproportionate number of whom had minority or immigrant backgrounds, as high-risk. This led to devastating consequences, with families being forced into crippling debt to pay back benefits, losing their homes, and in over a thousand cases, having their children taken into state care. The political fallout ultimately led to the resignation of the entire Dutch government in 2021.

This was not a simple technical glitch; it was a profound, multi-faceted governance breakdown at every level:

Lack of Transparency (The Black Box): The algorithm used was a "black box," making it nearly impossible for officials, let alone the affected citizens, to understand or challenge its reasoning. This opacity prevented any meaningful scrutiny of its flawed logic.

Failure of Human Oversight (Automation Bias): Officials exhibited a severe case of "automation bias," blindly trusting the system's outputs without applying critical judgment or meaningful review. The system's "risk" classification was treated as fact, allowing the automated process to proceed without effective human intervention.

Biased Data and Discriminatory Proxies: The model was trained on historical data and used discriminatory criteria, such as holding dual nationality, as a proxy for risk. This embedded systemic bias directly into the decision-making process, violating fundamental principles of non-discrimination.

Perverse Incentives: The tax authority was operating under an efficiency mandate that created a "perverse incentive" to maximize the amount of funds seized from alleged fraudsters to demonstrate a return on investment. This focus on financial recovery overrode any considerations of fairness or accuracy.

Critical Knowledge Gaps: The decision-makers and officials overseeing the system lacked the necessary AI literacy to understand its limitations, question its validity, or appreciate the potential for catastrophic harm.
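The discriminatory-proxy failure described above is the kind of issue a basic pre-deployment control can at least surface. The sketch below screens a model's input features against a list of protected attributes and a list of suspected proxies. It is a deliberately simple illustration: both lists and all feature names are hypothetical, and name matching cannot detect statistical proxies hidden in otherwise innocuous variables, so a check like this complements an impact assessment rather than replacing one.

```python
# Hypothetical lists; a real control would be defined with legal and domain experts.
PROTECTED_ATTRIBUTES = {"nationality", "dual_nationality", "ethnicity", "religion"}
KNOWN_PROXIES = {"postcode", "birth_country"}


def screen_features(features):
    """Split feature names into blocked, needs-review, and allowed lists."""
    result = {"blocked": [], "review": [], "allowed": []}
    for name in features:
        key = name.strip().lower()
        if key in PROTECTED_ATTRIBUTES:
            result["blocked"].append(name)  # must not be used as a risk input
        elif key in KNOWN_PROXIES:
            result["review"].append(name)  # requires human sign-off
        else:
            result["allowed"].append(name)
    return result


# Invented feature list for a risk model.
report = screen_features(["income", "dual_nationality", "postcode", "household_size"])
print(report)
```

The value of such a gate depends on who is empowered to act on it: a "blocked" or "review" result only helps if a person with the authority and the AI literacy to question the model actually sees it before deployment.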

The Inadequacy of Partial Solutions

The Dutch scandal also serves as a stark warning against placing faith in partial or superficial governance mechanisms. In the wake of the scandal, there has been a push for tools like public algorithm registers. However, critics argue that such registers, while a step toward transparency, are often "unbearably light." They are voluntary and frequently do not include the most sensitive, high-risk, and potentially harmful systems used in areas like welfare and law enforcement, which are often shielded from public view. The core issue is often a pre-existing political or organizational will to deploy AI for purposes of efficiency or control, with governance treated as a secondary concern rather than a prerequisite for asking the most fundamental question: should this system be deployed at all?

The Dutch childcare benefits scandal is the definitive argument for an integrated impact assessment and governance framework. It vividly demonstrates that a failure in any single area—data governance, transparency, human oversight, ethical principles, or impact assessment—can cause the entire system to collapse with devastating human consequences. The risks are not siloed; they are interconnected and they cascade. A biased dataset leads to a discriminatory model; a lack of transparency prevents its detection; a failure of human oversight allows it to cause harm; and an inadequate impact assessment fails to anticipate the potential for disaster in the first place.