GDPR Compliance in Emerging Technologies (VR & AR)

Explore how virtual reality, augmented reality, and other emerging technologies must adapt to GDPR requirements, the unique privacy challenges they present, and practical strategies for maintaining compliance while innovating.

Virtual and Augmented Reality (VR/AR) technologies are rapidly transforming various sectors, from entertainment to healthcare, by offering profoundly immersive experiences. This innovation, however, introduces unprecedented data privacy challenges under the General Data Protection Regulation (GDPR). Unlike traditional digital platforms, VR/AR systems engage in continuous, real-time collection of highly sensitive biometric and behavioral data, leading to the creation of comprehensive user profiles that can inadvertently reveal real-world identities and intimate personal details. This report details the specific data types collected, analyzes the implications for each core GDPR principle, and highlights key compliance hurdles such as complex consent management, the difficulty of true data anonymization, ambiguous controller-processor relationships in multi-party ecosystems, and the complexities of cross-border data transfers. It also addresses the amplified risks posed by AI integration and the operational challenges in fulfilling data subject rights. The analysis underscores a persistent gap between technological advancement and regulatory frameworks. To navigate this intricate landscape, a proactive, privacy-by-design approach is not merely a best practice but a critical imperative. Recommendations include implementing robust technical safeguards, fostering user-centric privacy controls, and advocating for harmonized international regulatory frameworks to ensure compliance, mitigate risks, and build enduring user trust in the evolving immersive digital future.

2. Introduction to GDPR in Emerging Technologies

Overview of VR and AR Technologies and their Unique Data Collection Capabilities

Virtual Reality (VR) and Augmented Reality (AR) represent a significant technological leap, reshaping industries from gaming and entertainment to healthcare and education through their capacity to create deeply immersive digital experiences. These technologies differ fundamentally from conventional digital ecosystems, such as websites or mobile applications, primarily in their reliance on continuous, real-time data collection. Rather than merely capturing discrete user interactions like clicks or typed inputs, VR/AR systems meticulously track granular user behaviors, including gaze direction, physical movement within virtual spaces, and even nuanced emotional responses. This pervasive tracking capability has led to the characterization of VR environments as a "digital panopticon," where every gesture, movement, and gaze can be recorded, analyzed, and potentially monetized. The scope of these immersive technologies also extends to Mixed Reality (MR) devices, exemplified by Microsoft HoloLens and HTC Vive Pro, which seamlessly integrate real-world imagery with digital data. These devices hold particular promise in specialized fields such as medical applications, where precise data overlay and interaction are critical for diagnosis, therapy, and rehabilitation.

The Foundational Role of GDPR in Regulating Personal Data

The General Data Protection Regulation (GDPR) stands as the cornerstone of the European Union's data protection legislation, establishing a comprehensive legal framework for safeguarding personal data and privacy. Its provisions directly apply to the processing of personal data by virtual world providers, encompassing not only the operations of the platform itself but also data collected via connecting devices, as well as any other entities that utilize personal data within these immersive environments. The GDPR's overarching objective is to empower individuals by granting them greater control over how their personal data is collected, used, and shared.

The regulation's core principles serve as foundational rules for responsible data handling, aiming to protect customer data and mitigate legal risks for businesses. Non-compliance with these principles can result in severe penalties, including fines of up to €20 million or 4% of total worldwide annual turnover for the preceding financial year, whichever is higher. A critical aspect of GDPR is its extraterritorial application: its mandates govern the processing of personal data of individuals located in the EU, regardless of where the data controller or processor is established, when that processing relates to offering them goods or services or to monitoring their behavior. This broad reach ensures that an entity handling the data of people in the EU, even within a virtual world, falls under the purview of this robust regulatory framework.
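The fine ceiling is a simple maximum of two quantities. As a minimal sketch (the function and constant names are illustrative, and actual fines are set case by case by supervisory authorities, typically below this ceiling):

```python
FLAT_CEILING_EUR = 20_000_000   # fixed ceiling of the higher fine tier
TURNOVER_PERCENT = 4            # share of worldwide annual turnover


def max_fine_ceiling_eur(annual_turnover_eur: int) -> int:
    """Return the upper bound of a fine: the greater of EUR 20M or 4% of turnover."""
    turnover_based = annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FLAT_CEILING_EUR, turnover_based)


# A studio with EUR 50M turnover: 4% is EUR 2M, so the EUR 20M ceiling applies.
print(max_fine_ceiling_eur(50_000_000))
# A platform with EUR 2B turnover: 4% is EUR 80M, which exceeds EUR 20M.
print(max_fine_ceiling_eur(2_000_000_000))
```

The two ceilings coincide at a turnover of €500 million; above that, the turnover-based figure is the binding one.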

The Evolving Data Landscape and Regulatory Challenges

The emergence of VR/AR technologies has fundamentally altered the paradigm of data collection, creating a complex interplay between technological capability and regulatory oversight. This shift is characterized by two significant phenomena:

The "Data Volume and Granularity Escalation" Effect: VR/AR technologies do not merely increase the quantity of data collected; they profoundly escalate its granularity and sensitivity. These systems continuously capture highly intimate physiological and behavioral data, such as eye movements, facial expressions, heart rate, gait, and even inferred emotions. This goes far beyond the discrete interactions typical of traditional digital platforms, moving into a realm where continuous, deep-seated data flows are the norm. The pervasive nature of this data, often likened to a "digital panopticon", inherently amplifies the potential for privacy invasion, misuse, and unauthorized profiling to an extent previously unimaginable in conventional digital environments. This escalation necessitates a fundamental re-evaluation of how existing GDPR principles, largely conceived before the widespread adoption of such immersive technologies, can be effectively applied. A superficial, "check-the-box" compliance approach is insufficient; instead, a deeper, more nuanced understanding of these unique data flows, their potential inferences, and their cumulative impact on individual rights is required. The sheer volume and continuous nature of VR/AR data make the practical operationalization of traditional consent mechanisms and data subject rights significantly more challenging.

The "Regulatory Lag" Phenomenon: A persistent delay exists between the rapid pace of technological innovation in VR/AR and the legislative and interpretive efforts to regulate it. Multiple sources indicate that regulators are actively "catching up" to these advancements, acknowledging that existing legal systems were primarily "built for the physical world". This inherent temporal gap means that while current legal frameworks are broadly applicable, they may not yet fully address the unique nuances, unforeseen challenges, and complex data processing scenarios inherent in dynamic, immersive environments. This regulatory lag creates significant legal uncertainty for businesses developing and operating within the VR/AR space. Consequently, adopting a proactive "privacy-by-design" approach becomes not just a recommended practice but a critical necessity to anticipate future regulatory clarity and mitigate risks. This situation also suggests a heightened risk of regulatory scrutiny and potential enforcement actions as authorities continue to develop a more profound understanding of the technology's implications and seek to apply existing laws to novel, previously unaddressed scenarios.

3. The Data Landscape of VR and AR

Types of Personal Data Collected

Virtual and augmented reality technologies are characterized by "expansive data collection," gathering "unprecedented amounts of user data". The breadth and depth of this data far exceed those of traditional digital platforms, presenting unique privacy considerations.

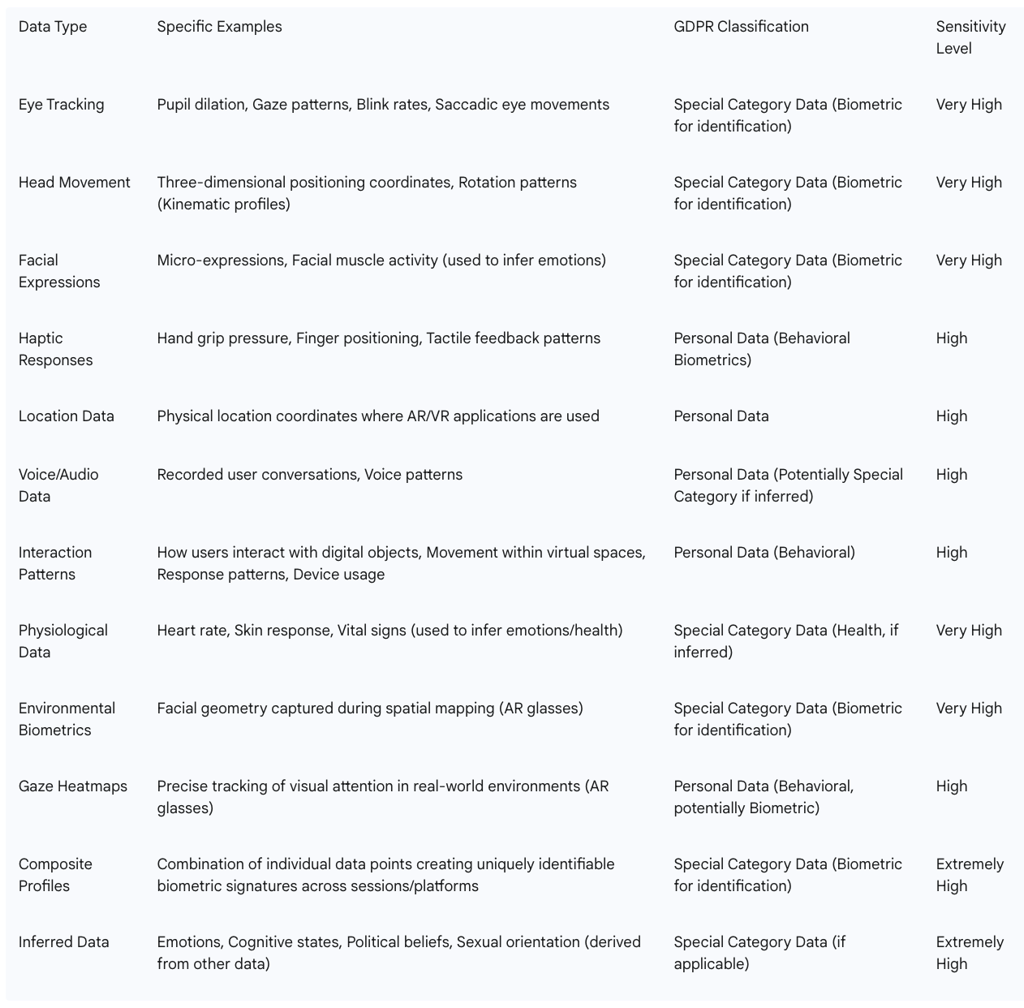

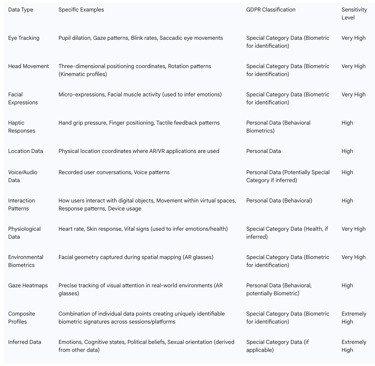

Key categories of personal data collected include:

Biometric Data: This is a particularly sensitive category and forms a core part of VR/AR data collection. It encompasses:

Eye Tracking Data: Continuous capture of pupil dilation, gaze patterns, blink rates, and saccadic eye movements, which can form unique identification patterns.

Head Movement Signatures: Three-dimensional positioning coordinates and rotation patterns that reveal distinctive kinematic profiles.

Facial Expressions/Muscle Activity: Micro-expressions captured through integrated cameras or inferred from head movement patterns, used to infer emotions and reactions.

Haptic Responses: Data derived from hand grip pressure, finger positioning, and tactile feedback patterns, which contribute to behavioral biometrics.

Physiological Data: This includes heart rate, skin response, and vital signs, often collected implicitly.

For AR glasses, additional layers involve Environmental Biometrics (e.g., facial geometry captured during spatial mapping) and Gaze Heatmaps (precise tracking of visual attention in real-world environments).

Behavioral Data: This broad category covers how users interact with digital objects, their movement within virtual spaces, and their response patterns. It also includes voice patterns and general movement tracking.

Location Data: Records the physical location where users interact with AR/VR applications.

Voice and Audio Data: Many VR platforms record user conversations, which carries significant risks of inadvertent data collection beyond the intended purpose.

Composite Profiles: A critical concern arises from the aggregation of these individual data points. While each data point might seem innocuous in isolation, their combination leads to the creation of comprehensive, uniquely identifiable "biometric profiles" or "biometric signatures". GDPR acknowledges that datasets become biometric if they result from "specific technical processing" that enables unique identification, even if individual components appear anonymous. This composite approach facilitates re-identification across various sessions, platforms, and even physical locations.
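The composite effect can be illustrated by counting how many users share a given combination of features. The sketch below uses a hand-made, hypothetical sample in which each user is reduced to three coarse buckets (the feature names, bucket labels, and the helper `smallest_group` are all assumptions for illustration, not real telemetry):

```python
from collections import Counter

# Hypothetical sample: six users, each described by three coarse feature buckets.
users = {
    "u1": {"gaze": "A", "head": "X", "grip": "lo"},
    "u2": {"gaze": "A", "head": "Y", "grip": "lo"},
    "u3": {"gaze": "B", "head": "X", "grip": "lo"},
    "u4": {"gaze": "B", "head": "Y", "grip": "hi"},
    "u5": {"gaze": "A", "head": "X", "grip": "hi"},
    "u6": {"gaze": "B", "head": "Y", "grip": "lo"},
}


def smallest_group(profiles: dict, features: list) -> int:
    """Size of the smallest set of users sharing identical values for `features`."""
    keys = [tuple(p[f] for f in features) for p in profiles.values()]
    return min(Counter(keys).values())


# Each feature alone leaves every user hidden among at least one other user.
for feature in ("gaze", "head", "grip"):
    print(feature, smallest_group(users, [feature]))

# Combined, every user in this sample becomes unique (group size 1).
print("combined", smallest_group(users, ["gaze", "head", "grip"]))
```

A smallest-group size of 1 means at least one person can be singled out from the combination alone, which is the situation the composite-profile concern describes.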

Sensitivity and Scale of Data Collection

The continuous capture of multiple biometric signals and the real-time nature of data collection are fundamental operational differences that set VR/AR apart from traditional digital platforms. This extensive volume and variety of data transform AR/VR environments into a "goldmine" for various actors, including advertisers, cybercriminals, and even authoritarian governments.

The data collected can be used to infer emotions and reactions, create unique biometric signatures, and even reveal real-world identities from ostensibly anonymous avatars through the analysis of unique walking patterns, gaze direction, and hand movements. Significant concerns also arise regarding profiling and discrimination, such as the potential use of VR behavioral data by employers to screen job applicants based on cognitive response tests.

The metaverse, as an advanced iteration of VR/AR, involves "vast streams of personal information," where every movement, gesture, and interaction serves as a data source. This leads to "deeper profiling" and "constant monitoring," facilitated by an expanded array of connected devices like wearables, motion sensors, microphones, and heart/respiratory monitors. Such pervasive monitoring also enables the inference of "special categories of personal data," including physiological responses, emotions, vital signs, and potentially even political beliefs or sexual orientation. The inherent sensitivity of this data necessitates stringent protective measures.

Implications of Data Sensitivity and Persistence

The unique data collection capabilities of VR/AR technologies introduce profound implications for data privacy and security, extending beyond the mere volume of information.

The "Inferred Sensitivity" Challenge: VR/AR systems collect raw biometric and behavioral data, such as eye movements, gait, and heart rate. When this raw data undergoes specific technical processing, it can be used to derive highly sensitive information about an individual, including their emotions, cognitive states, reactions, and potentially even their political beliefs or sexual orientation. Under GDPR, biometric data processed for the purpose of uniquely identifying a person is a special category; processing it is prohibited unless an Article 9(2) condition applies, and in consumer VR/AR that condition will in practice usually be explicit consent. A critical point is that the derivation of other special categories of data from seemingly innocuous raw data points (e.g., physiological responses) creates a new, often hidden layer of sensitivity that may not be immediately obvious to users or even developers. This means that data not initially collected as special category data can become so through subsequent processing. This necessitates that organizations conduct Data Protection Impact Assessments (DPIAs) that extend beyond merely assessing the data collected to rigorously evaluating the potential inferences that can be drawn from that data and their profound implications for data subjects' fundamental rights and freedoms. It also complicates the "purpose limitation" principle, as inferred data might be used for purposes beyond the user's initial understanding or explicit consent, potentially leading to unforeseen ethical and legal liabilities.

The "Persistent Digital Twin" Risk: VR/AR technologies are capable of generating highly unique "biometric signatures" and "kinematic profiles" from user interactions, described as being "as distinctive as a fingerprint". Even when users adopt "anonymous avatars," their real-world identity can be revealed through the analysis of their unique walking patterns, gaze direction, and hand movements. This capability suggests the creation of a persistent, uniquely identifiable digital representation or "digital twin" of the user, based on their intrinsic physical and behavioral characteristics within virtual environments. Crucially, this digital twin can be re-identified and linked back to the individual across multiple sessions, different platforms, and even physical locations. The emergence of such persistent digital twins fundamentally challenges the efficacy of traditional anonymization and pseudonymization techniques in VR/AR. Simply removing direct identifiers may be insufficient to truly de-identify users, significantly increasing re-identification risks. This persistent digital identity also dramatically amplifies risks related to long-term profiling, algorithmic discrimination, and pervasive surveillance. Consequently, fundamental GDPR rights, such as the "right to be forgotten" and the principle of data minimization, become exceptionally difficult to implement and enforce effectively in these immersive and interconnected environments.

Key Data Types Collected by VR/AR and their Sensitivity under GDPR

4. GDPR Core Principles: Implications for VR and AR

The General Data Protection Regulation (GDPR) is built upon seven core principles that guide the lawful and ethical processing of personal data. The unique characteristics of VR and AR technologies introduce specific challenges and implications for each of these foundational principles.

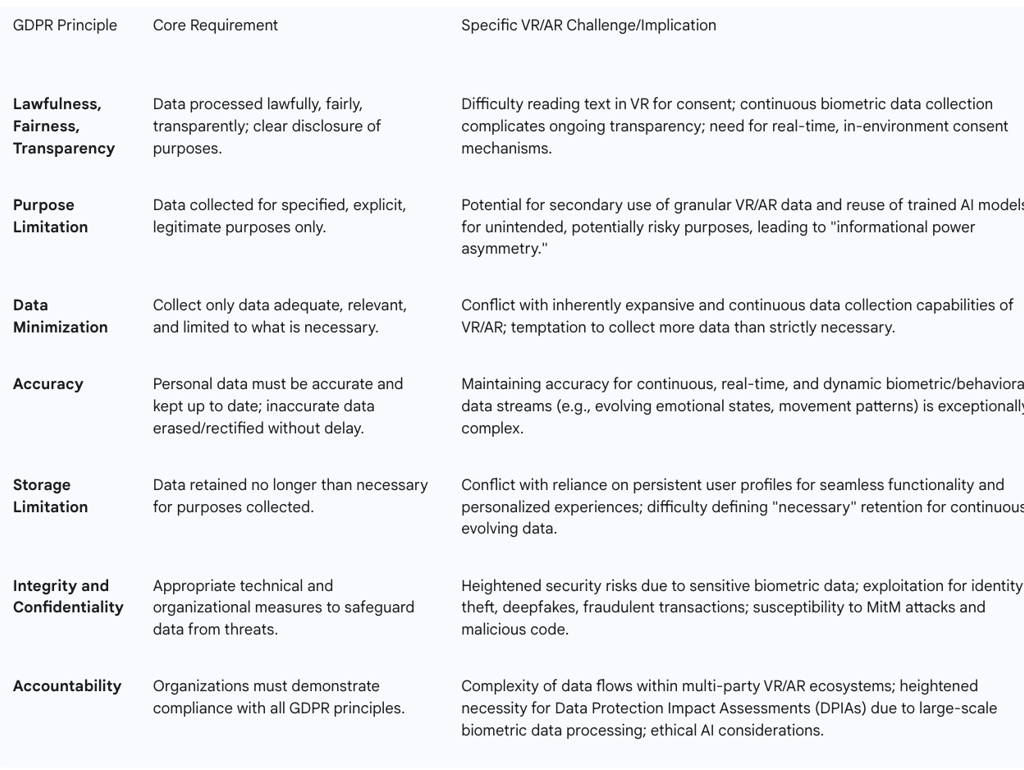

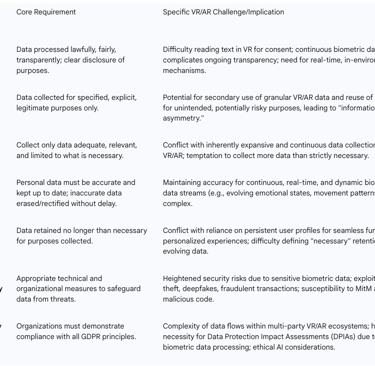

Lawfulness, Fairness, and Transparency

The principle of lawfulness, fairness, and transparency requires that personal data processing respects the data subjects' rights and freedoms, ensuring data is handled reasonably and with their well-being in mind. Processing must be based on a valid legal basis, which can include consent, contractual necessity, legal obligation, protection of vital interests, tasks carried out in the public interest, or legitimate interests. Notably, biometric data processed to uniquely identify a person is a special category of data, for which explicit consent is in practice the condition most VR/AR providers will need to rely on.

Transparency mandates that organizations provide full and honest disclosure of all intentions and purposes for data collection and processing. This requires that shared data be intelligible, easily accessible, contain all necessary and relevant information, and be provided in a timely manner. Any subsequent changes to processing activities or additional purposes must also be clearly disclosed.

Challenges in VR/AR: Traditional consent mechanisms, such as static, text-heavy forms, are largely ineffective and "break down" in immersive VR environments where reading text can be difficult and disruptive. The continuous nature of biometric data collection in VR systems throughout entire sessions complicates the provision of ongoing, real-time transparency and consent. Users must possess a clear and precise understanding of exactly what biometric data is being collected and how it will be used. The inherent lack of transparent control mechanisms for users regarding data collection and utilization in VR/AR presents a significant privacy risk.

Purpose Limitation

Organizations are permitted to collect personal data only for specified, explicit, and legitimate purposes, and must not process it in a manner incompatible with those purposes. They must clearly outline the end goal and retain data only for the duration necessary to achieve that goal. Limited flexibility is allowed for processing carried out for archiving in the public interest, scientific or historical research, or statistical purposes.

Challenges in VR/AR: A significant challenge arises from the potential for secondary use of highly granular VR/AR data and the reuse of trained Artificial Intelligence (AI) models for numerous purposes beyond their original intent. This can include potentially risky or malicious applications such as differential pricing or generating fake news. This practice contributes to an "asymmetry of informational power" between data companies and society. Concerns specifically include the reuse of insights about psychological dispositions (e.g., depression) in AI-assisted hiring procedures. The "unaccounted multiplication of informational power asymmetry" is directly linked to the legal ability to reuse trained AI models for secondary purposes that may not be publicly visible. To address this, the concept of "purpose limitation for models" is emerging, advocating that the production and use of AI models must be limited to specific, explicitly stated purposes determined ex ante and rigorously enforced throughout the AI model's lifecycle.
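One way to picture purpose limitation for models is a gate that refuses any use not declared when the model was produced. The following is a minimal sketch under stated assumptions: `ModelCard`, `PurposeViolation`, `authorize_use`, and the purpose strings are all hypothetical names, not part of any regulation or library.

```python
from dataclasses import dataclass
from typing import FrozenSet


class PurposeViolation(Exception):
    """Raised when a model is requested for a purpose it was not declared for."""


@dataclass(frozen=True)
class ModelCard:
    name: str
    # Purposes fixed before training (ex ante); frozen so they cannot be widened later.
    allowed_purposes: FrozenSet[str]


def authorize_use(model: ModelCard, requested_purpose: str) -> None:
    """Allow the call only if the purpose was declared up front."""
    if requested_purpose not in model.allowed_purposes:
        raise PurposeViolation(
            f"{model.name} is not approved for '{requested_purpose}'"
        )


gaze_model = ModelCard("gaze-comfort-v1", frozenset({"comfort_calibration"}))

authorize_use(gaze_model, "comfort_calibration")      # declared purpose: passes
try:
    authorize_use(gaze_model, "hiring_screening")     # secondary use: blocked
except PurposeViolation as err:
    print(err)
```

A gate like this only records and enforces a decision; deciding which purposes are compatible remains a legal assessment.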

Data Minimization

Personal data must be "adequate, relevant, and limited to what is necessary in relation to the purposes for which they are processed". Organizations should retain only the absolute minimum amount of data required for their stated purposes and are explicitly prohibited from collecting personal data merely on the possibility that it could be useful later.

Challenges in VR/AR: The inherently expansive and continuous data collection capabilities of VR/AR technologies present a direct conflict with the principle of data minimization. The immersive nature and richness of data in these environments create a strong temptation for developers and operators to collect more data than is strictly necessary.

Mitigation Strategies: Practical steps to adhere to data minimization include reviewing existing data collection processes, regularly auditing data storage systems to identify and delete redundant or unnecessary data, and establishing clear data retention policies. Anonymization and pseudonymization are crucial technical and organizational measures to implement this principle effectively. Adhering to data minimization reduces the risk of data breaches and abuse, enhances transparency, builds user trust, and promotes operational efficiency by reducing data storage and security costs.
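As a rough illustration of minimization combined with pseudonymization, the sketch below replaces the user identifier with a keyed hash and stores only a session-level aggregate instead of raw gaze samples. The field names (`dwell_ms`), the helper names, and the choice of HMAC-SHA256 are assumptions for this example. Pseudonymized data remains personal data under GDPR, since whoever holds the key can re-link it.

```python
import hashlib
import hmac
from statistics import mean


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Keyed hash of the identifier; re-linking requires the secret key."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_session(user_id: str, gaze_samples: list, secret_key: bytes) -> dict:
    """Keep a pseudonym and one aggregate; the raw samples are not stored."""
    return {
        "subject": pseudonymize(user_id, secret_key),
        "mean_dwell_ms": mean(s["dwell_ms"] for s in gaze_samples),
        "sample_count": len(gaze_samples),
    }


# Hypothetical raw samples; coordinates are dropped entirely by the aggregate.
samples = [
    {"x": 0.12, "y": 0.80, "dwell_ms": 100},
    {"x": 0.40, "y": 0.35, "dwell_ms": 200},
]
record = minimize_session("alice@example.com", samples, secret_key=b"rotate-me")
print(record["mean_dwell_ms"], record["sample_count"])
```

Whether an aggregate such as mean dwell time is sufficient depends on the stated purpose; the point of the sketch is that the decision is made before storage rather than after.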

Accuracy

Personal data must be accurate and, where necessary, kept up to date; every reasonable step must be taken to ensure that inaccurate personal data is erased or rectified without delay. Organizations are required to regularly review information held about individuals and promptly amend or delete any inaccurate data.

Challenges in VR/AR: The continuous, real-time, and dynamic nature of VR/AR data, particularly biometric and behavioral data (e.g., evolving emotional states, changing movement patterns), makes the ongoing maintenance of accuracy and ensuring timely rectification exceptionally complex. This necessitates robust mechanisms for continuous validation and updating of user profiles to ensure data integrity over time.

Storage Limitation

Personal data should not be retained for longer than is necessary for the specific purposes for which it was collected or processed.

Challenges in VR/AR: VR/AR platforms often rely on persistent user profiles to deliver seamless functionality and personalized experiences, which can directly conflict with the GDPR requirement that personal data, including biometric data, be erased or anonymized once it is no longer needed for the purposes for which it was collected. Defining what constitutes a "necessary" retention period for continuous, evolving, and highly granular biometric and behavioral data streams is a complex legal and technical challenge that requires careful consideration of both utility and privacy.
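A retention schedule can be made operational with a periodic purge. The sketch below is illustrative only: the category names and retention periods are hypothetical values, not legal guidance, and the record layout is an assumption.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention schedule, keyed by data category.
RETENTION_BY_CATEGORY = {
    "eye_tracking_raw": timedelta(days=1),
    "comfort_settings": timedelta(days=365),
}


def purge_expired(records: list, now: datetime, retention=RETENTION_BY_CATEGORY) -> list:
    """Return only the records still inside their documented retention period."""
    kept = []
    for record in records:
        limit = retention.get(record["category"])
        # A category with no documented period has no retention basis here, so it is dropped.
        if limit is not None and now - record["collected_at"] <= limit:
            kept.append(record)
    return kept


now = datetime(2025, 1, 10, tzinfo=timezone.utc)
records = [
    {"category": "eye_tracking_raw", "collected_at": now - timedelta(days=3)},
    {"category": "comfort_settings", "collected_at": now - timedelta(days=3)},
    {"category": "voice_audio", "collected_at": now - timedelta(hours=1)},
]
print([r["category"] for r in purge_expired(records, now)])
```

Dropping undocumented categories by default is a design choice: it forces every stored data type to have an explicit, reviewable retention decision.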

Integrity and Confidentiality (Security)

Organizations must implement appropriate technical and organizational measures to safeguard personal information from a range of threats, including internal risks (accidental damage or loss, unauthorized use) and external threats (cyberattacks). Access to information must be strictly limited to authorized individuals.

Challenges in VR/AR: The collection of highly sensitive biometric data by VR/AR platforms creates heightened security risks. If compromised, this data can be exploited for identity theft, the creation of deepfakes, fraudulent transactions, or unauthorized access to virtual spaces. Real-time environmental data and device usage patterns are vulnerable to exploitation for targeted attacks, intrusive advertising, or surveillance by malicious actors. The heavy reliance on real-time data transmission makes VR/AR experiences susceptible to Man-in-the-Middle (MitM) attacks, where communications can be intercepted and manipulated. The installation of software, plugins, or third-party content also creates vectors for malicious code, leading to ransomware, data corruption, or unauthorized device control. Phishing and social engineering attacks are also intensified by the immersive nature, where users can be tricked by fake avatars or experiences that appear authentic.

Mitigation Strategies: Essential measures include implementing multi-factor authentication (MFA) for accounts, employing end-to-end encryption (E2EE) for securing communications and data sharing, and ensuring regular software updates and security patches. Secure storage and transmission of data must be ensured through robust encryption protocols.

Accountability

The accountability principle requires organizations to demonstrate compliance with all GDPR principles. This involves studying current data processing practices, potentially appointing a Data Protection Officer (DPO), establishing a comprehensive personal data inventory, ensuring proper consent mechanisms are in place, and conducting Data Protection Impact Assessments (DPIAs). Data controllers bear primary legal accountability for GDPR adherence.

Challenges in VR/AR: The inherent complexity of data flows within VR/AR and the multi-party ecosystem (hardware manufacturers, platform operators, application developers) make demonstrating comprehensive accountability particularly challenging. The necessity for DPIAs is significantly heightened due to the "risks of varying likelihood and severity" posed by the large-scale processing of biometric data. Furthermore, the integration of AI components necessitates careful consideration of ethical AI development, including bias prevention and transparency in algorithmic decision-making.

Navigating Principle Application in Immersive Environments

The application of GDPR principles in VR/AR contexts reveals fundamental tensions that require innovative solutions and a forward-looking regulatory approach.

The "Usability vs. Compliance" Paradox in Consent: GDPR strictly mandates explicit, informed, and unambiguous consent, especially for the processing of sensitive biometric data. However, the immersive and dynamic nature of VR/AR environments creates a fundamental conflict with traditional consent mechanisms. Static, text-heavy consent forms are "difficult to read" and "don't work well" within VR, disrupting the user experience. Moreover, VR/AR systems involve continuous data collection throughout entire sessions, meaning consent is not a one-time event but an ongoing process. This creates a paradox: how can developers provide truly informed, granular, and easily withdrawable consent mechanisms without either breaking the immersion or overwhelming the user with constant prompts? This tension highlights a critical design challenge that goes beyond mere legal interpretation. This paradox necessitates significant innovation in the design of consent interfaces within immersive environments. Solutions like spatial dialogs, gaze-activated controls, voice commands for withdrawal, and haptic feedback notifications are not just technical enhancements but crucial enablers of GDPR compliance in VR/AR. Failure to develop such intuitive and continuous consent flows could lead to widespread non-compliance, as user consent might be deemed invalid (e.g., not "freely given" if no viable alternative exists), or users may simply bypass complex consent processes, undermining the spirit of transparency and control.

The "AI Amplification of GDPR Risks": VR/AR data, particularly the rich biometric and behavioral information, is explicitly identified as a "goldmine" for advertisers and for creating detailed user profiles. This profiling is often powered by Artificial Intelligence (AI) to enable hyper-personalization. This AI-driven personalization, while enhancing user experiences, introduces significant risks: it can lead to excessive profiling, subtle "AI-driven nudging" that influences user decisions without their full awareness, and the reinforcement of societal biases through discriminatory algorithms. The EU AI Act's classification of many VR emotion recognition and biometric categorization systems as "high-risk AI" explicitly acknowledges that AI, when integrated into immersive technologies, significantly amplifies existing GDPR risks, particularly concerning fairness, transparency, and the right not to be subject to automated decision-making. This amplification means that GDPR compliance in VR/AR cannot be addressed in isolation; it must be considered holistically in conjunction with emerging AI regulations. The use of AI shifts the compliance burden beyond just initial data collection to the entire AI model lifecycle—including the training data, model development, and deployment phases. This necessitates the implementation of "purpose limitation for models" to prevent unregulated secondary uses. Organizations must proactively integrate ethical considerations into their AI development processes, focusing on bias prevention, algorithmic transparency, and ensuring human oversight to mitigate the heightened risks of discrimination and manipulation.

GDPR Principles and their Specific Challenges/Implications in VR/AR

5. Key Compliance Challenges in VR/AR Environments

The unique characteristics of Virtual and Augmented Reality environments introduce a series of complex compliance challenges that necessitate tailored approaches beyond conventional GDPR interpretations.

Consent Management

The GDPR generally requires explicit consent for processing biometric data used to identify individuals, a critical requirement given the extensive biometric collection inherent in VR/AR systems. However, traditional consent mechanisms, such as static, text-heavy forms, are largely ineffective and "break down" in immersive environments, where reading text is difficult and disruptive to the user experience. Key challenges include interface limitations that hinder text readability, the continuous nature of biometric data collection throughout entire VR sessions, the need for precise purpose specification so users clearly understand how their biometric data is used, and the necessity for easily accessible withdrawal mechanisms. The EU AI Act further complicates this landscape by classifying many VR emotion recognition and biometric categorization systems as "high-risk AI," necessitating additional safeguards and transparency measures beyond standard consent. Recent European Data Protection Board (EDPB) guidance emphasizes that VR consent interfaces must separate consent collection from general terms of service, provide real-time processing indicators within the environment, and enable withdrawal through simple voice commands. Although a settlement under US state law is not binding precedent and has no direct effect under GDPR, the 2024 Charlotte Tilbury settlement under the Illinois Biometric Information Privacy Act (BIPA) is a notable signal: it treated virtual try-on features as biometric data collection requiring separate written notice, consent, and annual reaffirmation. This case underscores a broader global trend towards strict biometric consent requirements, highlighting the need for innovative, immersive-friendly consent solutions.
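The requirements listed above (consent per purpose, kept apart from general terms, and easy to withdraw mid-session) suggest a small append-only consent ledger. The sketch below is one possible shape under stated assumptions: `ConsentLedger` and the purpose strings are hypothetical, and a real system would also record the notice text shown and the interface used.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentLedger:
    """Append-only log of consent decisions, one entry per change, per purpose."""
    events: list = field(default_factory=list)

    def _log(self, purpose: str, granted: bool) -> None:
        self.events.append((datetime.now(timezone.utc), purpose, granted))

    def grant(self, purpose: str) -> None:
        self._log(purpose, True)

    def withdraw(self, purpose: str) -> None:
        # Could be wired to a voice command or gaze-activated control in the headset.
        self._log(purpose, False)

    def is_granted(self, purpose: str) -> bool:
        """Latest decision wins; a purpose never asked about is denied by default."""
        for _, logged_purpose, granted in reversed(self.events):
            if logged_purpose == purpose:
                return granted
        return False


ledger = ConsentLedger()
ledger.grant("eye_tracking_for_rendering")
print(ledger.is_granted("eye_tracking_for_rendering"))   # granted
print(ledger.is_granted("emotion_inference"))            # never asked: denied
ledger.withdraw("eye_tracking_for_rendering")
print(ledger.is_granted("eye_tracking_for_rendering"))   # withdrawn mid-session
```

Keeping every event, rather than overwriting a flag, preserves the history needed to demonstrate when consent was valid.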

The "Composite Profile Problem" and Anonymization

Individual data points collected by VR/AR systems, such as eye tracking, head movements, and haptic responses, may seem innocuous in isolation, but their combination creates comprehensive, uniquely identifiable "biometric profiles". GDPR explicitly recognizes datasets as biometric when they result from "specific technical processing" that enables unique identification, even if the individual components might appear anonymous on their own. This composite approach allows for the re-identification of users across different sessions, platforms, and even physical locations. Even when users adopt "anonymous avatars," their real-world identity can be inadvertently revealed through unique walking patterns, gaze direction, and hand movements.

Challenges: Achieving "true anonymization" in this context is exceptionally difficult. Anonymization aims to remove identifying information irreversibly, whereas pseudonymization only replaces direct identifiers with substitutes that can still be linked back to the person using additional information; in both cases, the inherent richness and interconnectedness of VR/AR data streams make robust de-identification challenging. Furthermore, the more data an organization holds, the more attractive a target it becomes for cybercriminals, increasing breach risks. The "composite profile problem" significantly elevates re-identification risks, suggesting that traditional anonymization techniques may be insufficient for achieving GDPR compliance in VR/AR. Organizations must proactively and continuously maintain the integrity of any purportedly anonymized data.

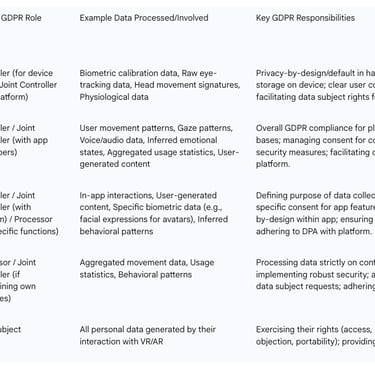

Defining Controller and Processor Roles

Under GDPR, a data controller is the natural or legal person who determines both the purposes and the means of processing personal data: essentially, the "why" and "how" of data use. Controllers bear primary legal accountability for GDPR adherence. A data processor is a person or body that processes personal data strictly on behalf of and according to the instructions of the controller. Processors are responsible for implementing security measures and promptly notifying controllers of any data breaches.

Complexities in VR/AR: The VR/AR ecosystem is inherently multi-layered, involving numerous actors such as headset manufacturers, platform operators, application developers, and third-party analytics providers. Each of these entities may collect and process different types of data for various, often interdependent, purposes.

Joint controllership scenarios occur when two or more data controllers jointly determine the purposes and means of processing personal data. Joint controllership can manifest in various forms, with the participation and influence of different controllers potentially being unequal. For instance, a VR platform operator and an application developer might jointly determine how user behavioral data is collected and utilized for a shared objective, such as optimizing a virtual experience or enabling cross-platform functionality.

Challenges: Accurately determining who decides "what" and "how" data is processed is crucial, as the legal qualification depends on the factual circumstances rather than merely contractual designations. If a processor independently decides on the objectives and means of processing, they risk being reclassified as a controller, incurring full controller liabilities. The dynamic nature of VR/AR data flows means the same stakeholder may assume multiple data-related roles, necessitating a meticulous case-by-case analysis and clear mapping of data flows to assign responsibilities accurately.

Responsibilities: Controllers are broadly responsible for complying with GDPR principles, establishing legal bases for processing, providing clear privacy notices, conducting DPIAs, implementing robust security measures, managing data subject rights requests, engaging compliant data processors, and handling cross-border data transfers. Processors, in turn, must strictly follow controller instructions, ensure data security, and assist controllers in fulfilling their GDPR obligations.

Cross-Border Data Transfers

VR/AR applications inherently operate across national borders, creating significant complexities in determining which country's laws apply in specific data processing scenarios. The decentralized and distributed nature of the metaverse often involves data being stored and processed across multiple international jurisdictions. GDPR imposes stringent requirements for international data transfers, stipulating that personal data can only be transferred to countries that ensure an adequate level of privacy protection.

Challenges: Traditional legal systems and jurisdictional tests (e.g., "minimum contacts" in the US, or "where the harmful action happened" in Europe) become "meaningless" in virtual worlds where users can exist in multiple virtual spaces simultaneously and actions occur in non-physical locations. This ambiguity creates substantial legal uncertainty for companies, which could face lawsuits in numerous countries simply because their platform is globally accessible. There is a fundamental disagreement on whether virtual worlds should be governed by their internal rules (software code, game rules) or by the laws of the users' physical locations, potentially forcing a single virtual world to comply with hundreds of different national laws concurrently. Real court cases, such as a Pennsylvania court asserting jurisdiction over a California VR company due to local advertising and payments, underscore this confusion.

Mechanisms: GDPR provides specific legal mechanisms to facilitate cross-border data transfers in the absence of an Adequacy Decision, including Binding Corporate Rules (BCRs) and Standard Contractual Clauses (SCCs). Adequacy Decisions, issued by the European Commission, allow data transfers to specific third countries without requiring additional safeguards.

Practical Implications: These complexities necessitate meticulous due diligence on data recipients, the establishment of comprehensive contractual agreements (Data Processing Agreements), and potentially the consideration of data localization strategies or the implementation of strong encryption.

AI Integration and Profiling

AI-driven personalization within VR/AR environments can lead to "excessive profiling," "AI-driven nudging" (influencing user decisions without their awareness), and the reinforcement of discrimination through biased AI algorithms. VR/AR platforms are a "goldmine" for advertisers, actively utilizing collected personal data for highly targeted advertising campaigns. GDPR grants individuals the right not to be subject to decisions based solely on automated processing, including profiling, when such decisions produce legal effects or similarly significantly affect them. In metaverse applications powered by AI, automated profiling could influence critical aspects such as virtual job applications, access permissions to virtual spaces, or even personalized pricing for digital assets. The EU AI Act introduces another layer of regulation by classifying many VR emotion recognition and biometric categorization systems as "high-risk AI," thereby mandating additional safeguards and transparency measures.

Challenges: Ensuring full transparency in the operation of AI algorithms, actively preventing algorithmic bias, and upholding human autonomy in AI-influenced decisions are paramount challenges.

Operationalizing Data Subject Rights

GDPR enshrines several important rights for individuals regarding their personal data: the right to be informed, the right of access, the right to rectification, the right to erasure (also known as the "right to be forgotten"), the right to restrict processing, the right to data portability, the right to object to processing, and rights related to automated decision-making and profiling.

Challenges in VR/AR:

Right to Access and Data Portability: VR/AR systems generate extensive and complex datasets, including interaction logs, avatar configurations, and highly granular biometric identifiers. Ensuring that users can easily download and transfer this data in a structured, commonly used, and machine-readable format without undue barriers is a significant operational challenge.

Right to Erasure/Be Forgotten: This right becomes particularly problematic in a metaverse economy that relies on persistent digital identities and often incorporates decentralized systems. If avatars, transaction histories, and social interactions are stored across multiple servers or on blockchain-based ledgers, achieving permanent and verifiable data deletion may not always be technically feasible. This necessitates exploring innovative technical solutions, such as advanced anonymization and pseudonymization techniques, to meet regulatory obligations. The California Privacy Rights Act (CPRA) also mandates automatic deletion of biometric data once its original collection purposes expire, highlighting a broader regulatory trend.

Right to Object and Restrict Processing: Implementing mechanisms for users to limit data use or object to specific processing purposes for continuous, real-time data streams in immersive environments is technically and operationally complex.
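
As a rough illustration of the "structured, commonly used, and machine-readable format" requirement, the sketch below bundles a user's datasets into a single JSON document. The `export_user_data` function, the dataset names, and the metadata fields are assumptions made for this example, not a prescribed export schema.

```python
import json
from datetime import datetime, timezone

def export_user_data(user_id: str, datasets: dict) -> str:
    """Bundle a user's datasets into one JSON document with export metadata."""
    bundle = {
        "user_id": user_id,
        "exported_at": datetime.now(timezone.utc).isoformat(),
        "format_version": 1,  # hypothetical version tag for the export layout
        "datasets": datasets,
    }
    return json.dumps(bundle, indent=2, sort_keys=True)

# Hypothetical datasets; a real platform would pull these from its own stores.
datasets = {
    "interaction_logs": [{"t": "2025-01-01T10:00:00Z", "event": "grab_object"}],
    "avatar_config": {"height_cm": 175, "style": "default"},
}
exported = export_user_data("user-123", datasets)
```

JSON is used here only because it is widely supported; very large sensor streams would likely need a chunked or binary format alongside it.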

Mitigation Strategies: Organizations must provide clear means for users to correct or update their information and ensure data subjects can request to see their data, ask for corrections, or request deletion. Developing effective deletion workflows, potentially leveraging blockchain-based deletion ledgers for immutable compliance documentation, SDK-level data lifecycle management with automatic cleanup, and embedding machine-readable retention policies in biometric metadata for automated compliance, are crucial.
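
One way to picture a machine-readable retention policy with automatic cleanup is sketched below in Python. The `BiometricSample` class, its `retention_days` field, and the `purge_expired` helper are hypothetical names; the retention periods shown are arbitrary examples, not recommended values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass
class BiometricSample:
    kind: str
    collected_at: datetime
    retention_days: int  # retention policy travels with the data as metadata

    def expired(self, now: datetime) -> bool:
        """True once the retention window has fully elapsed."""
        return now >= self.collected_at + timedelta(days=self.retention_days)

def purge_expired(samples, now):
    """Split samples into (kept, purged); a real store would delete the purged ones."""
    kept = [s for s in samples if not s.expired(now)]
    purged = [s for s in samples if s.expired(now)]
    return kept, purged

# Fixed clock and sample data, chosen only to make the example deterministic.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
samples = [
    BiometricSample("eye_tracking", datetime(2025, 5, 30, tzinfo=timezone.utc), 7),
    BiometricSample("heart_rate", datetime(2025, 1, 1, tzinfo=timezone.utc), 30),
]
kept, purged = purge_expired(samples, now)
```

Running a routine like this on a schedule inside the SDK, rather than relying on manual review, is what makes the cleanup "automatic" in the sense described above.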

Children's Data Protection

VR technologies are frequently targeted towards and used by young gamers, making the protection of children's data a critical concern.

Challenges: A significant practical difficulty lies in accurately ascertaining whether a VR user is a child and, consequently, obtaining valid parental consent for data processing. Minors represent a substantial and increasingly tech-savvy portion of the gaming audience, often navigating these environments with advanced digital literacy.

Mitigation Strategies: VR providers must implement adequate procedures and robust security measures specifically designed to protect children's personal data. This includes designing and implementing VR technologies in strict compliance with privacy-by-design and privacy-by-default principles from the very outset. Organizations should regularly review their practices for protecting children's personal data and actively explore and implement more effective age verification and parental consent mechanisms beyond simple reliance on user declarations.

Interdependencies and Regulatory Gaps

The inherent complexity of VR/AR environments creates unique challenges that highlight the need for a nuanced understanding of regulatory application.

The "Distributed Accountability" Dilemma: The VR/AR ecosystem is inherently complex, involving a multitude of actors: headset manufacturers, platform operators, application developers, and potentially various third-party analytics or service providers. Each of these entities may collect, process, and share data, leading to intricate and often ambiguous controller-processor or joint-controller relationships. The GDPR's definition of a controller, based on "who decides what and how," becomes exceptionally nuanced when data flows are real-time, continuous, and interdependent across distinct hardware and software layers. This distributed nature of data processing makes it incredibly challenging to pinpoint ultimate accountability for GDPR compliance, particularly in the event of a data breach or when data subject rights are invoked across multiple entities. This dilemma necessitates the establishment of exceptionally robust and meticulously drafted contractual agreements, specifically Data Processing Agreements (DPAs), between all parties involved in the VR/AR data supply chain. It also implies a greater prevalence of joint controllership scenarios, which legally mandate clear, transparent arrangements outlining each party's specific responsibilities and liabilities. Regulatory bodies may need to issue highly specific guidance on how to apply controller/processor definitions to the unique multi-stakeholder model of immersive environments, potentially requiring new models of shared liability.

The "Jurisdictional Quagmire" for Cross-Border Data: VR/AR platforms, by their very design, are global in reach, allowing users and data to span dozens of countries simultaneously. Traditional legal tests for jurisdiction, such as "minimum contacts" in the US or "where the harm occurred" in Europe, become "meaningless" in virtual worlds that lack a fixed physical presence. This fundamental disconnect creates a complex situation where a single virtual world platform might theoretically be subject to "hundreds of different countries' laws" regarding various issues like speech, violence, or data privacy. Real-world cases, like a Pennsylvania court asserting jurisdiction over a California VR company due to local advertising and payments, underscore this confusion. This "jurisdictional quagmire" makes cross-border data transfers exceptionally complex, risky, and unpredictable for VR/AR companies. It significantly increases the likelihood of conflicting legal obligations and the potential for simultaneous lawsuits in multiple jurisdictions. The existing GDPR mechanisms for international transfers (SCCs, BCRs, adequacy decisions), while foundational, may not be sufficiently agile or comprehensive to effectively govern the real-time, continuous, and globally distributed nature of VR/AR data flows. This highlights a critical and urgent need for greater international cooperation and the potential development of harmonized regulatory frameworks specifically tailored to virtual worlds to ensure legal certainty, facilitate responsible innovation, and enable effective enforcement.

Roles and Responsibilities in the VR/AR Data Ecosystem

6. Regulatory Landscape and Guidance

The regulatory environment for data protection in emerging immersive technologies is dynamic, with various authorities issuing guidance and frameworks to address the unique challenges.

European Data Protection Board (EDPB) Guidelines

The European Data Protection Board (EDPB) plays a pivotal role in clarifying EU data protection laws by issuing general guidance, recommendations, and best practices. Recognizing the complexities of immersive technologies, the EDPB has provided specific guidance on VR consent interfaces, emphasizing the need for consent collection to be isolated from general terms of service, real-time processing indicators within the environment, and easily accessible withdrawal mechanisms through simple voice commands.

Furthermore, the EDPB's work intersects with Artificial Intelligence (AI) regulation: the EU AI Act classifies many VR emotion recognition and biometric categorization systems as "high-risk AI," which mandates additional safeguards and transparency measures for these systems. The EDPB has also issued guidelines on the processing of personal data through blockchain technologies and on pseudonymization, both of which are relevant given the distributed and data-intensive nature of immersive environments. Their opinion on AI systems and data protection law provides crucial insights into how GDPR principles apply to AI models, including the concept of "purpose limitation for models." Additionally, updated guidelines on the ePrivacy Directive address evolving tracking technologies, including pixel tracking and the use of unique identifiers, underscoring the requirement for user consent before accessing information on terminal equipment like VR headsets. While not specific to VR/AR, the EDPB's guidelines on connected vehicles offer relevant principles concerning biometric and location data, privacy by design, data minimization, and data subject rights, which can be analogously applied to immersive technologies.

ICO Guidance (UK Context)

In the United Kingdom, the Information Commissioner's Office (ICO) provides comprehensive guidance and resources for UK GDPR compliance. The ICO has issued specific guidance on AI and data protection, addressing critical aspects such as accountability, transparency, lawfulness, accuracy, fairness, security, data minimization, and individual rights within AI systems. This guidance is particularly relevant given the significant role of AI in VR/AR experiences. However, it is important to note that the ICO's current guidance is under review due to the Data (Use and Access) Act, which came into law on June 19, 2025, indicating potential future changes. As of current information, there are no specific ICO guidance documents directly addressing VR/AR technologies beyond this general AI guidance. Organizations operating in the UK must monitor these updates to ensure ongoing compliance.

Future Regulatory Trends and Amendments

The global regulatory landscape for data privacy is continuously evolving, with a clear trend towards stricter regulations and increased enforcement. Governments worldwide are expected to continue implementing more stringent data privacy laws, similar to the GDPR and California Consumer Privacy Act (CCPA). There is a growing consensus on the need for a more harmonized global approach to data protection, which would streamline compliance for businesses operating across borders and ensure consistent consumer protection. Regulations must adapt proactively to address new challenges posed by rapidly advancing technologies, requiring a forward-looking stance on legislation that anticipates future developments. Compliance with global regulations, including updates to existing laws like GDPR, CCPA, and China's Personal Information Protection Law (PIPL), as well as new frameworks like the EU-U.S. Data Privacy Framework, will be vital for achieving cyber resilience.

Potential amendments to the GDPR are also on the horizon. The European Commission published proposed amendments as part of its "Simplification Omnibus IV" package on May 21, 2025, signaling a material shift towards economic pragmatism. These proposals include a revised Article 30(5), which would remove record-keeping obligations for certain small and medium-sized enterprises (SMEs) and small mid-cap enterprises (SMCs), meaning organizations with fewer than 750 employees, provided their processing activities are not likely to result in "high risk" to data subjects' rights and freedoms. This recalibration reflects a political willingness to adjust GDPR enforcement, moving from "absolutism" toward "pragmatism."

Looking ahead, there will be an increased focus on data minimization and the adoption of advanced privacy-preserving technologies such as homomorphic encryption and differential privacy. Consumers will demand enhanced control and transparency over their personal data, fostering the rise of privacy-focused business models. The ubiquity of AI will place self-determination and control at the center of global privacy debates. Data Protection Authorities (DPAs) are expected to gain a more prominent role in enforcing GDPR at the intersection with new EU acts, including the EU AI Act. Regulators will also intensify their focus on controlling synthetic data, advanced emotional chatbots, and the proliferation of deepfakes, recognizing their potential for harmful content.

7. Industry Best Practices and Technical Solutions

Achieving GDPR compliance in the complex and evolving VR/AR landscape requires a strategic integration of best practices and technical solutions, rooted in a privacy-centric development philosophy.

Privacy-by-Design and Default Principles

Privacy-by-design and default are foundational to ethical VR/AR development, advocating for the embedding of privacy defenses and data safeguards into technical designs, organizational practices, and processes from the very outset, rather than as an afterthought. This proactive approach involves continuous risk assessment and the regular conduct of Data Protection Impact Assessments (DPIAs) to identify and mitigate potential privacy issues before they manifest.

A core tenet is "privacy as the default setting," meaning that users' data is protected automatically without requiring them to adjust complex settings. Privacy should be inherently embedded into the design and architecture of IT systems and business practices, ensuring that systems are built to respect privacy from the ground up. This approach aims for "full functionality" – a positive-sum outcome where privacy is integrated without diminishing system capabilities. "End-to-end security" emphasizes full lifecycle protection, encompassing secure data storage, careful handling during processing, and secure deletion practices. Transparency is crucial, requiring clear, honest communication about how data is used, stored, and shared through accessible privacy policies. Ultimately, the approach must be "user-centric," valuing individual privacy preferences and providing users with control over their data through easy-to-use privacy settings and clear options for consent.

Secure Data Architectures

Implementing robust secure data architectures is paramount for protecting the highly sensitive data collected by VR/AR systems. This includes:

Data Encryption: Sensitive data must be encrypted both during transit (e.g., when moving between devices and servers) and when at rest (e.g., in storage databases).

Multi-Factor Authentication (MFA): Implementing MFA for all VR/AR accounts significantly enhances security by requiring multiple forms of verification, making unauthorized access far more difficult.

End-to-End Encryption (E2EE): E2EE should be employed for securing communications and data sharing within and across VR/AR platforms, ensuring that only the sender and intended recipient can read the messages.

Robust Access Controls: Strict access controls must be implemented to ensure that only authorized personnel can access user data, limiting internal threats.

Regular Software Updates and Security Patches: Timely application of updates and patches is essential to address newly discovered vulnerabilities and protect against evolving cyber threats.

Data Partitioning: Inspired by practices in connected vehicles, partitioning vital VR/AR system functions from those constantly relying on telecommunication capacities can enhance security by isolating sensitive operations.

Blockchain-based Deletion Ledgers: For ensuring immutable compliance documentation and verifiable data deletion, especially in decentralized environments, blockchain-based deletion ledgers offer a promising technical solution.
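
The tamper-evidence idea behind a deletion ledger can be sketched with a simple hash chain. This Python example is a simplification and an assumption on our part: it is not a full blockchain (there is no distribution or consensus), and the `DeletionLedger` class and its field names are hypothetical. The `record_ref` values are assumed to be opaque references, since storing personal data in an append-only log would itself conflict with erasure.

```python
import hashlib
import json

class DeletionLedger:
    """Append-only log where each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder hash preceding the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        """SHA-256 over a canonical JSON encoding of the entry body."""
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record_deletion(self, record_ref: str, deleted_at: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"record_ref": record_ref, "deleted_at": deleted_at, "prev_hash": prev_hash}
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain links are intact."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("record_ref", "deleted_at", "prev_hash")}
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True

ledger = DeletionLedger()
ledger.record_deletion("rec-001", "2025-06-01T12:00:00Z")
ledger.record_deletion("rec-002", "2025-06-02T09:30:00Z")
```

Editing any earlier entry changes its hash and breaks every later link, so `verify()` returns `False` after tampering. The ledger documents that a deletion was recorded; it does not by itself prove the underlying data was destroyed.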

User-Centric Privacy Controls

Empowering users with meaningful control over their data is a cornerstone of GDPR compliance in VR/AR. This involves:

Explicit Consent with Clear Explanations: Users must provide explicit consent for data collection and processing, accompanied by clear, easily understandable explanations of data usage and privacy policies.

Opt-in/Opt-out Choices: Providing clear and accessible mechanisms for users to opt-in or opt-out of specific data collection preferences and invasive features is crucial.

In-Environment Consent Interfaces: Innovative consent interfaces are needed that are native to the immersive environment, such as spatial dialogs that appear as floating interfaces, gaze-activated controls for consent, voice commands for withdrawal, and haptic feedback notifications to alert users when biometric processing begins or changes intensity. These solutions aim to provide transparency without disrupting immersion.

Means for Data Correction and Update: Organizations must provide clear and easy-to-use means for users to correct or update their information.

SDK-level Data Lifecycle Management: Implementing SDK-level data lifecycle management with automatic cleanup ensures that data is deleted when retention periods expire, aligning with storage limitation principles.

Machine-Readable Retention Policies: Embedding machine-readable retention policies in biometric metadata can facilitate automated compliance with data retention requirements.

Regular Privacy Setting Reviews: Users should be encouraged and enabled to regularly review and adjust their privacy settings to control data exposure.

Transparent Data Policies: Platform providers must ensure transparent data policies that clearly communicate data practices and allow users to opt out of intrusive data collection.
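
The controls listed above imply some record of what each user has agreed to, per purpose, with withdrawal as easy as granting. A minimal Python sketch follows; the `ConsentRegistry` class, its methods, and the purpose names are hypothetical, and a voice command or gaze control in the headset would simply be one way of calling `withdraw`.

```python
from datetime import datetime, timezone

class ConsentRegistry:
    """Tracks per-user, per-purpose consent with an append-only history."""

    def __init__(self):
        self._state = {}   # (user_id, purpose) -> currently granted?
        self.history = []  # audit trail of every grant and withdrawal

    def _record(self, user_id: str, purpose: str, granted: bool) -> None:
        self._state[(user_id, purpose)] = granted
        self.history.append({
            "user_id": user_id,
            "purpose": purpose,
            "granted": granted,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def grant(self, user_id: str, purpose: str) -> None:
        self._record(user_id, purpose, True)

    def withdraw(self, user_id: str, purpose: str) -> None:
        self._record(user_id, purpose, False)

    def allows(self, user_id: str, purpose: str) -> bool:
        # Privacy by default: no recorded consent means no processing.
        return self._state.get((user_id, purpose), False)

registry = ConsentRegistry()
registry.grant("user-123", "eye_tracking_analytics")
```

Keying consent by purpose rather than storing one global flag is what allows granular control, and the history list gives the controller evidence of when consent was given or withdrawn.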

Ethical AI Integration

Given the deep integration of AI in VR/AR, ethical considerations are paramount:

Bias Prevention: Any AI systems used within VR/AR environments must be actively designed to be free from biases, particularly concerning content recommendations, facial recognition, and behavioral profiling, to prevent discriminatory outcomes.

Transparency in Algorithms: Developers should provide users with an understanding of how algorithms influence their experience, fostering trust and enabling informed choices.

Regular Audits: Conducting regular audits of AI systems is essential to detect and mitigate biases, ensuring fairness and compliance.
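
As one possible starting point for such an audit, the Python sketch below compares the rate of favourable automated decisions across groups. It uses a demographic parity gap, which is only one of several fairness measures and is our choice for illustration; the function names, group labels, and decision data are hypothetical.

```python
from collections import defaultdict

def positive_rates(decisions):
    """Map each group to its share of positive outcomes.

    decisions: iterable of (group, outcome) pairs, outcome being True or False.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}

def parity_gap(decisions) -> float:
    """Difference between the highest and lowest group positive rate."""
    rates = positive_rates(decisions)
    return max(rates.values()) - min(rates.values())

# Made-up audit sample: e.g. whether a recommender surfaced a premium space.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
gap = parity_gap(decisions)
```

A large gap does not prove discrimination on its own, but it flags where a human reviewer should look more closely.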

Continuous Compliance and User Education

Maintaining compliance in VR/AR is an ongoing process that requires continuous vigilance and user empowerment:

Privacy Impact Assessments (PIAs): Conducting PIAs before launching new VR/AR features helps organizations understand potential compliance risks and address them proactively.

Regular Review of Data Practices: Regularly reviewing and updating data collection practices ensures ongoing alignment with data minimization and other GDPR principles.

Data Retention Policies: Establishing clear data retention policies for all types of data collected is crucial for compliance with storage limitation.

Cybersecurity Awareness: Promoting cybersecurity awareness among users and employees is vital to mitigate risks associated with phishing, social engineering, and other cyber threats.

Ethical Guidelines: Establishing and enforcing ethical guidelines for AR/VR interactions can help prevent harassment and abuse within virtual environments.

Employee Training: Comprehensive training for employees on data protection laws, regulations, and best practices is essential for fostering a privacy-aware culture.

Third-Party Accountability: Ensuring that any third parties involved in data handling adhere to stringent data privacy and security standards, and informing users of such access, is critical for maintaining accountability across the ecosystem.

8. Conclusion and Recommendations

The integration of GDPR compliance within the rapidly evolving landscape of Virtual and Augmented Reality presents a complex yet critical challenge. These immersive technologies fundamentally alter the nature and scale of personal data collection, moving from discrete interactions to continuous, real-time capture of highly intimate biometric and behavioral information. This shift creates comprehensive "digital twins" of users, leading to an escalation in data granularity and sensitivity, and amplifying risks related to profiling, re-identification, and unforeseen inferences of special category data. The inherent "regulatory lag" between technological innovation and legislative adaptation further complicates the compliance environment, necessitating a proactive and adaptive approach from all stakeholders.

The application of core GDPR principles to VR/AR reveals significant tensions. Traditional consent mechanisms prove inadequate in immersive environments, demanding innovative, in-environment solutions that balance usability with explicit, granular control. The principle of purpose limitation is challenged by the potential for secondary use of rich VR/AR data and AI models, highlighting the need for "purpose limitation for models" to prevent misuse. Data minimization, accuracy, and storage limitation face hurdles due to the continuous, dynamic, and persistent nature of immersive data. Furthermore, the multi-layered VR/AR ecosystem complicates the clear assignment of data controller and processor roles, leading to a "distributed accountability" dilemma that requires robust contractual agreements and potentially new models of shared liability. The global reach of VR/AR platforms also creates a "jurisdictional quagmire" for cross-border data transfers, underscoring the urgent need for international cooperation and harmonized regulatory frameworks.

Recommendations

To navigate these complexities and foster a trustworthy immersive digital future, the following recommendations are put forth for developers, platform operators, and regulators:

Embrace Privacy-by-Design and Default: Integrate privacy and data protection considerations into every stage of VR/AR development, from conception to deployment. This includes designing systems that collect only necessary data, default to the most privacy-protective settings, and ensure end-to-end security throughout the data lifecycle.

Innovate Consent Mechanisms: Develop intuitive, in-environment consent interfaces that are easily understandable, allow for granular control over data types and processing purposes, and provide simple, real-time mechanisms for withdrawal of consent (e.g., via gaze, voice commands, or haptic feedback).

Prioritize Data Minimization and Robust Anonymization: Rigorously assess data collection to ensure only truly necessary data is gathered. Invest in advanced anonymization and pseudonymization techniques, recognizing the "composite profile problem" and the high re-identification risks inherent in VR/AR data. Implement automated data lifecycle management and clear retention policies.

Clarify Roles and Responsibilities: For multi-party VR/AR ecosystems, establish clear, legally binding Data Processing Agreements (DPAs) that meticulously define the roles of data controllers, processors, and joint controllers. Conduct thorough due diligence on all third-party partners to ensure their GDPR compliance.

Strengthen Security Protocols: Implement multi-factor authentication (MFA), end-to-end encryption (E2EE) for communications, encryption of data both at rest and in transit, and robust access controls. Regularly update software and apply security patches to mitigate evolving cyber threats, including those related to biometric data compromise.

Address AI-Specific Risks: When integrating AI, implement "purpose limitation for models" to prevent unauthorized secondary uses. Prioritize ethical AI development, focusing on bias prevention, algorithmic transparency, and human oversight to mitigate risks of excessive profiling, nudging, and discrimination. Adhere to "high-risk AI" requirements under the EU AI Act.

Operationalize Data Subject Rights Effectively: Develop technical solutions that enable data subjects to easily exercise their rights, particularly the right to access, portability, and erasure, for the complex and extensive datasets generated in VR/AR. This may involve exploring blockchain-based deletion ledgers for verifiable data removal in decentralized environments.

Protect Children's Data with Enhanced Vigilance: Given the prevalence of minors in VR/AR, implement robust age verification and parental consent mechanisms that go beyond simple declarations. Design experiences with children's privacy and well-being as a primary consideration, adhering strictly to privacy-by-design principles.

Advocate for Harmonized Regulation: Engage with regulatory bodies and industry consortia to push for clearer, harmonized international legal frameworks specifically tailored to immersive technologies. This will reduce jurisdictional uncertainty and facilitate responsible cross-border data flows.

Foster Continuous Education and Accountability: Promote a culture of data privacy awareness among developers, operators, and users. Conduct regular Privacy Impact Assessments, internal audits, and provide ongoing training to ensure that all stakeholders understand their responsibilities and the evolving compliance landscape.

Frequently Asked Questions

What makes data collection in Virtual and Augmented Reality (VR/AR) systems uniquely challenging for GDPR compliance?

VR/AR systems continuously collect highly sensitive biometric and behavioural data in real time, a significant departure from traditional digital platforms. This includes intimate details like eye-tracking, head movement signatures, facial expressions, and even physiological data such as heart rate. This expansive and granular data collection leads to the creation of comprehensive "digital twins" or "biometric profiles" of users, which can inadvertently reveal real-world identities and intimate personal details, even when users employ "anonymous avatars." This continuous, deep-seated data flow amplifies the potential for privacy invasion, misuse, and re-identification to an unprecedented extent.

How do VR/AR technologies create "composite profiles" and what are the GDPR implications of this?

VR/AR systems combine various individual data points (e.g., eye-tracking, head movements, haptic responses) to create comprehensive, uniquely identifiable "biometric profiles" or "biometric signatures." Even if individual data points seem innocuous, their aggregation, through "specific technical processing," allows for the unique identification and re-identification of users across different sessions, platforms, and even physical locations. Under GDPR, such composite profiles are often classified as "Special Category Data" (biometric data for identification), requiring explicit consent for processing. This capability fundamentally challenges traditional anonymisation techniques, as simply removing direct identifiers may be insufficient to truly de-identify users, significantly increasing re-identification risks and complicating the "right to be forgotten."

What are the main difficulties in obtaining and managing user consent in immersive VR/AR environments under GDPR?

Traditional consent mechanisms, such as static, text-heavy forms, are ineffective in immersive VR/AR environments, as they are difficult to read and disrupt the user experience. GDPR mandates explicit, informed, and unambiguous consent, particularly for sensitive biometric data. However, VR/AR systems involve continuous data collection throughout entire sessions, meaning consent is an ongoing process rather than a one-time event. This creates a "usability vs. compliance" paradox, requiring innovative, in-environment consent interfaces (e.g., spatial dialogues, gaze-activated controls, voice commands for withdrawal) that provide granular control and real-time transparency without breaking immersion. Failure to provide such mechanisms can render consent invalid.

How does the principle of "purpose limitation" apply to VR/AR data, especially with AI integration?

The principle of purpose limitation dictates that personal data be collected only for specified, explicit, and legitimate purposes and not further processed in a manner incompatible with those purposes. In VR/AR, a significant challenge arises from the secondary use of highly granular data and from the reuse of trained Artificial Intelligence (AI) models for purposes beyond their original intent. This can lead to an "asymmetry of informational power" and to risky applications such as differential pricing or discriminatory AI-assisted hiring. To address this, the concept of "purpose limitation for models" is emerging. It holds that the production and use of AI models derived from VR/AR data must be limited to specific, explicitly stated purposes that are determined ex ante and rigorously enforced throughout the model's lifecycle.
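
As a rough illustration of "purpose limitation for models," the sketch below binds a model to the purposes declared when it is registered and refuses any other use. The class names, the model name, and the purpose strings are hypothetical, and the inference step is a placeholder. Technical enforcement of this kind would complement, not replace, organisational and contractual controls.

```python
from dataclasses import dataclass

class PurposeViolation(Exception):
    """Raised when a model is invoked for a purpose not declared ex ante."""

@dataclass(frozen=True)
class PurposeBoundModel:
    name: str
    allowed_purposes: frozenset  # fixed at registration; frozen=True blocks reassignment

    def predict(self, features, purpose):
        if purpose not in self.allowed_purposes:
            raise PurposeViolation(f"{self.name} is not approved for '{purpose}'")
        # Placeholder for real inference: returns the mean of the features.
        return sum(features) / len(features)

attention_model = PurposeBoundModel(
    name="gaze_attention_v1",
    allowed_purposes=frozenset({"comfort_tuning"}),
)

# Declared purpose: the call goes through.
score = attention_model.predict([0.2, 0.4, 0.6], purpose="comfort_tuning")

# Undeclared secondary purpose: the call is refused.
try:
    attention_model.predict([0.2, 0.4, 0.6], purpose="differential_pricing")
    blocked = False
except PurposeViolation:
    blocked = True
```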

What are the security risks associated with VR/AR data, and what mitigation strategies are recommended?

The collection of highly sensitive biometric and behavioural data by VR/AR platforms creates heightened security risks. If compromised, this data can be exploited for identity theft, the creation of deepfakes, fraudulent transactions, or unauthorised access to virtual spaces. Real-time environmental data and device usage patterns are vulnerable to targeted attacks, intrusive advertising, or surveillance. VR/AR experiences are also susceptible to Man-in-the-Middle (MitM) attacks, malicious code injection through third-party content, and intensified phishing via immersive environments. Mitigation strategies include implementing multi-factor authentication (MFA), end-to-end encryption (E2EE) for communications and data, robust access controls, regular software updates and security patches, data partitioning, and potentially blockchain-based deletion ledgers for immutable compliance documentation.
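
Of the mitigations listed, data partitioning is simple to sketch. The example below keeps contact details and sensor telemetry in separate stores linked only by a keyed pseudonym, so a leak of the telemetry store alone does not expose account IDs. The store layout and function names are hypothetical, and in-memory dictionaries stand in for real databases. Pseudonymised data of this kind is still personal data under GDPR, so partitioning reduces exposure rather than removing obligations.

```python
import hashlib
import hmac
import secrets

# Secret linking key, assumed to be held separately from both stores.
LINK_KEY = secrets.token_bytes(32)

def pseudonym(account_id: str) -> str:
    """Stable keyed pseudonym (HMAC-SHA256); it cannot be recomputed
    from the account ID without LINK_KEY."""
    return hmac.new(LINK_KEY, account_id.encode(), hashlib.sha256).hexdigest()

identity_store = {}    # account ID -> contact details
telemetry_store = {}   # pseudonym  -> list of sensor samples

def register(account_id, email):
    identity_store[account_id] = {"email": email}

def record_sample(account_id, sample):
    telemetry_store.setdefault(pseudonym(account_id), []).append(sample)

def erase(account_id):
    """Delete one user's records from both partitions."""
    identity_store.pop(account_id, None)
    telemetry_store.pop(pseudonym(account_id), None)

register("u1", "ada@example.org")
record_sample("u1", {"gaze_x": 0.12})
telemetry_keys = list(telemetry_store)   # holds the pseudonym, not "u1"
erase("u1")
```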

Why is defining controller and processor roles so complex in the multi-layered VR/AR ecosystem?

The VR/AR ecosystem is inherently multi-layered, involving numerous actors such as headset manufacturers, platform operators, application developers, and third-party analytics providers. Each of these entities may collect and process different types of data for various, often interdependent, purposes. It is crucial to determine accurately who decides why and how personal data is processed, i.e. its purposes and means (the controller), and who merely processes data on another's behalf (the processor). Because VR/AR data flows are dynamic, the same stakeholder may hold different roles for different processing activities, leading to intricate and often ambiguous controller-processor or joint-controller relationships. This "distributed accountability" dilemma necessitates meticulous case-by-case analysis, clear contractual agreements (Data Processing Agreements), and potentially new models of shared liability to ensure compliance and pinpoint accountability.
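
The point that one stakeholder can hold different roles is easier to see when roles are recorded per processing activity rather than per company. The sketch below is a deliberate simplification for documentation purposes: the actors, activities, and the roles helper are hypothetical, and a real role determination is a legal assessment of the facts, not a lookup.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Activity:
    name: str
    decides_purposes_and_means: frozenset  # actors who determine why and how
    carries_out: frozenset                 # actors who actually handle the data

def roles(activity):
    """Map each actor to a role for a single processing activity."""
    deciders = activity.decides_purposes_and_means
    result = {}
    for actor in deciders | activity.carries_out:
        if actor in deciders:
            result[actor] = "joint controller" if len(deciders) > 1 else "controller"
        else:
            result[actor] = "processor"
    return result

in_app_analytics = Activity(
    name="in-app usage analytics",
    decides_purposes_and_means=frozenset({"app_developer"}),
    carries_out=frozenset({"analytics_vendor"}),
)
benchmark_reports = Activity(
    name="vendor's own industry benchmark reports",
    decides_purposes_and_means=frozenset({"analytics_vendor"}),
    carries_out=frozenset({"analytics_vendor"}),
)
ad_measurement = Activity(
    name="ad measurement",
    decides_purposes_and_means=frozenset({"platform_operator", "app_developer"}),
    carries_out=frozenset({"platform_operator"}),
)

# The same vendor is a processor for one activity and a controller for another.
vendor_roles = [roles(a).get("analytics_vendor")
                for a in (in_app_analytics, benchmark_reports)]
```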

How do cross-border data transfers become a "jurisdictional quagmire" in VR/AR environments?

VR/AR applications inherently operate across national borders, allowing users and data to span multiple countries simultaneously. This creates significant complexities in determining which country's laws apply, as traditional legal tests for jurisdiction become "meaningless" in virtual worlds that lack a fixed physical presence. A single VR/AR platform could theoretically be subject to "hundreds of different countries' laws," leading to substantial legal uncertainty, conflicting legal obligations, and the potential for simultaneous lawsuits in multiple jurisdictions. While GDPR provides mechanisms for international data transfers (e.g., Standard Contractual Clauses, Binding Corporate Rules), the real-time, continuous, and globally distributed nature of VR/AR data flows highlights an urgent need for greater international cooperation and harmonised regulatory frameworks specifically tailored to immersive technologies.

What is "Privacy-by-Design and Default" and why is it essential for GDPR compliance in VR/AR?

"Privacy-by-Design and Default," codified in Article 25 GDPR as data protection by design and by default, is a foundational approach that embeds privacy defences and data safeguards into the technical designs, organisational practices, and processes of VR/AR systems from the very outset. Under the "default" element, user data is protected automatically, without requiring users to adjust complex settings. The approach involves continuous risk assessment, Data Protection Impact Assessments (DPIAs) that are revisited as processing changes, and "full functionality," meaning that privacy is integrated without diminishing system capabilities. Key aspects include "end-to-end security" throughout the data lifecycle, transparent communication about data use, and a "user-centric" design that gives users clear, easy-to-use controls over their data. This early integration is crucial for navigating the complex data landscape of VR/AR and building user trust.

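The "by default" element can be sketched as a settings object in which every optional data stream starts disabled and is enabled only by an explicit user choice. The field names and the retention value below are hypothetical and illustrative, not drawn from any real headset or from a legal requirement.

```python
from dataclasses import asdict, dataclass

@dataclass
class PrivacySettings:
    """Hypothetical headset settings; every optional stream starts disabled."""
    eye_tracking_upload: bool = False
    face_expression_sharing: bool = False
    room_scan_cloud_backup: bool = False
    analytics: bool = False
    retention_days: int = 30   # illustrative value, not a legal requirement

def enabled_streams(settings):
    """Names of the optional streams the user has switched on."""
    return sorted(name for name, value in asdict(settings).items() if value is True)

fresh = PrivacySettings()                                 # a new account
opted_in = PrivacySettings(face_expression_sharing=True)  # one explicit opt-in

print(enabled_streams(fresh))     # []
print(enabled_streams(opted_in))  # ['face_expression_sharing']
```

Putting the protective values in the defaults themselves means a user who never opens the settings menu still gets the most protective configuration.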